Anna: A Key-Value Store For Any Scale

This paper (ICDE'18) introduces Anna, a CALM/CRDT implementation of a distributed key-value system both at the data structure level as well as system architecture and transaction protocol levels. Anna is a partitioned, multi-mastered key-value system that achieves high performance and elasticity via wait-free execution and coordination-free consistency. Anna employs coordination-free actors that perform state update via merge of lattice-based composite data structures.

I love the strongly opinionated introduction of this paper. This is what papers should be about: opinionated, challenging conventions, making bets, and doing hypothesis testing in the small.

Conventional wisdom says that software designed for one scale point needs to be rewritten when scaling up by 10x. Anna sets out to disprove this by showing how a key-value storage (KVS) system can be architected to scale across many orders of magnitude. (Spoiler Anna can give you only upto causal consistency, but cannot provide strong consistency at key level, and nothing stronger than read-committed at the multi-key level.)

Architecture

The high-level goal for Anna is to provide excellent performance on a single multi-core machine, while also being able to scale up elastically to geo-distributed cloud deployment. In order to achieve these goals, they take the following as design requirements.

- To ensure data scaling, partition (shard) the key space, not only across nodes at cloud scale but also across cores for high performance.

- To enable workload scaling, employ multi-master replication to concurrently serve puts and gets against a single key from multiple threads.

- To achieve maximum hardware utilization and performance within a multi-core machine, guarantee wait-free execution, i.e., coordination techniques such as locking, consensus protocols or even "lock-free" retries should be avoided.

Each actor's private state is maintained in a lattice-based data-structure, which guarantees that an actor's state remains consistent despite message delays, re-ordering, and duplication. This design pattern is uniform across threads, machines, and data-centers, which leads to a system that is simple and easy to reconfigure dynamically.

Anna exploits the associativity of lattices to minimize communication via a merge-at-sender scheme. Batches of associative updates can be merged at a sending replica without affecting results. Merging at the sender can dramatically reduce communication overhead for frequently-updated hot keys, and reduces the amount of computation performed on a receiving replica, which only processes the merged result of updates to a key, as opposed to every individual update. (In order for merge-at-sender to work, this must be assuming a request is sent to only one master. Otherwise, a receiver won't be able to merge cumulative result with its cumulative results without knowing individual operations it is missing there.)

Flexible consistency

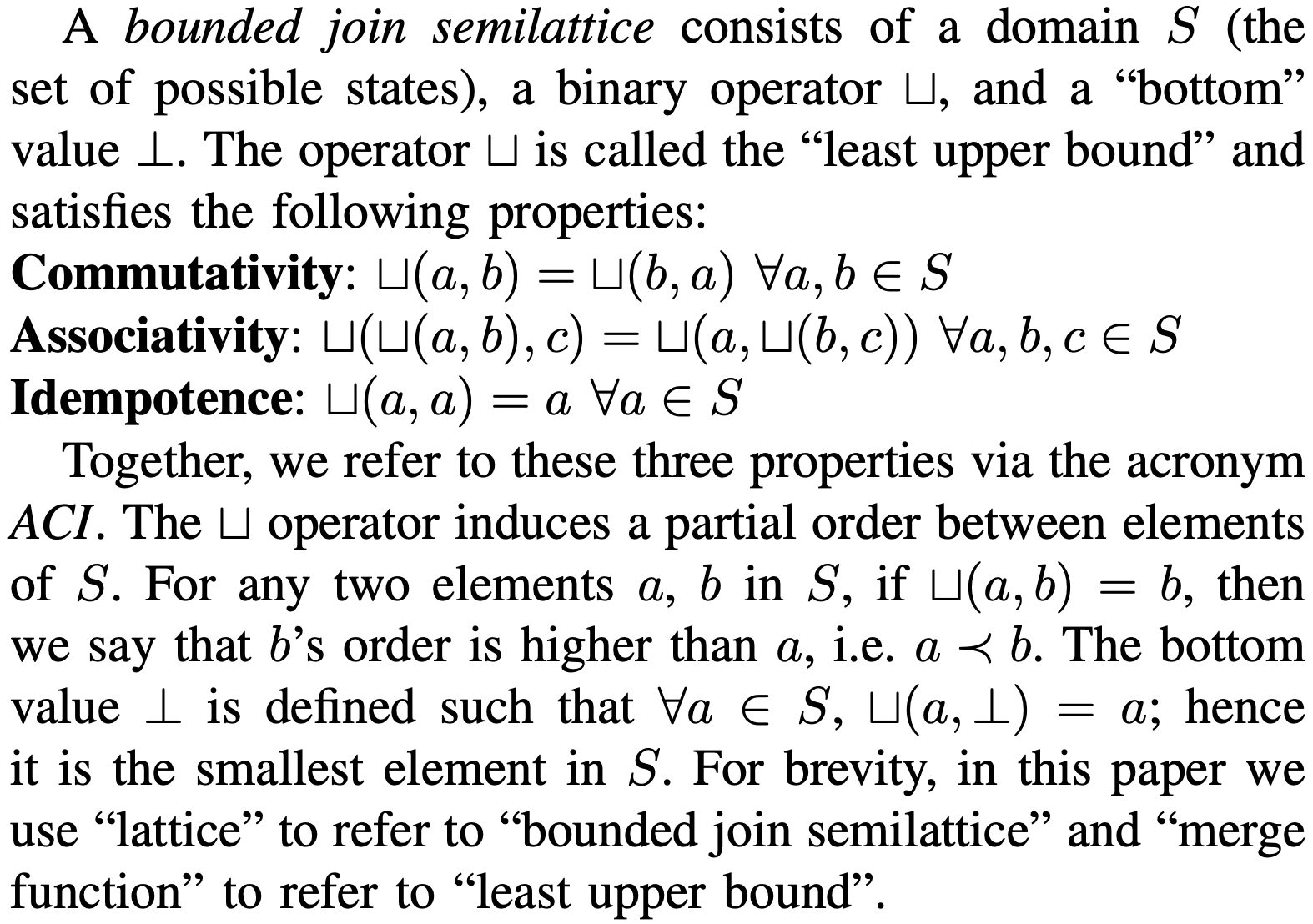

Anna is built using C++ and makes use of C++'s template structures to offer a flexible hierarchy of lattice types. The private state of each worker in Anna is represented as a lattice-valued map lattice (MapLattice) template, parameterized by the types of its keys and values. MapLattice is a hash map whose keys are immutable and of any type, and values are from some lattice type (ValueLattice). Users' PUT requests are merged into the MapLattice. The merge operator of MapLattice takes the union of the key sets of both input hash maps. If a key appears in both inputs, then the values associated with the key are merged using the ValueLattice's merge function.For multikey, Anna is only able to provide read-committed consistency. Read committed prevents both dirty writes and dirty reads. In order to prevent dirty writes in a weakly consistent system, it is sufficient to ensure that writes to each key exhibit a total ordering with respect to transactions. Although different replicas may receive writes in different orders, the final state of the KVS should be equivalent to the result of a serial execution of transaction writes. This can be achieved by appending a timestamp to each transaction (and to each write within the transaction) and applying a "larger timestamp wins" conflict resolution policy at each replica. This monotonically increasing timestamp can be easily implemented using a MaxIntLattice. Dirty reads are prevented by buffering a transaction's writes at the client proxy until commit time, which ensures that uncommitted writes never appear in the KVS.

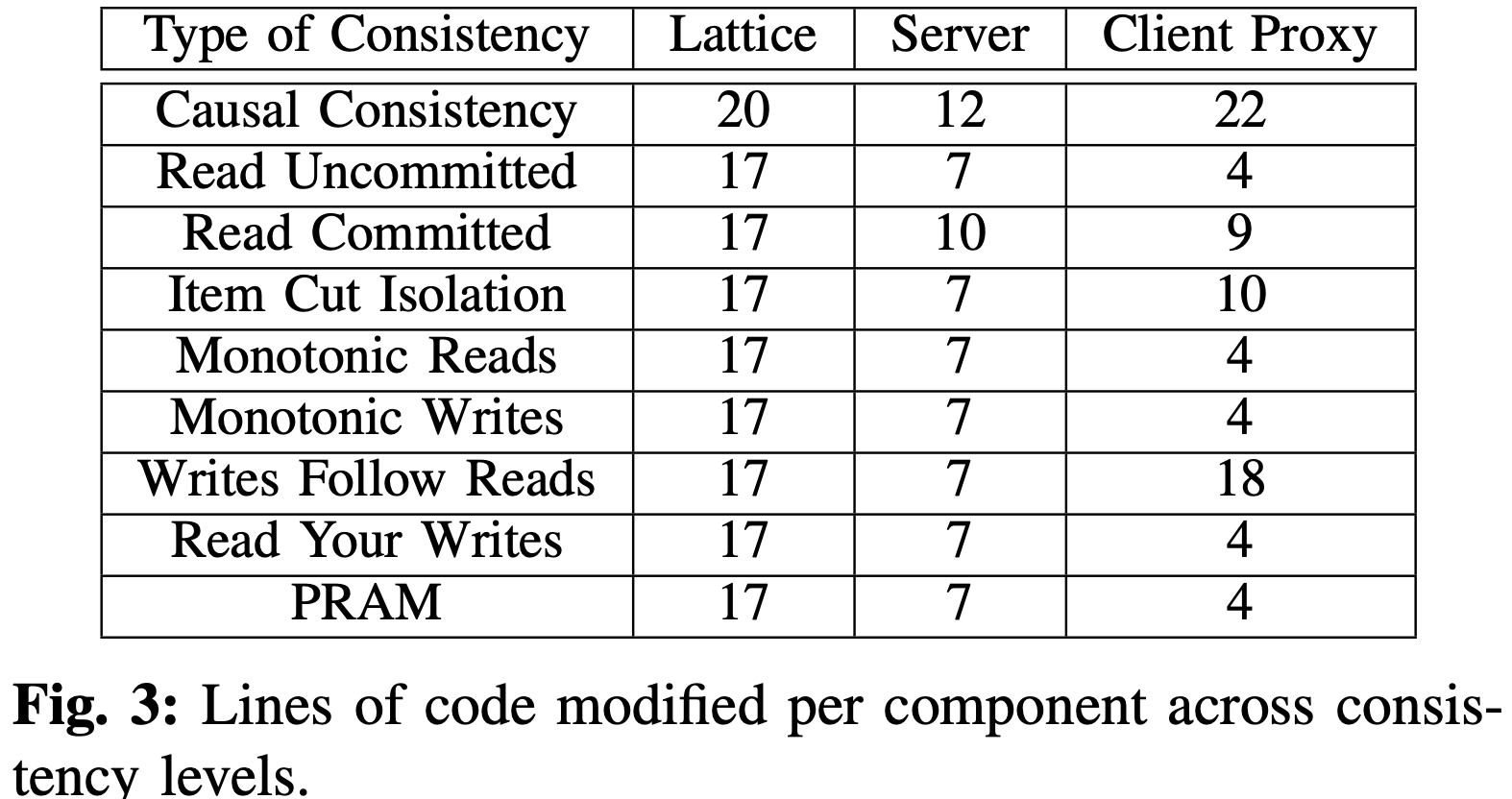

Anna shows that the full range of coordination-free consistency models can be elegantly implemented using the distributed lattices framework in a compositional fashion. The resulting consistency code is small and modular.

Implementation

The codebase (including the lattice library, all the consistency levels, the server code, and client proxy code) amounts to about 2000 lines of C++ on top of commonly-used libraries including ZeroMQ and Google Protocol Buffers. To store the private KVS replica at each actor, the unordered map from the C++ standard library is used. Interactor multicast is achieved via the pub-sub communication mechanism of ZeroMQ, a high-performance asynchronous messaging library.

Anna uses consistent hashing to partition and replicate key-value pairs across actors. Following the Dynamo design, Anna applies a CRC32 hash on the id to assign the actor to a position on the hash ring and applies the same hash function to a key in order to determine the actors responsible for storing the key. Each key-value pair is replicated N-1 times on the clockwise successor actors, where N is the user-provided replication factor. Anna handles actor joining and departure in a similar fashion as Dynamo.

Anna actors support three operations: GET, PUT, and DELETE. GET retrieves the value of a key from a (single) replica. Coordination-free consistency does not require a quorum, so GET need not merge values from more than one replica. The GET response may be stale; the staleness is bounded by the multicast period, which is an adjustable parameter to balance performance and staleness. PUT persists the merge of a new value of a key with a (single) replica using the lattice merge logic. DELETE is implemented as a special PUT request with an empty value field. Actors free the heap memory of a key/value pair only when the DELETE's timestamp dominates the key's current timestamp. To completely free the memory for a key, each actor maintains a vector clock (associated with each key-value pair) that keeps track of the latest-heard timestamps of all actors, which is kept up-to-date during multicast.

Client proxies interact with actors to serve user requests. In addition to GET, PUT, and DELETE, proxies expose two special operations to the users for consistency levels that involve transactions: BEGIN TRANSACTION and END TRANSACTION. All operations that fall in between a pair of special operations belong to a single transaction. Transaction ID is uniquely generated by concatenating a unique actor sequence number with a local timestamp.

Evaluation

The paper performs comparison against popular KVSs designed for different scale points (Redis for single-node settings, and Apache Cassandra for geo-replicated settings), and find that Anna's performance is competitive at both scales while offering a wider range of consistency levels.

In Figure 4, they compare the throughput of Anna against the TBB hash map, Masstree, and the unsynchronized KVS (labeled as "Ideal") on a single multi-core machine. Both the TBB hashmap and Masstree fail to exploit parallelism on this workload because most requests perform an update against the same key, and concurrent updates to this key have to be serialized. Furthermore, both the TBB hashmap and Masstree must employ synchronization to prevent a single key-value pair from concurrent modification by multiple threads with an overhead proportional to the number of contending threads. Synchronization cost manifests as cache coherence overhead on multi-core hardware.

Figure 5a shows that TBB and Masstree spend 92% - 95% of the CPU time on atomic instructions under high contention, and only 4% - 7% of the CPU time is devoted to request handling. As a result, the TBB hash map and Masstree perform 50× slower than Anna (rep = 1) and 700× slower than Anna (full replication). Anna also beats "ideal" hogwild-style completely inconsistent C++ hashtable since "ideal" suffers from cache coherence overhead resulting from threads modifying the same memory addresses (the contended key-value pairs). In contrast, threads in Anna perform updates against their local state in parallel without synchronizing, and periodically exchange state via multicast. Coordination-freeness keeps every actor in its swimlane doing useful work: in high contention workloads we see 90% of Anna's cycles going toward serving requests.

While Anna can exploit multi-core parallelism, Redis is a single- threaded KVS system, and cannot exploit any parallelism whatsoever. So they additionally compare Anna against Redis Cluster, which knits together multiple independent Redis instances, each of which contain a shard of the KVS.

For performance across consistency levels, for causal consistency, they observe a slight degradation in throughput as Anna has to maintain the vector clock associated with each key-value pair, requiring more sophisticated lattice merge logic. The throughput improvement gained from client-side buffering and caching is highlighted in green. Note that although other consistency levels does not require client-side buffering or caching, it is possible to use these techniques to improve throughput.

Followup work to Anna

Anna is available as opensource here.One lesson learned from Figure 4 is that for systems that support multi-master replication, having a high replication factor under low contention workloads can hurt performance. So a best of both words approach is dynamically monitoring the data's contention level and selectively replicating the highly contented keys across threads. This autoscaling angle is explored in "Autoscaling Tiered Cloud Storage in Anna".

The serverless angle, leveraging on Anna, is explored in "Cloudburst: Stateful Functions-as-a-Service".

A forward looking position paper on "New Directions in Cloud Programming" appeared in CIDR 2021.

Discussion

One problem I have with Anna is that fault-tolerance is not assured. When do we return an "ACK" to the client about its update, be it single key or transaction update? Anna doesn't check for replication. I guess it may be OK to send this at the end of the epoch (or maybe wait two epochs for good measure) when the update is sent to the other nodes for replication. It is unsafe to ACK the client before that, because this node can crash and all data within the last multicast period is lost.I am amazed by how Anna is able to be this competitive with so little code (2K lines in total for the initial version of Anna used for evaluations). This is certainly a testament to the elegance of the CALM theory and the principled design of Anna. But I also wonder how much of the effect is due to neglecting to deal with bunch of tedious unpleasant tasks the real KVS have to deal with as they need to serve a large segment and address more issues.

As an interesting parallel, I want to note that FoundationDB also used a single thread per core, but it was for deterministic debuggability.

UPDATE 5/2/22: See the 5/2/22 comment below for answers to some questions I raised in the summary.

Comments

On a technical note, you assert that In order for merge-at-sender to work, this must be assuming a request is sent to only one master. Otherwise, a receiver won't be able to merge cumulative result with its cumulative results without knowing individual operations it is missing there. As it turns out, that's not true. Since merge is associative/commutative, each "master" can merge updates in any batches they like without changing semantics, and since it's also idempotent it's OK to get cumulative merges from multiple nodes and merge them at the receiver even if they have overlaps.

Example: Node 1 creates merge(X,merge(Y,Z)) and Node 2 creates merge(Y, merge(Z,A)). Suppose Node 3 contains merge(X,merge(A,B)) already, but receives those two opaque messages and merges them in: merge(merge(X,merge(A,B)),(merge(X,merge(Y,Z)),merge(Y, merge(Z,A)))). Thanks to ACI that's equivalent to merge(X,Y,Z,A,B) in any order/nesting/redundancy that could arise.

WRT fault tolerance, it's optional but also so easy you can just do it via the client or a proxy. E.g. the Anna Python client has a simple optional put_all that fetches the addresses of replica nodes for a key from the hashring, puts to all explicitly, and waits for acks from all. You can of course write a similar API that puts to or waits for fewer nodes. This could be baked into the system API as well, we just kept it minimal and did it in the client code.

For more interesting CALM/coordination-free fault tolerance, there's the AFT paper from Eurosys '20. We chose to demo that over S3 and DynamoDB to illustrate that it's not Anna-specific -- any storage backend will do.