Scalog: Seamless Reconfiguration and Total Order in a Scalable Shared Log

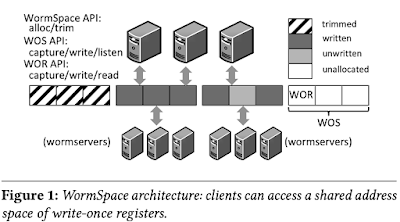

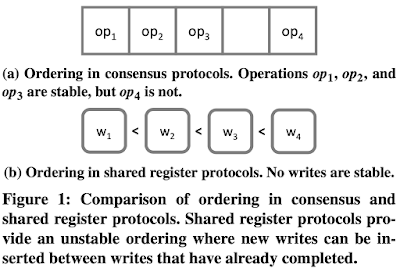

This paper appeared in NSDI'20 and is authored by Cong Ding, David Chu, Evan Zhao, Xiang Li, Lorenzo Alvisi, and Robbert van Renesse. The video presentation of the paper is really nice and gives a good overview of the paper. Here is a video presentation of our discussion of the paper, if that is your learning style, or whatever. (If you would like to participate in our paper discussions, you can join our Slack channel.)

Background

The problem considered is building a fault-tolerant, scalable shared log. One way to do this is to use a Paxos box both to order the records and to commit their replication to the corresponding shards. But as the number of clients increases, the single Paxos box becomes the bottleneck, so this approach does not scale. Corfu had the nice idea to divorce ordering from replication. The ordering is done by the Paxos box, i.e., the sequencer, which assigns unique sequence numbers to the data. Replication is then offloaded to the clients, which contact the storage