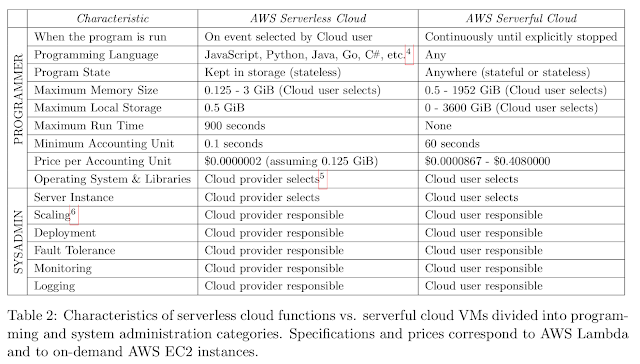

Paper summary. Cloud programming simplified: A Berkeley view on Serverless Computing

This position paper by UC Berkeley RISE lab is about serverless computing, its shortcomings, and its potential. It is easy reading, and is still useful even if you have a pretty good understanding about serverless computing due to some insights and forecasts in the paper. As you will read below, the paper provides a very strong endorsement for serverless computing. Instead of explaining the paper in my terms, I quote some of my highlights from the paper below, and at the end, in the MAD questions section, I discuss some of my thoughts on serverless computing. Introduction We believe the main reason for the success of low-level virtual machines was that in the early days of cloud computing users wanted to recreate the same computing environment in the cloud that they had on their local computers to simplify porting their workloads to the cloud. To set up your own environment in cloud (using virtual machines), you need to address these 8 issues. Redundancy for availability,