Debugging designs with TLA+

This post talks about why you should model your systems and exhaustively test these models/designs with the TLA+ framework. In the first part, I will discuss why modeling your designs is important and beneficial, and in the second part I will explain why TLA+ is a very suitable framework for modeling, especially for distributed and concurrent systems.

There are many corner cases because there are many parameters, and these do interfere in unanticipated ways with each other. The corner cases violate your seemingly reasonable implicit assumptions about the system components and environment, e.g.,"1-hop is faster than 2-hops", "0-hop is faster than 1-hop", and "processes work with the same rate". There are abundant race conditions because today (with the rise of SOA, cloud, and microservices) all systems are distributed systems. Code that is supposedly "atomic block of execution" fails due to other processes executing concurrently. Finally, faults happen and their effects are almost always underestimated pre-deployment. Faults take your system to unanticipated states, and from there on with the interleaving of recovery actions with normal system actions, the system may be thrown to even more unanticipated states.

In large software systems, which are inevitably distributed systems, there are many unknown-unknowns and an infinite number of highly-improbable ways things can go wrong. Human brain and reasoning cannot scale to handle all these possibilities. To alleviate these problems, the industry developed tools for better observability and even testing in production for improving availability. These tools are very important and indispensable. But by the time you figure out some inherent problems with your design it may be too hard and expensive to fix things. What you thought would be the last 10% of the project ends up taking 90% of your time at production and operations.

If you model your designs first and exhaustively test and debug these models for correctness against corner cases, failed assumptions, concurrency, and failures, you can catch errors at the design time and fix them before they develop into problems and become costly to fix.

TLA+ uses basic math to model and reason about algorithms: practical logic, set theory, and temporal logic are used for specifying systems. Best of all, the framework integrates a model checker that exhaustively tests your models to the face of corner cases, failed assumptions, concurrency, and failures. The model checker tries all executions possible for your model and tells you for which executions, your invariants and system guarantees break.

Invariant-based reasoning

TLA+ framework promotes invariant-based reasoning to prevent the problems that arise from operational reasoning. In operational reasoning, you start with a "happy path", and then you try to figure out "what can go wrong?" and how to prevent them. Of course, you always fall short in that enumeration of problem scenarios and overlook corner cases, race conditions, and cascading failures. In contrast, invariant-based reasoning focuses on "what needs to go right?" and how to ensure this properties as invariants of your system at all times. Invariant-based reasoning takes a principled state-based rather than operation/execution-based view of your system.

To attain invariant-based reasoning, we specify safety and liveness properties for our models. Safety properties specify "what the system is allowed to do". For example, at all times, all committed data is present and correct. Liveness properties specify "what the system should eventually do". For example, whenever the system receives a request, it must eventually respond to that request. In other words, safety properties are concerned with "nothing bad happens", and liveness properties with "something good eventually happens".

Modeling with TLA+

The TLA+ framework supports you in building a model and figuring out its invariant properties in two major ways. Firstly, the math-based formal language helps you achieve precision while still working with high-level declarative statements. Secondly, the integrated model checker exhaustively debugs your model to the face of concurrency and failures, and produces counterexamples for which your candidate invariants fail. (After years of working with TLA+, I am still surprised about the counterexamples the model checkers spit out for my models: It is very easy to overlook some scenarios, but the model checker sets you straight.) You address these problems by improving your model or sometimes by relaxing your candidate invariants, and after many iterations converge to an exhaustively debugged model which guarantees the invariants.

Building a TLA+ model is beneficial even for systems that are already implemented and running. Through building the model, you learn about your system better, and figure out some latent failure modes and correct them before they occur in production.

Finally, maintaining a TLA+ model of your system provides important benefits for continuous development. While software systems need to be extended with new features frequently, these extensions may interfere in unanticipated way with the system and lead to downtimes. With the TLA+ model at hand, you can first add these features to your model, and catch/debug the problems at the design-level using the model-checker. This way you resolve potential issues before they even become problems.

TLA+ is practical

Since using TLA+ actually saves time for building large software systems, TLA+ modeling is adopted as a practice by many software companies.

I am on sabbatical at Cosmos DB, Microsoft globally distributed cloud-native database. The team has been using TLA+ to model the replication and global distribution protocols and exhaustively tests the designs for correctness against failures. We have recently published the customer-facing part of the model which precisely defines the 5 consistency levels offered by Cosmos DB.

Amazon has also used TLA+ modeling for some of their AWS offerings and has written a nice experience report on this. There are also reports of using TLA+ for modeling hardware systems as well.

For the last 4 years, I have been incorporating TLA+ in my distributed systems classes. TLA+ enables students to learn about concurrency and invariant-based reasoning and it provides them hands-on experience with distributed protocols. I also use TLA+ exhaustively in my research on new distributed algorithms.

In my experience, it is possible to pick up TLA+ up in a couple weeks. This is firstly because TLA+ adopts a very simple state-machine approach to model systems. A system consists of: (1) A set of variables which define the state of the system, and (2) A finite set of assignments/actions that serves to transition the system from one state to another.

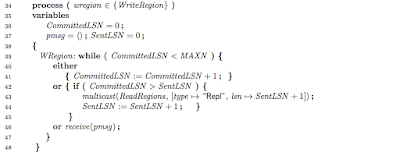

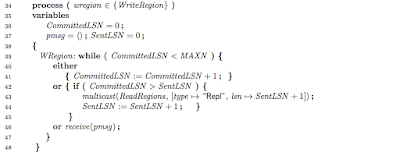

Furthermore, PlusCal provides syntactic a sugar for the TLA+, which has a tendency to grow long (due to its low-level state-transition centric syntax) and look cryptic for some people. PlusCal is a pseudocode for writing algorithms at a higher-level of abstraction, and it is translated to the underlying TLA+ specifications for model checking. To give you some idea about the PlusCal, here is an example of a PlusCal code for a database replica process. While this is a straightforward code, you can see a nondeterministic choice construct "either or" in action. The model checker will exhaustively test all possible combinations of these "either or" actions and check if a certain sequence would break one of your safety and liveness specifications.

My blog includes many examples of TLA+/PlusCal modeling of distributed algorithms/systems.

LearnTLA provides a user-friendly introduction to TLA+/PlusCal.

Lamport's site includes TLA+/PlusCal resources (videos/books/examples) and links to download the toolkit.

Modeling is important

If you have worked on a large software system, you know that they are prone to corner cases, failed assumptions, race conditions, and cascading faults.There are many corner cases because there are many parameters, and these do interfere in unanticipated ways with each other. The corner cases violate your seemingly reasonable implicit assumptions about the system components and environment, e.g.,"1-hop is faster than 2-hops", "0-hop is faster than 1-hop", and "processes work with the same rate". There are abundant race conditions because today (with the rise of SOA, cloud, and microservices) all systems are distributed systems. Code that is supposedly "atomic block of execution" fails due to other processes executing concurrently. Finally, faults happen and their effects are almost always underestimated pre-deployment. Faults take your system to unanticipated states, and from there on with the interleaving of recovery actions with normal system actions, the system may be thrown to even more unanticipated states.

In large software systems, which are inevitably distributed systems, there are many unknown-unknowns and an infinite number of highly-improbable ways things can go wrong. Human brain and reasoning cannot scale to handle all these possibilities. To alleviate these problems, the industry developed tools for better observability and even testing in production for improving availability. These tools are very important and indispensable. But by the time you figure out some inherent problems with your design it may be too hard and expensive to fix things. What you thought would be the last 10% of the project ends up taking 90% of your time at production and operations.

If you model your designs first and exhaustively test and debug these models for correctness against corner cases, failed assumptions, concurrency, and failures, you can catch errors at the design time and fix them before they develop into problems and become costly to fix.

- Modeling first does not extend your development time, on the contrary it saves you time by reducing futile development attempts. Embarking on development with a flawed design almost always ensures that the implementation is flawed. While having a precise and correct model at hand does not guarantee that your implementation of the model is correct, it helps you avoid the big/intricate problems and also provides a good reference for testing your implementation against.

- Constructing a precise model of your system gives you clarity of thinking and supports your development immensely. By modeling you discover about the inherent complexities of the problem; that helps you focus your attention and ignore accidental/byproduct complexities.

- The model also helps you to communicate precisely with your team and others as you avoid the ambiguity of natural language and the hand-waving and generalizations involved.

- Finally with the model at hand, you also have a chance to gradually introduce design decisions, and see alternative ways to implement the design.

TLA+ is great for modeling

TLA+ is a formal language for describing and reasoning about distributed and concurrent systems. It is developed by Dr. Leslie Lamport, Turing Award winner 2013. Lamport is a very important figure in distributed systems due to his logical clocks work and Paxos work among many others. For the last decade, he is very involved with improving the TLA+ framework to help make distributed systems more manageable.TLA+ uses basic math to model and reason about algorithms: practical logic, set theory, and temporal logic are used for specifying systems. Best of all, the framework integrates a model checker that exhaustively tests your models to the face of corner cases, failed assumptions, concurrency, and failures. The model checker tries all executions possible for your model and tells you for which executions, your invariants and system guarantees break.

Invariant-based reasoning

TLA+ framework promotes invariant-based reasoning to prevent the problems that arise from operational reasoning. In operational reasoning, you start with a "happy path", and then you try to figure out "what can go wrong?" and how to prevent them. Of course, you always fall short in that enumeration of problem scenarios and overlook corner cases, race conditions, and cascading failures. In contrast, invariant-based reasoning focuses on "what needs to go right?" and how to ensure this properties as invariants of your system at all times. Invariant-based reasoning takes a principled state-based rather than operation/execution-based view of your system.

To attain invariant-based reasoning, we specify safety and liveness properties for our models. Safety properties specify "what the system is allowed to do". For example, at all times, all committed data is present and correct. Liveness properties specify "what the system should eventually do". For example, whenever the system receives a request, it must eventually respond to that request. In other words, safety properties are concerned with "nothing bad happens", and liveness properties with "something good eventually happens".

Modeling with TLA+

The TLA+ framework supports you in building a model and figuring out its invariant properties in two major ways. Firstly, the math-based formal language helps you achieve precision while still working with high-level declarative statements. Secondly, the integrated model checker exhaustively debugs your model to the face of concurrency and failures, and produces counterexamples for which your candidate invariants fail. (After years of working with TLA+, I am still surprised about the counterexamples the model checkers spit out for my models: It is very easy to overlook some scenarios, but the model checker sets you straight.) You address these problems by improving your model or sometimes by relaxing your candidate invariants, and after many iterations converge to an exhaustively debugged model which guarantees the invariants.

Building a TLA+ model is beneficial even for systems that are already implemented and running. Through building the model, you learn about your system better, and figure out some latent failure modes and correct them before they occur in production.

Finally, maintaining a TLA+ model of your system provides important benefits for continuous development. While software systems need to be extended with new features frequently, these extensions may interfere in unanticipated way with the system and lead to downtimes. With the TLA+ model at hand, you can first add these features to your model, and catch/debug the problems at the design-level using the model-checker. This way you resolve potential issues before they even become problems.

TLA+ is practical

Since using TLA+ actually saves time for building large software systems, TLA+ modeling is adopted as a practice by many software companies.

I am on sabbatical at Cosmos DB, Microsoft globally distributed cloud-native database. The team has been using TLA+ to model the replication and global distribution protocols and exhaustively tests the designs for correctness against failures. We have recently published the customer-facing part of the model which precisely defines the 5 consistency levels offered by Cosmos DB.

Amazon has also used TLA+ modeling for some of their AWS offerings and has written a nice experience report on this. There are also reports of using TLA+ for modeling hardware systems as well.

For the last 4 years, I have been incorporating TLA+ in my distributed systems classes. TLA+ enables students to learn about concurrency and invariant-based reasoning and it provides them hands-on experience with distributed protocols. I also use TLA+ exhaustively in my research on new distributed algorithms.

In my experience, it is possible to pick up TLA+ up in a couple weeks. This is firstly because TLA+ adopts a very simple state-machine approach to model systems. A system consists of: (1) A set of variables which define the state of the system, and (2) A finite set of assignments/actions that serves to transition the system from one state to another.

Furthermore, PlusCal provides syntactic a sugar for the TLA+, which has a tendency to grow long (due to its low-level state-transition centric syntax) and look cryptic for some people. PlusCal is a pseudocode for writing algorithms at a higher-level of abstraction, and it is translated to the underlying TLA+ specifications for model checking. To give you some idea about the PlusCal, here is an example of a PlusCal code for a database replica process. While this is a straightforward code, you can see a nondeterministic choice construct "either or" in action. The model checker will exhaustively test all possible combinations of these "either or" actions and check if a certain sequence would break one of your safety and liveness specifications.

To learn more

There is a very active TLA+ forum at Google Groups. Leslie Lamport chimes in several threads.My blog includes many examples of TLA+/PlusCal modeling of distributed algorithms/systems.

LearnTLA provides a user-friendly introduction to TLA+/PlusCal.

Lamport's site includes TLA+/PlusCal resources (videos/books/examples) and links to download the toolkit.

Comments