Serializable Isolation for Snapshot Databases

This paper (SIGMOD '08) proposes a lightweight runtime technique to make Snapshot Isolation (SI) serializable without falling back to locking. The key idea behind Serializable SI (SSI) is to detect potentially dangerous (write-skew) executions at runtime and abort one of the transactions to guarantee serializability (SER).

The goal is to offer the strong guarantees of SER without sacrificing SI's high performance and non-blocking reads. But would it make sense to implement SER by layering on MVCC SI instead of implementing it directly? Do you think an SI-based implementation would be more performant than native 2PL-based SER implementations? What about compared to OCC-based SER? The evaluation section gives some answers.

The problem and insight

Let's back up.

Write-skew is the canonical anomaly under SI. And the canonical example for write-skew is the "doctors on-call" scenario. Consider two doctors removing themselves from a duty roster. Each transaction checks that at least one other doctor remains on duty and proceeds to update its own status. Under SI, each transaction sees the old snapshot where the other doctor is still on duty. Both commit. The resulting state violates the application’s invariant, even though each transaction was locally consistent. Compare this to SER, which ensures that integrity constraints are maintained even if those constraints are not explicitly declared to the DBMS. (Another famous write-skew example is reading both the savings and checking account balances and then withdrawing from one. Yet another example is graph coloring: reading a neighbor's color and switching your own in response.)
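To make the doctors example concrete, here is a minimal sketch of a toy SI store in Python. Everything in it (the `ToySI` class, the `go_off_call` helper, the doctor names) is made up for illustration and is not from the paper. The only thing it models is that SI reads from a begin-time snapshot and checks write-write conflicts at commit.

```python
# Toy snapshot-isolation store (hypothetical, for illustration only).
# Each transaction reads from a private snapshot taken at begin and
# commits unless another transaction committed a write to the same key
# in the meantime (first-committer-wins).
class ToySI:
    def __init__(self, data):
        self.data = dict(data)
        self.version = {k: 0 for k in data}  # commit counter per key

    def begin(self):
        return {"snapshot": dict(self.data),
                "seen": dict(self.version),
                "writes": {}}

    def read(self, txn, key):
        return txn["writes"].get(key, txn["snapshot"][key])

    def write(self, txn, key, value):
        txn["writes"][key] = value

    def commit(self, txn):
        # SI only looks at write-write conflicts; reads are never checked.
        for key in txn["writes"]:
            if self.version[key] != txn["seen"][key]:
                return False
        for key, value in txn["writes"].items():
            self.data[key] = value
            self.version[key] += 1
        return True


def go_off_call(db, txn, me):
    on_call = [d for d in ("alice", "bob") if db.read(txn, d)]
    if len(on_call) >= 2:  # someone else remains on duty
        db.write(txn, me, False)


db = ToySI({"alice": True, "bob": True})
t1, t2 = db.begin(), db.begin()  # concurrent: both see the same snapshot
go_off_call(db, t1, "alice")
go_off_call(db, t2, "bob")
committed = (db.commit(t1), db.commit(t2))
doctors_on_call = sum(db.data.values())
print(committed, doctors_on_call)  # (True, True) 0
```

Both transactions commit because their write sets are disjoint, and the roster ends up empty.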

A naive fix for preventing write-skew would be to track the read set of a transaction T1 and, at commit time, check whether any item read by T1 was overwritten by a concurrent writer. If so, we abort T1. This eliminates outgoing rw-dependencies and thus prevents write-skew cycles. It works, but it's overkill: you end up aborting transactions even when no cycle would form. For example, in the execution "b1 r1(x) w2(x) w1(y) c1 c2", T1 would be aborted because its read r1(x) is overwritten by T2's w2(x). Yet this execution can be serialized as T1 followed by T2, since the write sets do not conflict under SI.

The paper proposes a smarter fix: only abort if a transaction has both an inConflict and an outConflict edge with other transactions. That is, it sits in the middle of two concurrent rw-dependencies (the paper calls this a pivot in a dangerous structure). Prior work by the authors (Fekete et al. 2005) proves that all SI anomalies contain such a pivot. So this check would suffice.
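Here is a rough Python sketch contrasting the two rules on the execution from the naive-fix discussion. The helper names (`rw_edges`, `naive_victims`, `pivots`) are mine, not the paper's, and the sketch makes a big simplifying assumption: every transaction in the history is treated as concurrent with every other one, so any read of an item that another transaction writes counts as an rw-dependency.

```python
import re
from collections import defaultdict


def rw_edges(history):
    """Return the set of (reader, writer) rw-dependencies in a history
    string, assuming all transactions in it overlap (a simplification)."""
    reads, writes = defaultdict(set), defaultdict(set)
    for op, txn, item in re.findall(r"([rw])(\d+)\((\w+)\)", history):
        (reads if op == "r" else writes)[item].add(int(txn))
    return {(r, w)
            for item in reads
            for r in reads[item]
            for w in writes[item]
            if r != w}


def naive_victims(edges):
    # Naive rule: any transaction with an outgoing rw-edge is aborted.
    return {reader for reader, _ in edges}


def pivots(edges):
    # Pivot rule: only transactions with both an incoming and an
    # outgoing rw-edge are candidates for abort.
    outgoing = {reader for reader, _ in edges}
    incoming = {writer for _, writer in edges}
    return outgoing & incoming


edges = rw_edges("b1 r1(x) w2(x) w1(y) c1 c2")
print(naive_victims(edges))  # {1}
print(pivots(edges))         # set()

skew = rw_edges("r1(x) r1(y) r2(x) r2(y) w1(x) w2(y)")
print(pivots(skew))          # {1, 2}
```

The naive rule aborts T1 in the first history, while the pivot rule lets it through. In the write-skew history both transactions are pivots, so one of them has to go.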

The approach still over-approximates somewhat: having both in and out conflicts doesn’t always imply a cycle. See Fig 9 for an example. Although T0 has both inConflict and outConflict, it is possible to serialize these transactions in the order TN, T0, T1. The paper doesn't quantify the false positive rate or explore pathological workloads.

The paper says full serialization graph cycle detection would be too expensive, and this is the compromise. This is still a tighter and more efficient condition than the naive approach. The Ferro-Yabandeh EuroSys '12 paper uses something close to the naive approach. Same with naive OCC-based SER implementations. They abort based on a single outConflict edge.

Implementation and evaluation

The implementation tracks two flags per transaction: inConflict and outConflict. It also adds a new lock mode, SIREAD, to record reads. Reads acquire SIREAD locks; writes acquire WRITE locks. When a read at T1 finds a newer version of the item, written by a concurrent T2 and therefore not visible in T1's snapshot, we set T1.outConflict and T2.inConflict. This is called an rw-dependency (a.k.a. antidependency) from T1 to T2. When a write at T1 detects an existing SIREAD lock held by T2, we set T1.inConflict and T2.outConflict. See the pseudocode in Figures 5-8 for how this is implemented.
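The flag bookkeeping can be sketched in a few lines of Python. This is my own simplified illustration, not the pseudocode from Figures 5-8: the `Txn` and `ConflictTracker` names are hypothetical, all tracked transactions are assumed to be concurrent, and commit handling, lock release, and the actual abort are left out.

```python
from collections import defaultdict


class Txn:
    def __init__(self, name):
        self.name = name
        self.in_conflict = False   # a concurrent txn has an rw-edge into us
        self.out_conflict = False  # we have an rw-edge out to a concurrent txn

    def is_pivot(self):
        return self.in_conflict and self.out_conflict


class ConflictTracker:
    """Records SIREAD locks and writes, and sets flags on rw-dependencies.
    Assumes every transaction it sees overlaps with every other one."""

    def __init__(self):
        self.siread = defaultdict(set)   # item -> txns holding SIREAD
        self.writers = defaultdict(set)  # item -> txns that wrote a new version

    def _rw_edge(self, reader, writer):
        reader.out_conflict = True
        writer.in_conflict = True

    def read(self, txn, item):
        self.siread[item].add(txn)
        # A newer version by a concurrent writer is invisible to our snapshot.
        for writer in self.writers[item]:
            if writer is not txn:
                self._rw_edge(txn, writer)

    def write(self, txn, item):
        self.writers[item].add(txn)
        # Anyone holding SIREAD on this item read the version we replace.
        for reader in self.siread[item]:
            if reader is not txn:
                self._rw_edge(reader, txn)


tracker = ConflictTracker()
t1, t2 = Txn("T1"), Txn("T2")
for t in (t1, t2):           # both read x and y
    tracker.read(t, "x")
    tracker.read(t, "y")
tracker.write(t1, "x")       # T2 -> T1 rw-dependency
tracker.write(t2, "y")       # T1 -> T2 rw-dependency
print(t1.is_pivot(), t2.is_pivot())  # True True
```

On the write-skew pattern both transactions end up with both flags set, which is exactly the condition the abort rule looks for.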

When both flags are set for any transaction, one transaction in the conflict chain is aborted. The implementation prefers to abort the pivot (the transaction with both in and out conflicts) unless it has already committed, in which case an adjacent transaction is aborted instead. If there are two pivots in the cycle, whichever is detected first gets aborted. This choice is sound and gives room for policy tuning (e.g., prefer aborting younger transactions).
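A minimal sketch of this victim selection, again in Python and again with hypothetical names (`Txn`, `record_rw_edge`) that are mine rather than the paper's. It assumes the edge is discovered by an operation of a still-active transaction, so at least one endpoint can be aborted.

```python
from dataclasses import dataclass


@dataclass
class Txn:
    name: str
    in_conflict: bool = False
    out_conflict: bool = False
    committed: bool = False


def record_rw_edge(reader, writer):
    """Record an rw-dependency reader -> writer and return the transaction
    to abort, or None if no pivot has formed."""
    reader.out_conflict = True
    writer.in_conflict = True
    for candidate, other in ((reader, writer), (writer, reader)):
        if candidate.in_conflict and candidate.out_conflict:
            # Prefer the pivot; if it already committed, abort its neighbor.
            return other if candidate.committed else candidate
    return None


# Pivot T0 already committed: the newly arriving reader TN is the victim.
t0 = Txn("T0", out_conflict=True, committed=True)
tn = Txn("TN")
print(record_rw_edge(tn, t0).name)  # TN

# Pivot still active: the pivot itself is the victim.
a = Txn("A", in_conflict=True)
b = Txn("B")
print(record_rw_edge(a, b).name)    # A

# A single edge with no pivot: nobody is aborted.
print(record_rw_edge(Txn("C"), Txn("D")))  # None
```

A policy such as "prefer aborting the younger transaction" would slot into the branch that picks between `candidate` and `other`.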

Note that all of this is layered on top of SI. Readers never block writers. Writers never block readers. The only cost is the extra bookkeeping to track conflicts.

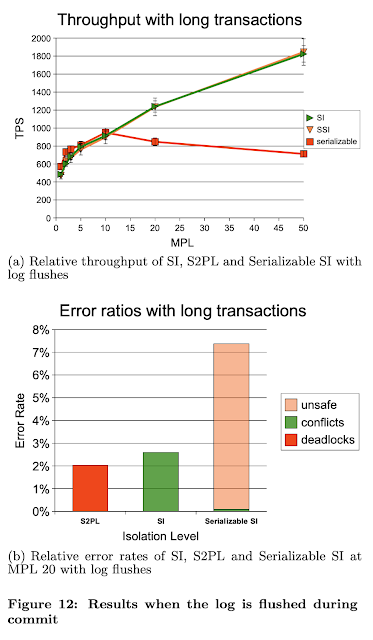

The authors implemented SSI over Berkeley DB (which provides SI through MVCC) with minimal changes, around 700 lines of modified code out of a total of over 200,000 lines of code in Berkeley DB. The experiments with the SmallBank benchmark show that the performance is close to plain SI and significantly better than 2PL under contention. Note that the S2PL implementation of Berkeley DB does not leverage MVCC and locks at page level.

The paper does not contrast SSI with an OCC implementation of SER. But it argues that naive OCC implementations abort on any rw-conflict, whereas SSI waits until both in and out conflicts are present, which would result in fewer false positives and better throughput.

My take

SI is attractive because it avoids blocking: readers don't block writers and vice versa. Maybe the takeaway is this: Stick with SI. But if you really must go serializable, consider layering SSI on top of SI instead of using 2PL- or OCC-based SER implementations that don't leverage MVCC.

But, still, there are many caveats to SSI as presented in this paper: phantoms are not addressed, false positives remain, B-tree level conflicts inflate aborts, and adapting this to distributed systems is non-trivial.

This paper is a research prototype after all. A VLDB '12 paper by Dan Ports followed this algorithm to provide a PostgreSQL implementation that adds integration with real-world features like replication and crash recovery, two-phase commit (tupac), subtransactions and savepoints, and memory bounding via transaction summarization. It also handled phantoms by leveraging SIREAD locks on index ranges to detect predicate read/write conflicts.

Caveats and open questions

The paper punts on phantoms. It says that Berkeley DB uses page-level locking, so predicate-based anomalies don’t occur. But for engines with row-level locking, it suggests that SSI would need to acquire SIREAD locks on index ranges or use multigranularity locking.

The design assumes a single-node system. In a distributed DB, you would need to propagate SIREAD metadata and conflict flags across partitions. Detecting cross-partition dependencies and coordinating pivot aborts would be hard. The paper does not talk about implementation in distributed databases.

A known artifact in the Berkeley DB implementation is page-level locking. Updates to shared pages, like the B-tree root during a split, look like write-write conflicts across unrelated transactions. These inflate false positives and aborts under SSI.

My troubles with Figure 4

I mean I have to complain about this (otherwise it won't be a blog post from Murat). Figure 4 was terribly confusing for me. I had a hard time figuring out why these two scenarios are not serializable. It turns out I got confused by the "helpful text" saying: "In Figure 4(a), both reads occur after the writes. In Figure 4(b), TN reads x before it is written by T0." No, that is irrelevant: the reads in SI happen from the snapshot at the transaction start time, so when the reads happen is not relevant. Confused by this, I was wondering why we can't serialize the Figure 4.a execution as T1, T0, TN.

Ok, let's focus on Fig 4.a. T0's serialization point should be before T1, because of the rw-dependency from T0 to T1: T0's read happens before T1's write. T1 needs to be serialized before TN, because TN reads z from T1's write. But TN is problematic. TN introduces an rw-dependency from TN to T0. It reads x from its snapshot, which is earlier than T0's commit point. So TN should come before T0. This conflicts with the two previous requirements that T0 precedes T1 and T1 precedes TN. We have a cycle that prevents serialization.
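The cycle argument can be checked mechanically. Here is a small sketch using Python's standard `graphlib` module (3.9+); the `serial_order` helper and the edge list are my own encoding of the three ordering constraints described above, not something taken from the paper.

```python
from graphlib import TopologicalSorter, CycleError


def serial_order(must_precede):
    """Given (earlier, later) ordering constraints, return one valid
    serial order, or None if the constraints form a cycle."""
    preds = {}
    for earlier, later in must_precede:
        preds.setdefault(earlier, set())
        preds.setdefault(later, set()).add(earlier)
    try:
        return list(TopologicalSorter(preds).static_order())
    except CycleError:
        return None


fig_4a = [
    ("T0", "T1"),  # rw-dependency: T0 read what T1 later wrote
    ("T1", "TN"),  # TN reads z written by T1
    ("TN", "T0"),  # rw-dependency: TN's snapshot predates T0's write of x
]
print(serial_order(fig_4a))      # None

# Drop TN's stale read of x and the cycle disappears.
print(serial_order(fig_4a[:2]))  # ['T0', 'T1', 'TN']
```

With all three constraints there is no topological order, which is another way of saying the execution is not serializable.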

Figure 4.b is very similar to Figure 4.a and has the same cycle. The only difference is that TN's commit point comes a little later, but that doesn't change anything: TN does its read from the snapshot taken at the start of TN.

It would have been nice to supplement the figure with text explaining the cycles and giving some insight into the thought process.
