Looking Back at Postgres

This is a 2019 article by Joe Hellerstein, the Jim Gray Professor of Computer Science at UC Berkeley. Last year at SIGMOD, Joe received the Ted Codd Innovations Award and gave an awesome overview of his research agenda.

This article, written to be included in Stonebraker’s Turing Award book, provides a retrospective on the Postgres project, which Stonebraker led from the mid-1980s to the mid-1990s. I love this article a lot because, as I have written before, context is my crack: "The more context I know, the better I become able to locate something almost spatially, and the more I can make sense of it. Even reading the history and motivation for the subject can give my understanding a big boost." The entire paper is context, and it even has a section titled "Context". How cool is that? The footnotes in the article are also excellent, with very interesting gems of their own.

Disclaimer: I use a lot of text from the article to summarize it. The features and impact sections in this write-up are just text lifted from the article. (Don't taze me bro!)

Postgres origin story

Riding on the success of the Ingres project at Berkeley, and the subsequent start-up Relational Technology, Inc. (RTI), Stonebraker began working in the early 1980s on database support for data types beyond the traditional rows and columns of Codd's relational model. A motivating example was to provide database support for Computer-Aided Design (CAD) tools for the microelectronics industry, including "new data types such as polygons, rectangles, text strings, etc.", "efficient spatial searching", "complex integrity constraints", and "design hierarchies and multiple representations" of the same physical constructions.

What the hey? I didn't expect this to be the origin story of Postgres!! This is almost exactly the motivation for the MongoDB document database in 2007, a good 25 years later.

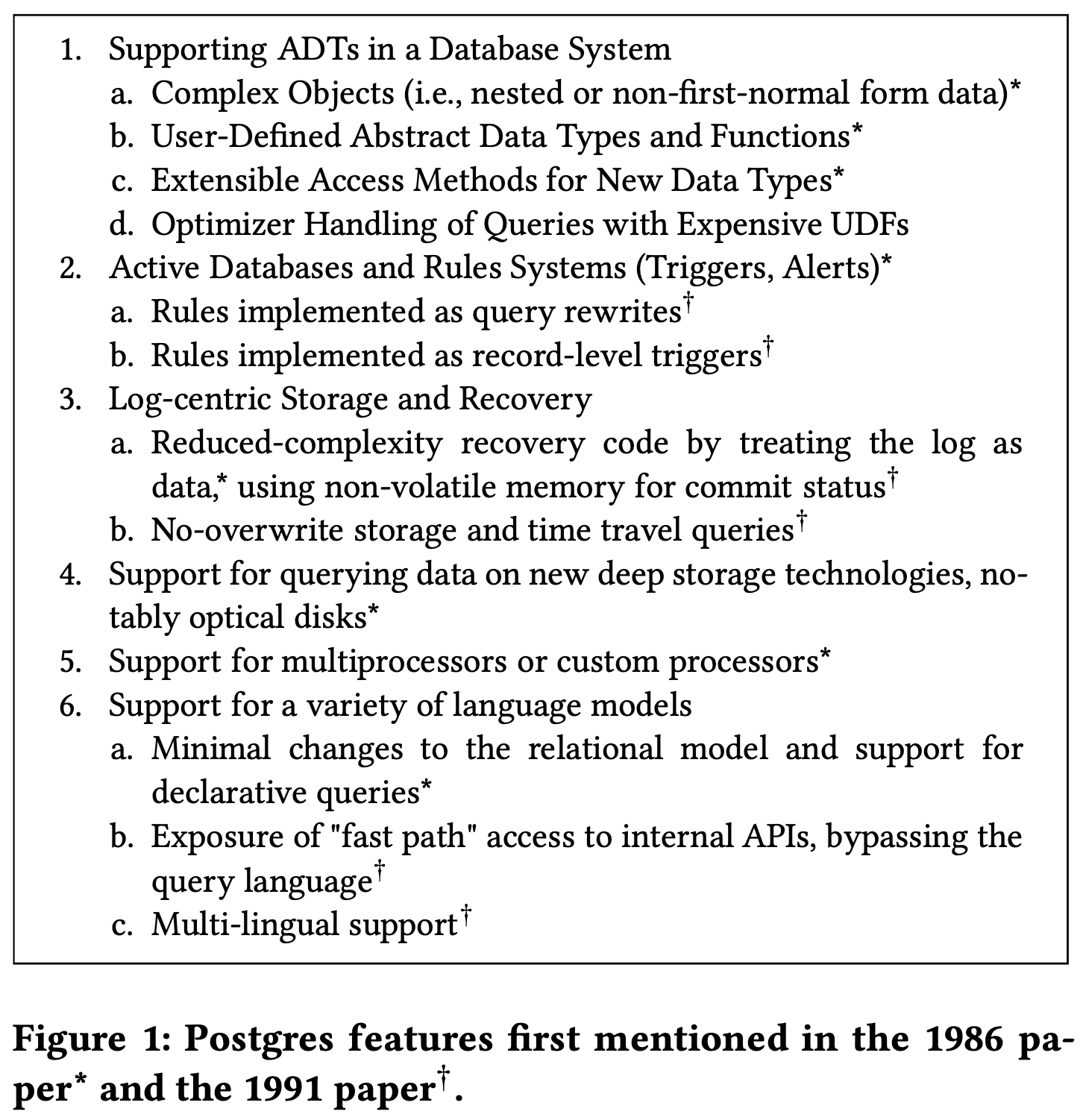

Postgres was "Post-Ingres": a system designed to take what Ingres could do, and go beyond. The signature theme of Postgres was the introduction of what Stonebraker eventually called Object-Relational database features: support for object-oriented programming ideas within the data model and declarative query language of a database system. But Stonebraker also decided to pursue a number of other technical challenges in Postgres that were independent of object-oriented support, including active database rules, versioned data, tertiary storage, and parallelism.

So Postgres was Stonebraker's grand effort to build a one-size-fits-all database system. This is ironic, because later (after he joined MIT) he published the "One size does not fit all" paper. Joe also picks up on this, and he sides with the Berkeley-era Stonebraker's approach "that a broad majority of database problems can be solved well with a good general-purpose architecture."

The article reviews these features. What intrigued me was that only a couple of them were hits, and most were misses. Joe says: "Many of these topics were addressed in Postgres well before they were studied or reinvented by others; in many cases Postgres was too far ahead of its time and the ideas caught fire later, with a contemporary twist."

So, then, what made Postgres so successful? What was the big hit? It may be the flexible open-source code base and the dynamic group behind it that kept this going. Berkeley had been a hotbed of software development, with BSD and other projects coming out at the time.

Stonebraker acknowledges this very humbly in the quote below, and Joe summarizes the lesson as "do something important and set it free."

[A] pick-up team of volunteers, none of whom have anything to do with me or Berkeley, have been shepherding that open source system ever since 1995. The system that you get off the web for Postgres comes from this pick-up team. It is open source at its best and I want to just mention that I have nothing to do with that and that collection of folks we all owe a huge debt of gratitude to.

In the lessons section at the end of the article, Joe also talks about the second system effect, quoted below. I agree with what he says. I think another reason this worked is the incremental delivery of the system through piecewise student projects.

The highest-order lesson I draw comes from the fact that Postgres defied Fred Brooks’ “Second System Effect” (1975). Brooks argued that designers often follow up on a successful first system with a second system that fails due to being overburdened with features and ideas. Postgres was Stonebraker’s second system, and it was certainly chock full of features and ideas. Yet the system succeeded in prototyping many of the ideas, while delivering a software infrastructure that carried a number of the ideas to a successful conclusion. This was not an accident: at base, Postgres was designed for extensibility, and that design was sound. With extensibility as an architectural core, it is possible to be creative and stop worrying so much about discipline: you can try many extensions and let the strong succeed. Done well, the “second system” is not doomed; it benefits from the confidence, pet projects, and ambitions developed during the first system. This is an early architectural lesson from the more “server-oriented” database school of software engineering, which defies conventional wisdom from the “component-oriented” operating systems school of software engineering.

Features

Complex objects

Relational modeling religion dictated that data should be restructured and stored in an unnested format, using multiple flat entity tables (orders, products) with flat relationship tables (product_in_order) connecting them. But in some cases you want to store the nested representation, because it is natural for the application.
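To make the flat-versus-nested tension concrete, here is a minimal Python sketch. The order data and the helper names `flatten` and `nest` are made up for illustration; only the table names (orders, products, product_in_order) come from the text. It shows the same order once in the nested shape an application would naturally use and once split into flat entity and relationship rows.

```python
# A nested "order", the shape an application might naturally work with.
# All values here are invented for illustration.
nested_order = {
    "order_id": 1,
    "customer": "alice",
    "products": [
        {"product_id": 10, "name": "widget", "qty": 2},
        {"product_id": 11, "name": "gadget", "qty": 1},
    ],
}


def flatten(order):
    """Split one nested order into flat entity and relationship rows."""
    orders = [(order["order_id"], order["customer"])]
    products = [(p["product_id"], p["name"]) for p in order["products"]]
    product_in_order = [
        (order["order_id"], p["product_id"], p["qty"]) for p in order["products"]
    ]
    return orders, products, product_in_order


def nest(orders, products, product_in_order):
    """Rebuild the nested form by joining the flat rows back together."""
    names = dict(products)  # product_id -> name
    (order_id, customer), = orders  # this sketch handles a single order
    return {
        "order_id": order_id,
        "customer": customer,
        "products": [
            {"product_id": pid, "name": names[pid], "qty": qty}
            for oid, pid, qty in product_in_order
            if oid == order_id
        ],
    }


orders, products, product_in_order = flatten(nested_order)
```

The round trip loses nothing, but the application pays for a join every time it wants the order back in its natural shape, which is the pain that nested column types try to remove.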

Postgres retained tables as its "outermost" data type, but allowed columns to have "complex" types including nested tuples or tables. One of its more esoteric implementations, first explored in the ADT-Ingres prototype, was to allow a table-typed column to be specified declaratively as a query definition: "Quel as a data type".

As Postgres has grown over the years (and shifted syntax from Postquel to versions of SQL that reflect many of these goals), it has incorporated support for nested data like XML and JSON into a general-purpose DBMS without requiring any significant rearchitecting. The battle swings back and forth, but the Postgres approach of extending the relational framework with extensions for nested data has been shown time and again to be a natural end-state for all parties after the arguments subside.

User-defined abstract data types and functions

Postgres pioneered the idea of having opaque, extensible Abstract Data Types (ADTs), which are stored in the database but not interpreted by the core database system. To enable queries that interpret and manipulate these objects, an application programmer needs to be able to register User-Defined Functions (UDFs) for these types with the system, and be able to invoke those UDFs in queries. User-Defined Aggregate (UDA) functions are also desirable to summarize collections of these objects in queries. Postgres was the pioneering database system supporting these features in a comprehensive way.

Why put this functionality into the DBMS, rather than the applications above? The classic answer was the significant performance benefit of “pushing code to data,” rather than “pulling data to code.” Postgres showed that this is quite natural within a relational framework: it involved modest changes to a relational metadata catalog, and mechanisms to invoke foreign code, but the query syntax, semantics, and system architecture all worked out simply and elegantly.
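Here is a small runnable sketch of "pushing code to data", with a UDF and a UDA registered with the engine and then invoked from queries. It uses Python's built-in `sqlite3` module as a stand-in, not Postgres itself, and the rectangle encoding (`"x1,y1,x2,y2"` strings), the `shapes` table, and the function names `area` and `bounding_box` are all assumptions made up for the example.

```python
import sqlite3


def parse(rect):
    """Decode the opaque 'x1,y1,x2,y2' string; the engine never looks inside it."""
    return [float(v) for v in rect.split(",")]


def area(rect):
    """UDF: area of one rectangle value."""
    x1, y1, x2, y2 = parse(rect)
    return abs(x2 - x1) * abs(y2 - y1)


class BoundingBox:
    """UDA: fold many rectangles into the smallest rectangle covering them all."""

    def __init__(self):
        self.box = None

    def step(self, rect):
        x1, y1, x2, y2 = parse(rect)
        lo_x, lo_y, hi_x, hi_y = min(x1, x2), min(y1, y2), max(x1, x2), max(y1, y2)
        if self.box is None:
            self.box = [lo_x, lo_y, hi_x, hi_y]
        else:
            self.box = [
                min(self.box[0], lo_x), min(self.box[1], lo_y),
                max(self.box[2], hi_x), max(self.box[3], hi_y),
            ]

    def finalize(self):
        if self.box is None:
            return None
        return ",".join(f"{v:g}" for v in self.box)


conn = sqlite3.connect(":memory:")
conn.create_function("area", 1, area)                   # register the UDF
conn.create_aggregate("bounding_box", 1, BoundingBox)   # register the UDA
conn.execute("CREATE TABLE shapes (name TEXT, rect TEXT)")
conn.executemany(
    "INSERT INTO shapes VALUES (?, ?)",
    [("a", "0,0,2,3"), ("b", "1,1,5,2"), ("c", "4,4,6,6")],
)

# The filter and the aggregate both run inside the engine, next to the data.
big = conn.execute("SELECT name FROM shapes WHERE area(rect) > 4 ORDER BY name").fetchall()
bbox = conn.execute("SELECT bounding_box(rect) FROM shapes").fetchone()[0]
```

Note that the query syntax did not change at all: the new functions are just names in the catalog, which is the "modest changes" point the article makes.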

Extensible access methods for new datatypes

The problem of indexing multi-dimensional data was au courant at the time of Postgres, and the R-tree developed by Antonin Guttman (1984) in Stonebraker’s group was one of the most successful new indexes developed to solve this problem in practice. Still, the invention of an index structure does not solve the end-to-end systems problem of DBMS support for multi-dimensional range queries. Many questions arise. Can you add an access method like R-trees to your DBMS easily? Can you teach your optimizer that said access method will be useful for certain queries? Can you get concurrency and recovery correct?

R-trees became a powerful driver and the main example of the elegant extensibility of Postgres’ access method layer and its integration into the query optimizer. Postgres demonstrated, in an opaque ADT style, how to register an abstractly described access method (the R-tree, in this case), and how a query optimizer could recognize an abstract selection predicate (a range selection in this case) and match it to that abstractly described access method.
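The registration-and-matching idea can be sketched in a few lines of Python. This is a toy model under my own assumptions, not Postgres's actual catalog or planner: the names `AccessMethod`, `Index`, `register_access_method`, and `choose_scan`, and the operator names, are hypothetical. The point is only that the planner consults registered metadata instead of hard-coding knowledge of any particular index type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessMethod:
    name: str
    operators: frozenset  # operator names this kind of index can answer


@dataclass(frozen=True)
class Index:
    column: str
    method: AccessMethod


# The "catalog": access methods are described as data, not baked into the planner.
CATALOG = {}


def register_access_method(name, operators):
    """Add an abstractly described access method to the catalog."""
    CATALOG[name] = AccessMethod(name, frozenset(operators))
    return CATALOG[name]


def choose_scan(predicate, indexes):
    """Match a (column, operator) predicate to any index whose method supports it."""
    column, operator = predicate
    for index in indexes:
        if index.column == column and operator in index.method.operators:
            return f"IndexScan({index.method.name} on {column})"
    return f"SeqScan(filter {column} {operator})"


btree = register_access_method("btree", ["<", "<=", "=", ">=", ">"])
rtree = register_access_method("rtree", ["overlaps", "contains"])
indexes = [Index("price", btree), Index("region", rtree)]

spatial_plan = choose_scan(("region", "overlaps"), indexes)
fallback_plan = choose_scan(("region", "<"), indexes)
```

Adding a new index type here means one more `register_access_method` call; `choose_scan` does not change.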

PostgreSQL today leverages both the original software architecture of extensible access methods (it has B-tree, GiST, SP-GiST, and GIN indexes) and the extensibility and high concurrency of the Generalized Search Tree (GiST) interface. GiST indexes power the popular PostgreSQL-based PostGIS geographic information system; GIN indexes power PostgreSQL’s internal text indexing support.

Active Databases and Rule Systems

Stonebraker’s work on database rules began with Eric Hanson’s Ph.D., which initially targeted Ingres but quickly transitioned to the new Postgres project. It expanded to the Ph.D. work of Spyros Potamianos on PRS2: Postgres Rules System 2. A theme in both implementations was the potential to implement rules in two different ways. One option was to treat rules as query rewrites, reminiscent of the work on rewriting views that Stonebraker pioneered in Ingres. In this scenario, a rule logic of "on condition then action" is recast as "on query then rewrite to a modified query and execute it instead." For example, a query like "append a new row to Mike’s list of awards" might be rewritten as "raise Mike’s salary by 10%." The other option was to implement a more physical "on condition then action," checking conditions at a row level by using locks inside the database. When such locks were encountered, the result was not to wait (as in traditional concurrency control), but to execute the associated action.
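The two implementation styles can be contrasted with a toy Python sketch. Everything in it is an assumption for illustration: the tuple-based statement format, the helper names, and the choice to have the rewritten query include the original append as well as the raise. It is not how PRS2 was coded; it only shows where the rule fires in each style (before execution, on the query, versus during execution, on the row).

```python
def make_db():
    """A toy one-row employee table; salary is an integer to avoid float noise."""
    return {"Mike": {"salary": 1000, "awards": []}}


def execute(db, statement):
    """Apply one primitive statement (op, employee, argument) to the toy database."""
    op, name, arg = statement
    if op == "append_award":
        db[name]["awards"].append(arg)
    elif op == "raise_salary":
        db[name]["salary"] = db[name]["salary"] * (100 + arg) // 100


# Style 1: query rewrite. The rule fires on the query text before anything runs.
def rewrite(statement):
    op, name, _ = statement
    if op == "append_award":
        # Assumed rule: an award append is rewritten to also raise the salary by 10%.
        return [statement, ("raise_salary", name, 10)]
    return [statement]


def run_with_rewrite(db, statement):
    for rewritten in rewrite(statement):
        execute(db, rewritten)


# Style 2: row-level markers. The rule fires when the executor touches a marked row.
def run_with_row_markers(db, statement, markers):
    op, name, _ = statement
    execute(db, statement)
    action = markers.get((name, op))  # a "lock" that triggers an action, not a wait
    if action is not None:
        execute(db, action)


award = ("append_award", "Mike", "best paper")

db_rewrite = make_db()
run_with_rewrite(db_rewrite, award)

db_markers = make_db()
run_with_row_markers(
    db_markers, award, {("Mike", "append_award"): ("raise_salary", "Mike", 10)}
)
```

Both styles end in the same state here; they differ in cost. Rewriting is paid once per query no matter how many rows it touches, while markers are paid per row touched.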

In the end, neither the query rewriting scheme nor the row-level locking scheme was declared a "winner" for implementing rules in Postgres—both were kept in the released system. Eventually all of the rules code was scrapped and rewritten in PostgreSQL, but the current source still retains both the notions of per-statement and per-row triggers.

Log-centric Storage and Recovery

Stonebraker described his design for the Postgres storage system this way:

When considering the POSTGRES storage system, we were guided by a missionary zeal to do something different. All current commercial systems use a storage manager with a write-ahead log (WAL), and we felt that this technology was well understood. Moreover, the original Ingres prototype from the 1970s used a similar storage manager, and we had no desire to do another implementation.

Over the years, Stonebraker repeatedly expressed distaste for the complex write-ahead logging schemes pioneered at IBM and Tandem for database recovery. One of his core objections was based on a software engineering intuition that nobody should rely upon something that complicated, especially for functionality that would only be exercised in rare, critical scenarios after a crash.

In the end, the Postgres storage system never excelled on performance; versioning and time-travel were removed from PostgreSQL over time and replaced by write-ahead logging. This is because, once the commercial vendors had write-ahead logs working well, they innovated on follow-on ideas such as transactional replication based on log shipping, which would be difficult in the Postgres scheme.
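For readers who have not seen versioning and time-travel in action, here is a toy Python sketch of the general idea: updates append a new version instead of overwriting, so a read can ask for the state as of an earlier time. The class `VersionedTable` and its logical clock are my own assumptions for illustration and say nothing about the actual on-disk format Postgres used.

```python
class VersionedTable:
    """Append-only table: an update adds a new version instead of overwriting."""

    def __init__(self):
        self.versions = {}  # key -> list of (commit_time, value), oldest first
        self.clock = 0      # logical commit counter standing in for timestamps

    def put(self, key, value):
        """Record a new version and return the time at which it became visible."""
        self.clock += 1
        self.versions.setdefault(key, []).append((self.clock, value))
        return self.clock

    def get(self, key, as_of=None):
        """Return the value visible at time `as_of` (default: the latest version)."""
        visible = None
        for commit_time, value in self.versions.get(key, []):
            if as_of is None or commit_time <= as_of:
                visible = value
        return visible


salaries = VersionedTable()
t1 = salaries.put("mike", 1000)
t2 = salaries.put("mike", 1100)
```

Here `salaries.get("mike")` sees the newest version, while `salaries.get("mike", as_of=t1)` travels back to the first one. Old versions double as the recovery record in this style, which is the appeal; the cost is that the table keeps growing until something cleans up versions nobody can see anymore.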

Support for Multiprocessors: XPRS

Stonebraker never architected a large parallel database system, but he led many of the motivating discussions in the field. His “Case for Shared Nothing” paper (1986) documented the coarse-grained architectural choices in the area; it popularized the terminology used by the industry, and threw support behind shared-nothing architectures like those of Gamma and Teradata, which were rediscovered by the Big Data crowd in the 2000s.

The basic idea of what Stonebraker called “The Wei Hong Optimizer” was to cut the problem in two: run a traditional single-node query optimizer in the style of System R, and then “parallelize” the resulting single-node query plan by scheduling the degree of parallelism and placement of each operator based on data layouts and system configuration. This approach is heuristic, but it makes parallelism an additive cost to traditional query optimization, rather than a multiplicative cost. Although “The Wei Hong Optimizer” was designed in the context of Postgres, it became the standard approach for many of the parallel query optimizers in industry.
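The additive-versus-multiplicative claim is easy to see with a back-of-the-envelope count. The Python sketch below is a simplified model under my own assumptions, not the actual optimizer: phase one enumerates every left-deep join order, and phase two picks a degree of parallelism for each join independently from a fixed menu. The function names are hypothetical.

```python
from itertools import permutations


def single_node_plans(tables):
    """Phase 1 search space in this model: every left-deep join order."""
    return list(permutations(tables))


def two_phase_search_size(tables, degrees):
    """Pick a join order first, then a degree for each join: the costs add."""
    plans = len(single_node_plans(tables))
    joins = len(tables) - 1
    return plans + joins * len(degrees)


def joint_search_size(tables, degrees):
    """Consider every join order with every degree assignment: the costs multiply."""
    plans = len(single_node_plans(tables))
    joins = len(tables) - 1
    return plans * len(degrees) ** joins


tables = ["orders", "products", "customers", "regions"]
degrees = [1, 2, 4, 8]

two_phase = two_phase_search_size(tables, degrees)  # 24 + 3 * 4
joint = joint_search_size(tables, degrees)          # 24 * 4 ** 3
```

With four tables and four candidate degrees, the two-phase search looks at 36 options against 1536 for the joint search. The price is that the best parallel plan might not be a parallelized version of the best single-node plan, which is why the text calls the approach heuristic.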

Support for a Variety of Language Models

One of Stonebraker’s recurring interests since the days of Ingres was the programmer API to a database system. The OODB idea was to make programming language objects be optionally marked “persistent,” and handled automatically by an embedded DBMS. Postgres supported storing nested objects and ADTs, but its relational-style declarative query interface meant that each roundtrip to the database was unnatural for the programmer (requiring a shift to declarative queries) and expensive to execute (requiring query parsing and optimization). To compete with the OODB vendors, Postgres exposed a so-called “Fast Path” interface: basically a C/C++ API to the storage internals of the database. This enabled Postgres to be moderately performant in academic OODB benchmarks, but never really addressed the challenge of allowing programmers in multiple languages to avoid the impedance mismatch problem. Instead, Stonebraker branded the Postgres model as “Object-Relational,” and simply sidestepped the OODB workloads as a “zero-billion dollar” market. Today, essentially all commercial relational database systems are “Object-Relational” database systems.

The application-level approach that did take hold, object-relational mapping (ORM) layers, is different from both OODBs and Stonebraker’s definition of Object-Relational DBs. In addition, lightweight persistent key-value stores have succeeded as well, in both non-transactional and transactional forms. These were pioneered by Stonebraker’s Ph.D. student Margo Seltzer, who wrote BerkeleyDB as part of her Ph.D. thesis at the same time as the Postgres group; that work presaged the rise of distributed “NoSQL” key-value stores like Dynamo, MongoDB, and Cassandra.

Impact

Open-source Impact

As the Postgres research project was winding down, two students in Stonebraker’s group—Andrew Yu and Jolly Chen—modified the system’s parser to accept an extensible variant of SQL rather than the original Postquel language. The first Postgres release supporting SQL was Postgres95; the next was dubbed PostgreSQL.

A set of open-source developers became interested in PostgreSQL and “adopted” it even as the rest of the Berkeley team was moving on to other interests. Over time the core developers for PostgreSQL have remained fairly stable, and the open-source project has matured enormously. Early efforts focused on code stability and user-facing features, but over time the open source community made significant modifications and improvements to the core of the system as well, from the optimizer to the access methods and the core transaction and storage system.

While many things have changed in 25 years, the basic architecture of PostgreSQL remains quite similar to the university releases of Postgres in the early 1990s, and developers familiar with the current PostgreSQL source code would have little trouble wandering through the Postgres3.1 source code (c. 1991). Everything from source code directory structures to process structures to data structures remains remarkably similar. The code from the Berkeley Postgres team had excellent bones.

PostgreSQL today is without question the most high-function open-source DBMS, supporting features that are often missing from commercial products. It is also (according to one influential rankings site) the most popular independent open-source database in the world, and its impact continues to grow: in both 2017 and 2018 it was the fastest-growing database system in the world in popularity. PostgreSQL is used across a wide variety of industries and applications, which is perhaps not surprising given its ambition of broad functionality.

Heroku is a cloud SaaS provider that is now part of Salesforce. Postgres was adopted by Heroku in 2010 as the default database for its platform. Heroku chose Postgres because of its operational reliability. With Heroku’s support, more major application frameworks, such as Ruby on Rails and Django for Python, began to recommend Postgres as their default database.

Commercial adaptations

Many of the commercial efforts that built on PostgreSQL have addressed what is probably its key limitation: the lack of an ability to scale out to a parallel, shared-nothing architecture. The commercial adaptations include Illustra, Netezza, Greenplum, EnterpriseDB, AsterData, ParAccel (whose technology Amazon licensed as a basis for AWS Redshift), and Citus.

Although the article doesn't mention it, AWS RDS and AWS Aurora also provide managed Postgres services and are big.

Comments

What are your thoughts on the future of user-defined functions? The reward is very tantalizing, but engineers are usually advised against using them due to the difficulty of debugging a database and the risk of crashing it as opposed to your server. Is there a future where these costs could be ameliorated?

This part of the post also jumped out at me:

> its relational-style declarative query interface meant that each roundtrip to the database was unnatural for the programmer (requiring a shift to declarative queries) and expensive to execute (requiring query parsing and optimization). To compete with the OODB vendors, Postgres exposed a so-called “Fast Path” interface: basically a C/C++ API to the storage internals of the database.

This solution seems to have fizzled out, but it seems that the problem persists. ORMs try to improve the ergonomics, but at the cost of another layer of abstraction and potentially large performance penalties. Query builders also gesture at the problem but are relatively janky. Are there new approaches on the horizon? Is the performance penalty from parsing and optimizing still relevant today, or subsumed by networking costs?