Detock: High Performance Multi-region Transactions at Scale (SIGMOD 2023)

This paper (from SIGMOD 2023) is a follow-up to the deterministic database work that Daniel Abadi has been doing for more than a decade. I like this type of sustained research effort, as opposed to people jumping from one branch to another before exploring the approach in depth.

The backstory for Detock starts with the Calvin paper from 2012. Calvin used a single, logically centralized, infallible coordinator (which is in fact 3 physical nodes under the raincoat using Paxos for state machine replication) to durably lock in the order of the oplogs to be executed. The coordinator also gets rid of sources of nondeterminism, such as random values or the current time, by filling in those values. The oplogs then get sent to the workers, which execute them and materialize the values. The execution is local: the executors simply follow the logs they receive.

This deterministic database architecture makes an interesting tradeoff. Rather than primaries executing and replicating the resulting values to secondaries, here a coordinator orders and disseminates the oplogs, and each replica does the execution itself, locally. Basically, this trades off communicating replicated values against doing the computation locally and deriving the values. This approach can actually simplify the design, and as we will see in the Detock work below, it can help improve latency and throughput in a wide area network (WAN) multi-region deployment.
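To make the tradeoff concrete, here is a minimal sketch, not Calvin's actual code. The `sequence`, `apply_op`, and `replay` names and the `"RANDOM"`/`"NOW"` placeholders are hypothetical, made up for illustration. A sequencer fixes the order and fills in the nondeterministic values once, and then any replica that replays the same log derives the same state without receiving the values from anyone.

```python
import random


def sequence(requests, rng, clock):
    """Hypothetical sequencer: fix an order and resolve random/time inputs up front."""
    log = []
    for kind, key, value in requests:
        if value == "RANDOM":
            value = rng.randint(0, 99)   # resolved once, by the sequencer only
        elif value == "NOW":
            value = clock()              # resolved once, by the sequencer only
        log.append((kind, key, value))
    return log


def apply_op(state, op):
    """Apply one log entry; it carries no nondeterminism anymore."""
    kind, key, value = op
    if kind == "set":
        state[key] = value
    elif kind == "add":
        state[key] = state.get(key, 0) + value


def replay(log):
    """Each replica runs this locally and derives the values itself."""
    state = {}
    for op in log:
        apply_op(state, op)
    return state


requests = [("set", "x", "RANDOM"), ("set", "t", "NOW"), ("add", "x", 5)]
log = sequence(requests, random.Random(7), lambda: 1000)
replica_a = replay(log)
replica_b = replay(log)
```

Both replicas end up with identical state, even though only the log (and not the resulting values) was shipped.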

The biggest con of the deterministic database is that you lose interactive query/transaction execution. The transaction is basically a stored procedure with its read-set and write-set known in advance. In other words, the read/write sets do not grow by interacting with the database: we have a one-shot transaction.

Oh, also, this is a batch-based processing system, which takes a toll on latency. The coordinator locks in the oplogs in mini-batches (default is 5ms) and sends these mini-batches for execution. Not too bad, batching is an idea that works. And we already see disaggregation at work here: sorting oplogs is separate from executing them. However, I am a little puzzled by why Detock didn't go further and disaggregate storage as well, so that nodes could read from it, rather than caching data from other nodes and executing oplogs on it to read the values. Ok, we will get to this later in the Detock discussion. Where were we again?

Ok, Calvin got a follow-up work, SLOG, in 2019, to decentralize the coordinator. SLOG differentiated between two types of transactions: single-home and multi-home transactions. This concept is still used in Detock, and Detock one-ups SLOG in how efficiently it handles multi-home transactions. Ok, this gets confusing if you don't pay enough attention to the definition of single/multi-home transactions, as I failed to do when reading Detock. I wish they used standard terminology: single-home==single-region and multi-home==multi-region, where a region is a geographic region, such as US-EAST or US-WEST. You can still have a single-home transaction (i.e., single region, say at US-EAST) that touches multiple partitions (hence it is called, duh, multi-partition) over machines all in that same region.
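Since I tripped on this terminology, here is a tiny sketch of the two independent axes. The `classify` function and the `home_of` / `partition_of` maps are hypothetical names for illustration, not anything from the SLOG or Detock codebases.

```python
def classify(keys, home_of, partition_of):
    """Classify a transaction by the home regions and partitions of its keys."""
    regions = {home_of[k] for k in keys}
    partitions = {partition_of[k] for k in keys}
    homing = "single-home" if len(regions) == 1 else "multi-home"
    spread = "single-partition" if len(partitions) == 1 else "multi-partition"
    return homing, spread


# Assumed toy placement: each key has one home region and one partition.
home_of = {"a": "US-EAST", "b": "US-EAST", "c": "US-WEST"}
partition_of = {"a": 0, "b": 1, "c": 0}

same_region = classify(["a", "b"], home_of, partition_of)
cross_region = classify(["a", "c"], home_of, partition_of)
```

Here `same_region` comes out single-home but multi-partition, and `cross_region` comes out multi-home but single-partition, which is exactly the distinction that is easy to miss.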

OK, we were talking about how SLOG went about decentralizing the coordinator. SLOG has a dedicated regional coordinator for each single-home oplog. These coordinators then disseminate the mini-batch oplogs to the other regions, so the executors there can execute them and materialize the values in case they need them for multi-home transactions. And for the multi-home transactions, SLOG uses a single, globally designated, centralized coordinator to serialize them into a total order and disseminate these mini-batch oplogs to the regions for execution. The total ordering of multi-home transactions avoids deadlock, but this comes at the cost of routing all multi-home transactions through a single coordinator for the whole globe.

See how that is limiting? In my summary of SLOG, back in 2020, I mentioned that this can be improved even further:

The use of a global log for multi-home transaction ordering was an interesting point of discussion in our Zoom reading group. We discussed whether it could be possible to order the multi-home transactions by other means, say using version vectors included in their metadata. The global log provides a central point which increases latency, as we will see in the latency graphs in the evaluation.

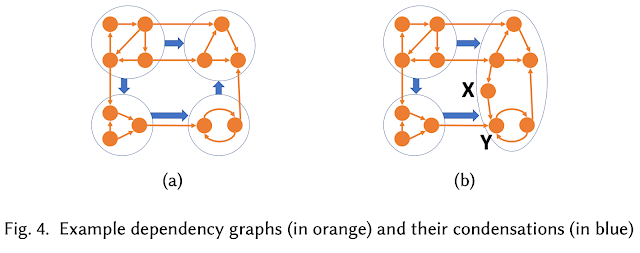

The Detock paper does that relaxation. Ok, not with version vectors, but with dependency tracking. This puts Detock in similar company with EPaxos, and follow-up work like the Tempo and Accord protocols. Detock in fact compares against Janus, which is an EPaxos-based (dependency-graph) transaction implementation. Unfortunately, the paper fails to make a connection between Detock and recent work that alleviates the problems associated with EPaxos's dependency graph hell. The EPaxos Revisited paper (from NSDI 2021) identifies a problem where EPaxos dependency chains can grow indefinitely under certain conditions, and shows a way to fix it by finding strongly-connected components (SCCs), topologically sorting them, and using an increasing sequence number to order them deterministically and locally at different nodes. This is also the approach the Detock paper takes.

A second line of work that improved on the dependency graph problem of EPaxos is Tempo (from EuroSys 2021). Tempo used timestamps to encode dependencies, and executed commands only after the timestamp becomes stable, i.e., all commands with a lower timestamp are known. The Accord protocol (Cassandra Enhancement Proposal "CEP-15: General Purpose Transactions") improved on Tempo further by using loosely synchronized clocks for timestamping, along with future timestamping, to improve the efficiency of Tempo and avoid unnecessary cross contention of dependencies. The Detock paper uses a similar future timestamping idea to reduce the temporal contention domain of dependencies, in an effort to combat the livelock (indefinite growth of dependency chains) problem.

Since Detock uses similar ideas for resolving dependency graphs, I think failing to cite these papers was a missed opportunity for Detock. Dan Abadi's group works in the intersection of distributed systems and databases, and I think they missed an opportunity to bridge these two communities by relating work across the two banks of the river.

Here is Detock's summary of how they deal with the dependencies coming from multi-home transactions:

Detock presents a graph-based concurrency control protocol that enables multi-region transaction to be scheduled deterministically at each region such that all regions involved in processing a transaction will construct the same graph independently and process transactions completely without cross-region coordination after receiving all parts of the transaction. The graphs constructed by each region are formed based on conflicting accesses by different transactions, and indeed may contain deadlocks. However, since each region constructs the same graph, deadlocks can be resolved by dynamically reordering accesses by deadlocked transactions to resolve the deadlock deterministically without ever having to resort to aborting transactions and without having to communicate this reordering with other regions.

Nevertheless, high network delays between regions can cause the size and number of deadlocks to grow unbounded. We therefore implement a practical version of this algorithm within a new system called Detock that annotates transactions with real-time based timestamps, which are used to strategically schedule transactions to reduce the probability of deadlock. Detock also implements a novel protocol for migrating data to other geo-partitions using a simpler approach than used in previous work.
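To illustrate the deterministic deadlock resolution idea, here is a minimal sketch of my own, not the paper's algorithm as written. I assume a hypothetical `deps` map from a transaction id to the ids it must wait for, and I break ties inside a cycle by transaction id. It finds SCCs with Tarjan's algorithm, which emits them dependencies-first, and sorts the members of each SCC by id. Since every region builds the same graph, every region computes the same order without talking to anyone.

```python
def deterministic_order(deps):
    """deps maps txn id -> set of txn ids it must wait for (cycles allowed).

    Returns an execution order where dependencies come first and
    deadlocked (cyclic) transactions are ordered by id.
    """
    index, low, on_stack = {}, {}, set()
    stack, order = [], []
    counter = 0

    def visit(t):
        nonlocal counter
        index[t] = low[t] = counter
        counter += 1
        stack.append(t)
        on_stack.add(t)
        for d in sorted(deps.get(t, ())):      # sorted => same traversal everywhere
            if d not in index:
                visit(d)
                low[t] = min(low[t], low[d])
            elif d in on_stack:
                low[t] = min(low[t], index[d])
        if low[t] == index[t]:                 # t is the root of an SCC
            scc = []
            while True:
                u = stack.pop()
                on_stack.discard(u)
                scc.append(u)
                if u == t:
                    break
            order.extend(sorted(scc))          # break the deadlock by txn id

    for t in sorted(deps):
        if t not in index:
            visit(t)
    return order


# T1 and T2 are deadlocked; T3 waits on T1; T4 is independent.
region_a = deterministic_order({1: {2}, 2: {1}, 3: {1}, 4: set()})
region_b = deterministic_order({4: set(), 3: {1}, 2: {1}, 1: {2}})
```

The recursion is fine for a toy graph; a real implementation would want an iterative SCC search, and would also need to handle the graph growing as new transactions arrive.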

Detock overview

Each item is assigned to exactly one geographic region. This is called a home region. A region may store local data for which it is designated as the home region, and remote data which are materialized by replaying logs asynchronously replicated from other regions. Data is also partitioned locally within a region independently of home status: each partition might contain a mix of local and remote data.

This is the point where I connect back to the big-data and disaggregation mention I made above. I guess executing it yourself avoids the communication cost of going to the other side and back again to read the value, trading this off against extra storage/cache and computation cost. I wonder if, with disaggregated storage, it would be possible to read from a nearby materialization done by another node, or, if you are the first to materialize it, to share it with others.

A region can deterministically replay a local log from any other region 𝑅 to obtain the state of R’s local data. Therefore, persisting the local logs is sufficient for durability, and replication is performed by shipping these local logs. While it is not required for a region to hold any remote data, having a possibly stale copy of the remote data allows local snapshot reads of data from other regions and makes executing multi-home transactions (including the home-movement transactions in Section 4) faster. To this end, regions in Detock and SLOG asynchronously exchange their local logs to each other, so each region eventually receives and replays the complete local log from every other region, as can be seen in the Log Managers of both regions in Fig. 1.

Here is another recap, from the paper, of Detock's improvement over SLOG. It is important, so it is worth repeating:

SLOG globally orders multi-home transactions to avoid inconsistently ordering them across regions (e.g. T1 before T2 at region 1, but T2 before T1 at region 2) which could result in serializability violations, OCC aborts, or deadlock. Detock eliminates this global ordering, but must therefore deal with the problems that arise from inconsistent ordering (discussed in the next section). By eliminating multi-home transaction ordering, Detock is able to guarantee that each transaction, regardless of its type, only needs a single-round trip from the initiating region to the participating regions: the initiating region sends the multi-home transaction to each region which houses data that it accesses, waits to receive the local log records back from those regions through which it can derive the state at those regions over which that transaction must run, and can then process that transaction to completion locally. Every other region, including the ones that write local data, see the same local logs and also process that transaction locally.

Fig 5 shows a scenario where the dependencies between multi-home transactions can grow indefinitely if the arrival times of a transaction at different nodes are spread over a long duration, keeping the tab open for collisions in the temporal domain with other transactions. Detock deals with this by using a best-effort scheme called opportunistic ordering that merely reduces the probability of conflicting orders of multi-home transactions (and thus deadlock), but does not eliminate it entirely. When a transaction 𝑇 first enters Detock, its coordinator assigns it a future (real time) timestamp based on its local clock. To reduce conflicts, the coordinator attempts to assign a timestamp far enough into the future that the transaction will arrive everywhere prior to its designated start time. To accomplish this, the future timestamp is computed by adding to the coordinator’s local time the one-way delay to the farthest participating region (delay-wise) plus a small overshoot (2 ms).
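The timestamp arithmetic described above is simple enough to write down. This is a sketch under my own naming: `future_timestamp`, `one_way_delay_ms`, and the delay numbers are made up for illustration, and only the 2 ms overshoot comes from the paper.

```python
OVERSHOOT_MS = 2.0  # the small overshoot mentioned in the paper


def future_timestamp(local_now_ms, one_way_delay_ms, participants):
    """Coordinator's local time + delay to the farthest participant + overshoot."""
    farthest = max(one_way_delay_ms[r] for r in participants)
    return local_now_ms + farthest + OVERSHOOT_MS


# Hypothetical one-way delays from the coordinator's region, in milliseconds.
delays = {"us-west": 30.0, "eu": 45.0, "ap": 90.0}

# Only the participating regions matter; "ap" is not touched by this transaction.
ts = future_timestamp(1000.0, delays, ["us-west", "eu"])
```

With these numbers, `ts` is 1047.0: the transaction should have arrived at both participating regions before its designated start time, as long as the delay estimates hold and the clocks are loosely synchronized.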

Evaluation

Detock is implemented in C++ with ZeroMQ for message passing between processes on different nodes and between threads in the same process. The implementation is available at https://github.com/umd-dslam/Detock. Yay! What is more, they re-implemented Calvin, SLOG, and Janus inside this Detock codebase so that all four systems can use the same storage layer, communication library, local consensus code, and logging infrastructure, to make the comparison fairer.

The evaluation is well written and described. There are two non-standard things to note. First, multi-home (MH) transactions access data from exactly two regions, rather than two or more regions. Second, when evaluating with the TPC-C benchmark, transactions whose access sets depend on a read were modified to remove these dependencies, since the Detock codebase does not currently support dependent transactions.

Figures 10 and 11 show the advantages of Detock over previous work.

The comparison to CockroachDB in Section 5.4 was interesting. The comparison doesn't use absolute performance numbers because the two systems come from separate codebases. Figure 13 shows that as the contention increases with the ratio of HOT records, CockroachDB's throughput falls significantly. The paper explains this as follows: "CockroachDB uses a form of locking to handle write-write conflicts and thus is susceptible to deadlocks. It breaks a deadlock by randomly aborting one of the transactions. Additionally, its use of two-phase commit exacerbates the time a transaction needs to hold locks."
