Vertical Paxos and Primary-Backup Replication

This is a 2009 paper by Leslie Lamport, Dahlia Malkhi, and Lidong Zhou. This paper was very well written and it was clear, and easy to follow (at least for me after having read so many Paxos papers). I finished reading the paper in one hour -- a record time for a slow reader like me.

I knew about this work, but had not read the paper carefully before. I think I was overindexing on the reconfiguration aspect of Vertical Paxos, but by reading this paper (by going to the source) I found that the primary-backup replication application of Vertical Paxos was as much the point of emphasis as the reconfiguration aspect. The paper talks about the connection between Paxos and primary-backup replication applications, and presents vertical Paxos as a way of bridging these two.

The paper delves down in to the leader handover and reconfiguration, and shows a practical application of these in the context of primary-backup replication protocols. Although it is possible to let the state machine reconfigure itself as in MultiPaxos or Raft, Vertical Paxos presents a simpler and more powerful reconfiguration approach by assuming the presence of a dedicated configuration master. The downside is that, the configuration master itself would have to be implemented as a Paxos group for fault-tolerance. While this may sound costly, this can be done in a feasible manner in cloud deployments, because the same configuration master/group can serve many deployments.

Vertical Paxos II protocol presented here is especially simple and useful, and shows how to implement primary-backup replication in a safe, fault-tolerant, and performant manner. I will focus on that protocol in the second half of my writeup.

Vertical Paxos reconfiguration

Vertical Paxos leverages on the master to enable the set of acceptors to change within each individual consensus instance. Think of the ballots as arranged in a two-dimensional array, each vertical column consisting of all the ballots within a single instance arranged according to their number. In standard “horizontal” Paxos algorithms, configurations can change only as we move horizontally; they are unchanged when we move vertically (within a single instance). In Vertical Paxos, configurations change when we move vertically, but remain the same as we move horizontally from a ballot in one instance to the ballot with the same number in any other instance.

|

| Figure from https://paxos.systems/variants/#vertical |

In other words, Vertical Paxos allows every round, not just every consensus instance/slot, to have a different configuration of acceptors. Round 0 of a consensus instance may use some configuration C0, while round 1 of the same consensus instance may use some completely different configuration C1.

Overview of Vertical Paxos I and II

When replicas fail, the system must replace the failed replicas with new ones through reconfiguration, before more replica failures lead to permanent data loss. This involves state transfer to the new replicas, an often costly operation. The system therefore faces the difficult decision of either allowing the replica group to continue with a reduced level of resilience or disrupting the service during state transfer.

- Vertical Paxos I addresses this issue by allowing a replica group to operate with the restored resilience, while enabling state transfer concurrently.

- Vertical Paxos II takes the opposite approach, which results in a simpler reconfiguration experience.

When a new ballot and its leader is chosen in Paxos, the leader must communicate with acceptors from lower-numbered ballots. Since different ballot numbers have different configurations, a leader in Vertical Paxos must communicate with acceptors from past configurations. In Algorithm Vertical Paxos I, the new configuration becomes active right away. The previous configuration remains active only for storing old information, the new one also accepts new commands. When the state of the previous configuration has been transferred to the new configuration, the new leader informs the master that this has happened. The master will then tell all future leaders that they need not access that old configuration.

There is a downside to this no-downtime/concurrent-state-transfer reconfiguration approach. Suppose a new ballot b+1 is begun, but its leader fails before the state transfer from the ballot b configuration is complete. A new ballot b+2 then begins, but its leader could also fail before any state transfer occurs. This could continue happening until ballot b+42 begins, and its leader must communicate with acceptors from ballots b through b+42.

Vertical Paxos II avoids the dependence on so many configurations by having a new configuration initially inactive. The new leader notifies the master when the state transfer from the previous configuration is complete, and the master then activates the new configuration. The leader communicates only with acceptors from the new configuration and the previous active configuration. However, a number of new ballots could be started, but remain forever inactive because their leaders failed, before a new configuration becomes active and starts accepting new commands. Vertical Paxos II is especially useful for primary-backup replication.

The Primary Backup Case

In a Vertical Paxos consensus algorithm, a ballot leader must access a write quorum of its own ballot and read quorums of one or more lower-numbered ballots. Because a reconfiguration is usually performed in response to a failure, processes participating in lower-numbered ballots are more likely to have failed. We therefore want to keep read quorums small. Read and write quorums must intersect, so there is a tradeoff: making read quorums smaller requires making write quorums larger.

If we let the read quorums be as small as possible --namely, making any single acceptor a read quorum, there is only one choice left for the write quorum: the set of all acceptors. These quorums allow k-fault tolerance with only k+1 acceptors (thanks to the external master they employ -- similar idea as in chain replication really). Suppose in Vertical Paxos II we also always make the leader one of the acceptors and, upon reconfiguration, always choose the new leader from among the current acceptors. The new leader by itself is a read quorum for the previous ballot. Hence, it can perform the state transfer all by itself, with no messages. (It will still have to interact with the master and may have to exchange messages with acceptors in the new configuration.) If we call the leader the primary and all other acceptors backups, then we have a traditional primary-backup system.

What is interesting here is that this comes very close to discovering the flexible quorums idea, but doesn't generalize the idea to phase1 and phase2 quorums. That came later in 2016.

Vertical Paxos II protocol

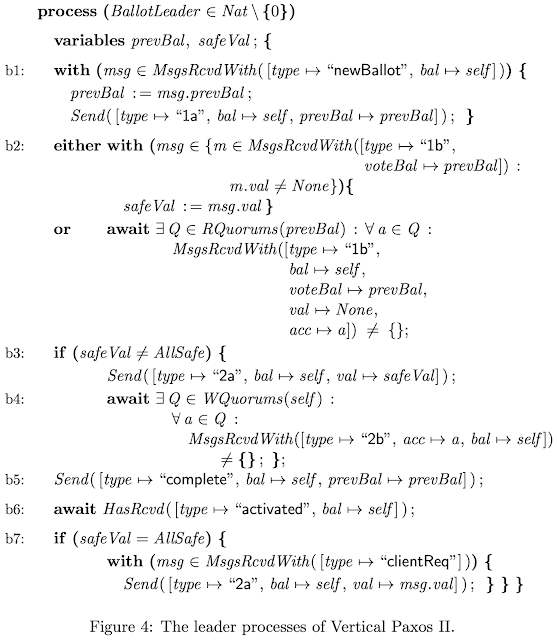

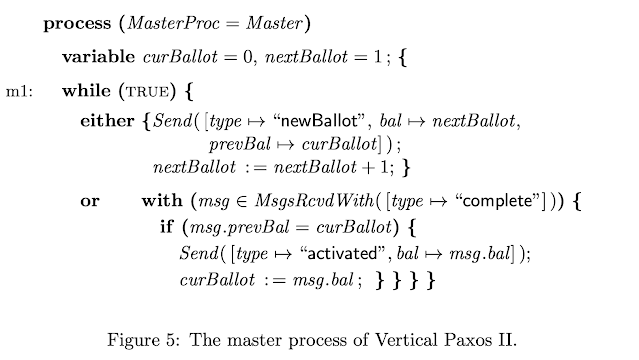

The leaders’ code is in Figure 4 and the master’s code is in Figure 5. The big simplification here is that, the leader assumes that prevBal is the next lower ballot number. It does not need to search for a complete ballot as in Vertical Paxos I. Just go to the previous active ballot and you are done.

In Label b2, in the second arm of either or, the leader discovers that no proposal was made in the last active ballot, so it can be ignored. Since the leader upped the new ballot, there won't be any proposal that could be made in that prev-active ballot. But if, from the first arm of Label b2, the leader finds that a value that was proposed, it is this leader's duty to read-repair that value for the safety of consensus, and that is done in b3 and b4. The leader then inform the master in Label b5, upon which it waits to get activated.

If the master has not activated any ballot since sending its newBallot message to b, then it activates b and sends an activated message to the leader. Upon receipt of this message, in label b7, the leader performs phase 2 if it has not already done so. If ballot b is not activated, then the actions performed have no effect because b is not a ballot number.

Comparison with other reconfiguration approaches

Vanilla MultiPaxos and Raft use log-based approach to reconfiguration. Pipelining of Paxos commands becomes a challenge for this approach. Paxos solves this by limiting the length of the pipeline window to α > 0 and only activating the new config Cnew chosen at slot i after slot i + α. Depending on the value of α, this approach either limits throughput or latency of the system. On the other hand, Raft does not impose any limitation of concurrency and proposes two solutions. The first solution is to restrict the reconfiguration operation, i.e. what can be reconfigured. For example, if each operation only adds one node or removes one node, a sequence of these operations can be scheduled to achieve arbitrary changes. The second solution is to change configuration in two phases: a union of both old and new configuration C+Cnew is proposed in the log first, and committed by the quorums combined. Only after the commit, the leader may propose the new config Cnew. During the two phases, any election or command proposal should be committed by quorum in both C and Cnew. To ensure safety during reconfiguration, all these solutions essentially prevent two configurations C and Cnew to make decision at the same time that leads to divergent system states.

Matchmaker Paxos is a realization/implementation of Vertical Paxos with a more integrated deployment to the Paxos protocol. Vertical Paxos requires an external master, which is itself implemented using state machine replication. The matchmakers in MM are analogous to that external master/Paxos-box and show that such a reconfiguration does not require a nested invocation of state machine replication. Matchmaker Paxos uses and generalizes the approach in Vertical Paxos for reconfiguration and is OK with lazy/concurrent state transfer.

Comments

I’ll start off with a synopsis - in case this isn’t being monitored anymore.

The first thing I had a question on was the 2-D array illustration that is used to differentiate between “horizontal Paxos” aka traditional (multi?) Paxos and vertical Paxos - as well as what it actually means that a configuration changes (in both horizontal and vertical Paxos). I felt like I understood what it meant in the paper (the master can select who the leader is and who the acceptors are - for a Paxos instance). But I can’t quite understand what the diagram is trying to say, which prevents me from understanding why horizontal Paxos configuration changes are restricted “horizontally” and vertical Paxos cannot make configuration changes between Paxos instances (or slots).

I also don’t understand from section 4.1 Paxos Preliminaries - where does the concept of read and write quorums come from. I understand the role of acceptors and learners in the basic Paxos algorithm but haven’t been able to make the leap to readers/writers. “An acceptor is a process that is in a read or write b-quorum, for some ballot number b.” I’m confused.

I won’t write anymore for now. Much appreciation if you are able to engage. Thank you!