High Throughput Replication with Integrated Membership Management (USENIX ATC 2022)

This paper, which appeared at USENIX ATC 2022, introduces ChainPaxos. ChainPaxos applies ideas from chain replication to the MultiPaxos protocol. (Here is an overview of chain replication if you are unfamiliar with it.)

Since ChainPaxos is a Paxos protocol, its fault-tolerance is independent of an external coordination service. This allows for continuous execution of operations during reconfigurations, and uncoupling of the system's fault-tolerance from that of an external service.

More interestingly, ChainPaxos introduces a local linearizable read operation that can be executed in any replica with no communication overhead, relying only on information used to process update operations. This read can be served by any single replica at the cost of increased latency.

ChainPaxos protocol

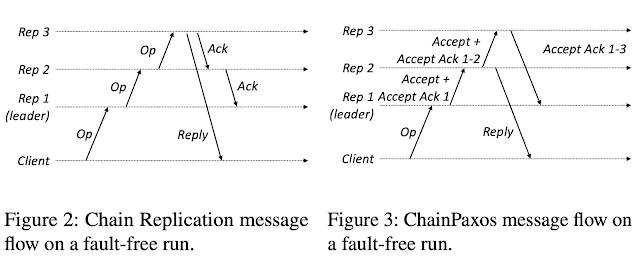

ChainPaxos is MultiPaxos that is communicating using a chain topology. Leveraging the chain, ChainPaxos combines and forwards multiple Multi-Paxos messages in a single message as shown in Figure 3.

In ChainPaxos, when an accept reaches the replica at the middle of the chain, it returns an ack to the client because a majority quorum is reached. ChainPaxos tolerates F<N/2 faults, compared to chain replication which tolerates F<N faults---provided that the Paxos configuration box it depends on has more than 2*F nodes.

As ChainPaxos is just MultiPaxos that is using a different communication pattern to convey the messages, it can fall back to the regular two phases of Paxos to handle faults. This let's us focus on reconfiguration involving adding/removing a replica as special case, in isolation from leader change.

For adding a node n to the chain, n sends a request to a replica with AddNode(n) operation as its value. The leader processes this request by starting an instance that is executed as any other instance of ChainPaxos. When the instance is decided, n is added to the tail of the chain updating the local chain configuration. That is, n is added as a log-only copy first (which helps for durability) and it catches up asynchronously and builds SMR capability over time.

Similarly, when the leader is notified that node n is suspected, it starts an instance with RemoveNode(n) operation to remove node n from the chain. When the instance is decided, n is removed from the chain, updating the variables with the local configuration of the chain.

Local linearizable read operations

In chain replication, the tail returns the read by using a lease mechanism. In an asynchronous system, linearizability can be violated, as the tail can become isolated and be excluded from the chain without knowing, while still serving outdated reads. Due to a similar reason, leased-based leader-read solutions in Paxos cannot execute read operations by contacting only the leader.

To provide linearizable reads, it is necessary to guarantee that the result of a read reflects a state that, at the moment the read is received, is at least as recent as the most recent state for which any node has returned a result (either for a read or for a write). In ChainPaxos, a node can guarantee this property by waiting for a message to loop around the entire chain, making sure that the local node is as up-to-date as any node was at the moment the message started looping around the chain.

Clients issue read operations to any replica in the chain. Upon receiving the operation, the replica locally registers that the operation depends on the lowest unseen consensus instance. For instance, if the highest instance that the replica has seen so far is 6 (regardless of it being decided or not), the read operation will depend on instance 7. Upon receiving the accept ack message to the consensus instance for which the operation depends on, the read operation is performed locally in the local committed state and the reply is sent to the client.

This trades a higher latency for the possibility of processing a read locally at any node. Under low load, write operations may be less frequent, which could delay read operations. In these cases the head of the chain issues periodic NoOP operations if no write is received, as to show to the other replicas that the head is still correct, hence the maximum latency of reads in scenarios with a low load will be controlled by the frequency of these NoOP operations.

Evaluation

Discussion

Despite all these good things going for it, ChainPaxos has a big drawback which makes it unusable for many production deployments. Do you want to make a guess at it? Stop now and think about it. I will explain it at the end of this section, so as not to spoil the answer in the immediate next paragraph.

Let's discuss the two big ideas in ChainPaxos now.

The idea of overlaying slightly different communication pattern over Paxos (which is safe in any case being oblivious to the communication pattern used) is an idea that keeps on giving. In previous work, we had also used this successfully several times in the past, including the PigPaxos paper and Compartmentalized Paxos paper. It would be nice to see how PigPaxos would compare with ChainPaxos.

The local read idea of ChainPaxos is really nice. It effectively uses a barrier to figure out when it is safe for strong-consistency to reply with the read. In 2019, we had presented Paxos Quorum Reads, an asynchrony-safe non-leader read solution that improves throughput significantly. The ChainPaxos paper does not include a citation to PQR, but the barrier ideas are similar. The nice thing in ChainPaxos is it is done from a single replica, whereas PQR requires contacting a majority of replicas. On the other hand, PQR read filters out worst-case latency leveraging quorum, whereas ChainPaxos read has to incur the latency of the full chain/ring traversal and suffers from worst case latency of a slow node.

Yes, this is the big drawback which makes ChainPaxos unusable for many production deployments: worst of N nodes tail-latency. Quorum techniques are very attractive because they filter the slow nodes, and let's the system to move on with the responses of the fastest nodes. ChainPaxos uses a chain, and is bound to walk with the feet of the slowest replica in the system for reads and writes. So ChainPaxos is particularly vulnerable to gray failures and worst-case tail-latencies.

Comments