High availability in cheap distributed key value storage

This paper is authored by Thomas Kim, Daniel Lin Kit, Gregory Ganger, Michael Kaminsky, and David Andersen. It appeared in SOCC 2020.

The paper talks about using NVMM for building a distributed key value store. NVMMs are a new technology. They are slowly rolling into the datacenters, but there are still questions about their performance and how many writes it could handle before a write wear.

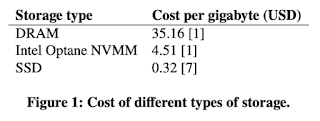

NVMMs have very low latency comparable to DRAM, yet they are 10 times cheaper. Awesome, right? Unfortunately they don't have the same high bandwidth as DRAM or SSDs. Also, they are still not anywhere as cheap as SSDs, and it may not be affordable to want to build all NVMM key-value stores.

Before reading this paper, it is important to understand that this is all about money, money, money. The cost of NVMM and SSDs influences the design decisions, and lead to this non-straightforward heterogeneous design. If cost was not an issue, we could even have DRAMs for all replicas in K-V store, easy peasy. But we see that we can save 10 times more money, if instead we use NVMMs instead. Wait, we can save even more money if we use NVMMs as primaries and SSDs as backups. This would, of course, complicate the recovery from the NVMM primary failure, given the heterogeneous nature of the system. It would be hard to construct a recovery solution that can provide small time-to-recovery (TTR) and that can provide same low-latency guarantees as the recovery is on-going.

But the savings!! If this solution is to be deployed on thousands of nodes, the savings will be huge, and would justify a more complex solution.

The setup

With the above motivation, the paper proposes a heterogeneous non-volatile distributed key-value storage system called CANDstore (pronounced as candy-store), that provides strong consistency, high availability, and low-latency during both fault-free and fault-recovery modes.

CANDstore employs three types of nodes. The suggested configuration is 1 primary and 2 backup nodes. But, what about the witness node?

The witness node is actually optional in fault-free operation, as in Cheap Paxos. "Cheap Paxos runs MultiPaxos protocol with a fixed quorum of f+1 acceptors. Upon suspected failure of an acceptor in the fixed quorum, an auxiliary acceptor becomes active so that there are once again f+1 responsive acceptors that can form a quorum. This new quorum completes the execution of any outstanding ballots and reconfigures the system replacing the suspected acceptor with a fresh one, returning the system to normal execution."

The algorithm

So this is how the protocol works.

Note that, if the primary can get the quorum only via backup nodes, it doesn't need the witness ack at all. Witness does not store actual data, and only has the operation log with placeholders for data. This way it is possible for multiple primary-replica deployments to share the same node as a witness. (The paper doesn't mention this explicitly, but since it does not count the cost of the witness as part of the primary-backup deployment cost, this setup is implied.)

Fault-tolerance

Primary recovery is involved. When the primary fails, CANDstore does not promote one of the SSD backup nodes to be the new primary. Instead, it recruits the witness to become the new primary. Why? Since the witness node is all NVMM in contrast to the backup nodes, it is more deserving to serve as primary as it can provide the low latency read response the system likes to guarantee.

Since the witness has no data, it has to learn it from the backup, but it does it in an online and on-the-fly manner banking on the skew in key-accesses. It copies the "hot" keys first from the backup[s]. This allows it to start serving hot reads immediately. If a read to a key not yet learned arrives, it does a priority pull from the backup to respond. Cold keys are gradually learned from the backup[s]. In other words, the new primary fakes it until it makes it. The paper argues that the traditional offline recovery approaches are not suitable here, and this online recovery approach speeds up TTR by up to 4.5–10.5x, where

TTR is defined as the duration the system is unable to meet its service level objectives. By faking till making the complete data recovery at the new primary, the system shortens TTR according to this new definition of TTR.

Tolerating backup failures is straightforward. When you have 1 primary and 2 backup nodes and F=1, losing one backup is not a problem. However, to rejuvenate a new backup should be prepared and added. The node can be added to the cluster using first as a non-voting node, and only after it is up to date will it be promoted to a voting node and join the write quorum.

Witness failure is the easiest case. If a witness fails, it is not necessary for the system to remove the failed witness from the cluster before making additional progress. To restore the desired level of failure tolerance quickly (remember the witness acts as extra acceptor in case of backup failure ala cheap Paxos), it may be desirable to allocate and add a new witness node to the cluster.

Here is a video of a presentation of the paper in our Zoom distributed systems reading group.

Comments