Scalable state machine replication

This paper is by Carlos Eduardo Bezerra, Fernando Pedone, and Robbert van Renesse, and it appeared in DSN 2014.

This paper presents a non-Paxos, non-consensus state machine replication (SMR) solution. When I see a non-Paxos solution to SMR, I always ask, "Ok, but where is Paxos hiding here?" It turns out that, in this SMR solution, Paxos is hiding in the atomic multicast. The SMR protocol described in the paper assumes an atomic multicast service feeds it commands. Atomic multicast is a problem equivalent to distributed consensus, and in the evaluation we see that they implement the atomic multicast service using Multi-Ring Paxos.

"Atomic multicast ensures that (i) if a server delivers m, then all correct servers deliver m (agreement); (ii) if a correct process multicasts m to groups, then all correct servers in every group deliver m (validity); and (iii) relation < is acyclic (order). The order property implies that if s and r deliver messages m and m′, then they deliver them in the same order."

The paper says SMR is not scalable and that partitioning the SMR will help. However, the requirement is that the partitioned SMR must still behave as one SMR. In other words, it is possible for a command to touch multiple partitions at once. Hoot, hoot!! Distributed transactions baby!

Well, not quite. Here the multipartition operations do not abort, because each partition is amply replicated, hence available, and the order of operations is already determined by the atomic multicast. So the problem is simple.
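To make the "order is already determined" point concrete, here is a minimal sketch. It is not the paper's implementation: `ToyAtomicMulticast` is a hypothetical single-process sequencer standing in for the real atomic multicast service, and `key_partition`, `Replica`, and the command format are names I made up for illustration. The only thing it shows is that every replica in part(C) sees C at the same position in one global order, so there is nothing left to vote on or abort.

```python
from dataclasses import dataclass, field


def key_partition(key):
    # Hypothetical placement rule: key "x..." lives in Px, "y..." in Py.
    return "P" + key[0]


@dataclass
class Replica:
    partition: str
    store: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def deliver(self, seq, cmd):
        # Record the global sequence number, then apply only local keys.
        self.log.append(seq)
        for key, value in cmd["writes"].items():
            if key_partition(key) == self.partition:
                self.store[key] = value


class ToyAtomicMulticast:
    """Assumption: one in-process sequencer replaces the real service."""

    def __init__(self, replicas):
        self.replicas = replicas
        self.next_seq = 0

    def multicast(self, cmd):
        seq = self.next_seq
        self.next_seq += 1
        # part(C): the partitions that hold the keys the command touches.
        dests = {key_partition(k) for k in cmd["writes"]}
        for r in self.replicas:
            if r.partition in dests:
                r.deliver(seq, cmd)
        return seq


replicas = [Replica("Px"), Replica("Px"), Replica("Py"), Replica("Py")]
amc = ToyAtomicMulticast(replicas)
amc.multicast({"writes": {"x": 1}})          # Cx, only Px
amc.multicast({"writes": {"x": 2, "y": 2}})  # Cxy, both partitions
amc.multicast({"writes": {"y": 3}})          # Cy, only Py
```

After these three commands, both Px replicas hold the same log and store, and so do both Py replicas; the multipartition command sits at the same sequence number in every log that contains it.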

It is simple, but not trivial. It is still possible to violate strict serializability, as case (a) in the figure shows. All that is required to solve this problem is to add some completion coordination/synchronization via signaling. After delivering command C, servers in each partition send a signal(C) message to servers in the other partitions in part(C). Before finishing the execution of C, each server must receive a signal(C) message from at least one server in every other partition that executes C, as shown in case (b) on the right-hand side of the figure. Note that the outcome of each command execution is the same as in case (a), but the executions of Cx, Cy, and Cxy, as seen by clients, now overlap in time with one another. By delaying the completion of Cxy in partition Px, we are able to avoid the real-time precedence between Cxy and Cy and preserve strict serializability. (For some reason the paper calls this linearizability, but this is more than linearizability because there is a real-time order component and multiple objects are involved.)

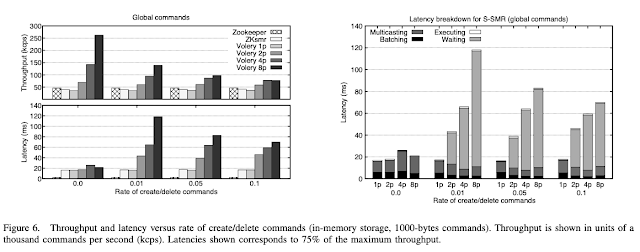
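The signaling rule can be sketched in a few lines. This is my own toy rendering under loud assumptions: `Server`, `on_deliver`, `on_signal`, and `try_complete` are hypothetical names, and direct method calls stand in for network messages. A server completes C only after it has delivered C and has seen signal(C) from every other partition in part(C).

```python
class Server:
    def __init__(self, name, partition):
        self.name = name
        self.partition = partition
        self.delivered = {}  # command id -> part(C)
        self.signals = {}    # command id -> partitions that signalled
        self.completed = []  # commands whose execution has finished

    def on_deliver(self, cmd_id, parts, network):
        self.delivered[cmd_id] = set(parts)
        # Send signal(C) to servers in the other partitions in part(C).
        # Assumption: a synchronous call models the message send.
        for peer in network:
            if peer.partition in parts and peer.partition != self.partition:
                peer.on_signal(cmd_id, self.partition)
        self.try_complete(cmd_id)

    def on_signal(self, cmd_id, from_partition):
        self.signals.setdefault(cmd_id, set()).add(from_partition)
        self.try_complete(cmd_id)

    def try_complete(self, cmd_id):
        if cmd_id not in self.delivered or cmd_id in self.completed:
            return
        # Need a signal from at least one server in every other partition.
        needed = self.delivered[cmd_id] - {self.partition}
        if needed <= self.signals.get(cmd_id, set()):
            self.completed.append(cmd_id)


sx = Server("sx", "Px")
sy = Server("sy", "Py")
network = [sx, sy]

sx.on_deliver("Cxy", {"Px", "Py"}, network)
blocked_on_px = list(sx.completed)  # Px has not heard from Py yet

sy.on_deliver("Cxy", {"Px", "Py"}, network)  # Py delivers and signals Px
sx.on_deliver("Cx", {"Px"}, network)         # single-partition: no waiting
```

In this run Cxy stays incomplete at Px until Py has delivered it too, which is exactly the delay that removes the real-time precedence between Cxy and Cy. Single-partition commands need no signals and finish immediately.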

OK, what about the performance of this system? When the paper says scalable SMR, I think it primarily means in terms of parameter/storage space, not in terms of performance. When you partition into K partitions, you avoid the storage limitation of all parameters/log fitting in the same node, as you gain K times more space. But the evaluation makes it clear that you pay this back in blood and tears. Multipartition operations mean a lot of communication needs to be done for coordination among partitions. I think performing the ordering via atomic multicast in advance at a lower layer makes the performance of this thing suffer very badly. The first set of evaluations is only with local operations, and the benefit of adding 3 partitions returns only 1.5x more throughput. This is without any multipartition operations. So the fair comparison for this case would be not with one ZooKeeper deployment, but with three ZooKeeper groups where each operation is forwarded to the group responsible for it.

When multipartition operations are involved, even for only up to 10% of the operations, the latency skyrockets and throughput plummets. Clearly this SMR solution is not a WAN thing. It involves too much work, and it barely works in a LAN.
