Modeling Streamlet in TLA+

I had provided an overview of the Streamlet blockchain protocol in my last post, please read that before reading this post on modeling Streamlet in TLA+. Also if you want to get an idea about TLA+, this may be a good post to read.

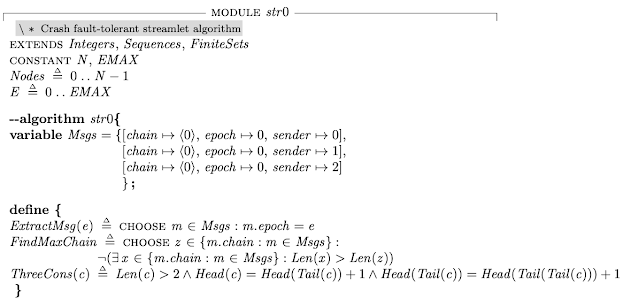

Here I model the crash fault-tolerant version of Streamlet provided in Appendix A of the paper. Modeling Byzantine nodes is very hard: How do you simulate arbitrary behavior of the nodes? How do you then hope to model check it? So I stick with the crash fault-tolerant version which is very similar to the Byzantine fault-tolerant version of the protocol. This version only considers crash faults and in turn it can tolerate up to a minority of node failures.

The reason I started modeling was because I was suspicious how there was no need for using the epoch number knowledge in the protocol or the proof of safety in an asynchronous environment. The protocol employs only the longest chain rule. Longest chain does not necessarily mean the chain with the highest epoch. It cares about how many notarized blocks are there in the chain. As far as the epoch numbers is concerned, the only thing the protocol cares about is to see three consecutive epoch blocks for finalization. The protocol doesn't say about monotonically increasing requirement for epoch numbers in an asynchronous environment (again except for the finality rule which requires 3 consecutive epoch blocks).

OK, let's get to it. For this modeling exercise, I captured my thought process while developing the model, and was able to note down some of my mistakes as well. So I will present this as a dev-log style. I will add in-line comments in parenthesis about how some of my initial decisions evolved/changed over time.

I was rusty with TLA+ as I hadn't modeled anything in TLA+ for maybe 6 months. But I was able to get this working in 3 hours or so.

Gathering my thoughts before modeling

I need a chain, and I can model that as a tuple. (I was first thinking the chain will grow by appending new value/block to its tail. But then when modeling the FindMaxNotarizedChain I need to sometimes chop the latest value/block if it is not notarized. So I reversed the direction of the chain making it grow by adding newest value/block to the head, and chopped the latest block by invoking Tail function on the tuple.)

For communication, the simplest thing is to use a shared whiteboard Message modeled as a set. Processes broadcast messages by adding their message to the Message Set, i.e., Msgs. To read messages, processes can use filtering on the Message Set to retrieve all matching messages. The down side is that all nodes will see the same message set, it will be like atomic delivery to all processes when a Message is added to the Message Set. In other words, if process j sees message m, any process k accessing the Message Set after j is guaranteed to see m as well. But this is OK for the first model, I can relax this assumption later on. This will also help keep the model checking time short.

Should I have two whiteboards, one for proposals, and one for votes? I can get away with one whiteboard. The vote messages can also be with the same message format as the propose messages. A reasonable format is [chain, e, sender-id].

I just need one type of process, because there is no distinguished leader process. There will be two actions to check.

The propose action would be something like this.

if epoch % N = myid then

find parent chain to be the longest notarized chain seen so far by searching the whiteboard with a macro.

Add block to the chain, and

bcast <chain, e, sender-id>

The bcast would be done just by adding this message to the Msgs set for anyone to see.

The vote action would be something like this.

If len(chain+e)> len(any notarized chain)

then bcast yes for <chain, e, id>.

Is there an order to the votes? Not necessarily. If you find a proposal for an epoch you pick, you can send a vote for it. You can even send duplicate vote, the Msgs Set will ignore duplicate votes, because it is a set.

What should the epoch progression rule be? For safety verification, I am checking with and asynchronous environment. The protocol doesn't even have lock-step rounds requirement. A node does not wait for hearing from other nodes to increase its epoch and propose. The only part where some constraint is imposed is when proposing, you have to choose your parent as notarized chain, and that doesn't impose constraint on how epochs are chosen. What about this?

While (TRUE)

with e \in Epochs

if e%n = self, Propose(e)

else if \E propose for e in Msgs then Vote(e)

So a node picks any random e from Epochs, this can make us jump too far in future but it is ok, we want to model check this with any order of epochs in a completely asynchronous environment. This is a bold way to test the protocol, isn't it?

Delving a layer deeper

Finding the notarized chain will require writing some macros that filter messages from the Message Set, i.e. Msgs. I suspect the hard part of the protocol is finding the longest notarized chain to attach to. And while we are talking about chains in Msgs, in the initial state Msgs contain the origin block for the nodes to build on.

What are the variables maintained at each process? I need to have nChain: the maximum notarized chain length a process has seen. I guess that is it, the process does not need to maintain any other state. It may even be possible to derive this from Msgs and use it as such without assigning it to a variable. But assigning to variable nChain would be good for me to have something to watch for in the debug mode, otherwise it would be very hard to follow what is going on just by ogling Msgs.

FindMaxChain(e) is tricky to write. Then for finding max notarized chain, I need to also check for majority senders endorsing the chain.

FindMaxChain== CHOOSE z \in {m.chain: m \in S}:

~(\E x \in {m.chain: m \in S}: Len(x)>Len(z));

That was not bad, nut FindMaxNotarizedChain will be hard.

macro FindMaxNotarizedChain(e) {

check if the MaxChain is notarized;

else maxChain= Tail(FindMaxChain);

}

This checks if the MaxChain is notarized, if not it is because the last block is not notarized; this is because the previous blocks were guaranteed to be notarized before proposing the last block. If that is the case we chop of the latest block, and return the rest of the chain. But this is actually incomplete. Consider this. There may be two different notarized chains c1 and c2 both with length=l. Furthermore, our FindMaxChain returns c1, whose latest block is not notarized, so we chop off the head and return it. But unknown to use the chain c2 with length l was notarized completely including the latest block.

We need to look at all chains with Len(FindMaxChain) one by one, and iterate on them. Jeez... But for now I can just look at one of them, and if the latest block is still not notarized, chop it and only return the Tail of the chain. This is not the right, complete solution. I don't like this much, but this will be a liveness problem at worst, and not a safety problem, so I can live with this for my first model.

How can I model the finality check?

if \E chain with 3 consecutive ending in Msgs, and \E chain' with 3 consecutive ending in Msgs, and chain' is shorter than chain,

then chain'-chopped-tail is a prefix of chain-chopped-tail.

The model

Here is the full first draft model. https://github.com/muratdem/PlusCal-examples/blob/master/Streamlet/str0.tla

I won't show them here, but if you go to my TLA+ file, you will notice at the end of the model there are a lot of Bait/fake Invariants I came up with. These are just so I can get TLA to spit out an execution trace, so I can check whether the model is doing anything at all, and if so doing things according to my expectations. Thanks to them I fixed many bugs like waiting for a propose message for an epoch before voting on it, insisting for the same epoch number for the cardinality check in FindMaxNotarizedChain, and getting (Len(ExtractMsg(e).chain) = Len(nChain)+1) right in Vote macro.

Here is the Consistency property we were interested in checking. It says that if there exists two processes i and j, each one with a chain that ends in three consecutive epoch blocks, then the shorter chain (assume it is nchain[i] WLOG) will be a prefix of the longer chain. In other words, there is finality upto the the last block of the chain.

Consistency == \A i,j \in Nodes: ThreeCons(nChain[i]) /\ ThreeCons(nChain[j]) /\ Len(nChain[i])=< Len(nChain[j])

=> \A k \in 1..Len(nChain[i]): nChain[i][k]=nChain[j][Len(nChain[j])-Len(nChain[i])+k]

The verdict: Yes, consistency works! Even though we do not check for monotonicity of epochs in voting or proposing, consistency (i.e., safety) still works. Here is a trace that satisfies consistency and violates monotonicity of epochs due to asynchronous execution. I challenged the TLA+ to give me this counterexample using the BaitMonotonicity invariant. (Recall that we hold the chain in reverse order. So with the below, I am challenging the model checker to give me a chain that violates the monotonicity condition in a notarized chain. I highlighted the non-monotonic chain)

\A i \in Nodes: ThreeCons(nChain[i]) => \A k \in 2..Len(nChain[i]): nChain[i][k]< nChain[i][k-1]

\A i \in Nodes: ThreeCons(nChain[i]) => \A k \in 2..Len(nChain[i]): nChain[i][k]< nChain[i][k-1]

I don't know if you noticed but my condition is actually a bit stronger than what is in the theorem. I am taking the last block also into account. This stronger version still checks out, I think because all nodes see all sent messages atomically/unequivocally thanks to the shared whiteboard Msgs set. As I wrote earlier, in this model, if process j sees message m, any process k accessing the Message Set after j is guaranteed to see m as well. In a future model, I will add per process inboxes to address this, and check to see how this stronger variant can be violated there.

In the next model I can also add monotonicity of epochs condition by requiring that if a node voted for epoch e block, it shouldn't vote for any proposal with epoch less than e. This is a local condition to check at each process, and it doesn't rely on synchrony assumptions anyway. So it is reasonable to add this in the next model, and explore how it can makes the model simpler (I am hoping this will solve the incompleteness problem of FindMaxNotarizedChain) and more efficient to model check.

Comments

Now thinking about it, I guess it makes sense because it doesn't really matter what the actual epoch number is (except for liveness); it sounds like based on your work we can replace each epoch number with some random string with no ill effect on consistency.

A different version of the protocol would be to vote for not just (one of the) longest notarized chains, but also to tie-break in favor of the larger epoch number (if there are multiple longest notarized chains). Finality then requires only two blocks in a row, but I suspect epoch monotonicity is needed there.

One interesting find is that Streamlet terminates in 4 synchronous epochs, and not 5, in all the cases I checked, and it seems to me that this is true in general.

Also, to respond to Benjamin Chan's comment:

* How would you define the finalization rule with unordered epochs?

* I agree that tie-breaking on the larger epoch would be beneficial, as it would make the protocol finalize new blocks faster in some cases. However, finality would still require 3 blocks in a row. It's easy to try it by commenting out the second conjunct in the definition of `Final` in my first spec. Then TLC finds a safety violation with 5 epochs, and breaking ties using larger epoch numbers wouldn't eliminate that trace.