The FuzzyLog: A partially ordered shared log (OSDI'18)

The paper does not suggest many novel ideas or algorithms, but rather shows a nice amalgamation of existing ideas and algorithms for providing a more scalable version of a shared log across multiple datacenters.

Having a shared log, and funneling all updates through a globally shared log provides simplicity for building fault-tolerant consistent distributed systems. Tango paper from SOSP'13, upon which they build here, argued for the simplicity benefits of such a system. Recently Jay Kreps wrote a nice and accessible explanation of the benefits of using globally shared logs for building distributed systems.

Despite the simplicity it provides, maintaining a shared log with a system-wide total order is impractical, because it is

- expensive: At large scale, the single sequencer will be a bottleneck for the throughput. Also having a single sequencer in wide area networks (WANs) adds to the latency prohibitively.

- often impossible: A network partition can cut off clients from the sequencer or a required quorum of the servers implementing the log.

- and typically unnecessary: updates to disjoint data (e.g., different keys in a map) do not need to be ordered, while updates that touch the same data may commute because the application requires weak consistency guarantees (e.g., causal consistency).

To address this problem, this paper explores how to provide the simplicity of a shared log without imposing a total order.

FuzzyLog

The FuzzyLog uses an expressive partial ordering API.

- Applications partition their state across logical data shards, such that updates against different shards are processed concurrently.

- When deployed across geographical regions, applications weaken consistency to avoid synchronous cross-region coordination; updates across regions even to the same logical data partition can occur concurrently.

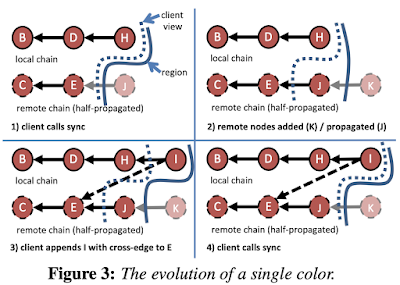

Clients interact only with their own region's local copy of the DAG; they can modify this copy by appending to their own region's chain for a color. The client can synchronize with a single color, playing forward new nodes in the local region's copy of that color in a reverse topological sort order of the DAG. A node can be appended atomically to multiple colors, representing a transactional update across data shards.

To realize the FuzzyLog API over a collection of in-memory storage servers, the paper presents Dapple. Dapple scales throughput linearly by storing each color on a different replica set of servers, so that appends to a single color execute in a single phase, while appends that span colors execute in two phases (in the absence of failures) that only involve the respective replica sets. Dapple achieves this via a fault-tolerant ordering algorithm that builds on Skeen's algorithm and adds recovery protocols to it. The algorithm provides linear scaling for single-color appends, serializable isolation for multi-color appends, and failure atomicity.

In Figure 5, client C1 sends back a max timestamp of 2.2 to servers S1 and S2. When a chainserver receives this message, it moves the multi-append from the pending queue to a delivery queue; it then waits until there is no other multi-append in the pending queue with a lower returned timestamp, or in the delivery queue with a lower max timestamp (i.e., no other multi-append that could conceivably be assigned a lower max timestamp). Once this condition is true, the multi-append is removed from the delivery queue and processed. In Figure 5, server S1 receives a phase 2 message with a max timestamp of 3.1 from client C2, but does not respond immediately since it previously responded to a phase 1 message from client C1 with a timestamp of 2.1. Once C1 sends a phase 2 message with a max timestamp of 2.2, S1 knows the ordering for both outstanding multi-appends and can respond to both C1 and C2.

Evaluation

Published numbers for sequencers in fully functional systems include: roughly 200K ops/sec (CORFU), 250K ops/sec (NOPaxos), and 600K ops/sec (Tango).

They implement FuzzyLog in C++ and evaluate to compare with Tango. (It seems like there is an implementation available here.)

Discussion

The paper does not reference and discuss a comparison with Kafka, but Kafka is very relevant, and it is prior work by several years, and used in industry extensively. Kafka also provides a total order per shard. I guess one difference is FuzzyLog uses Skeen's algorithm to enable updates across shards. This algorithm shouldn't be too hard to implement in Kafka either for cross shard updates.

The use of Skeen's algorithm was beneficial for getting total order implemented over replicas in a lightweight manner, by employing the clients to work as part of the system. The original algorithm has many assumptions, like clients don't die, replicas don't die, nevertheless it provides a lightweight way of getting updates linearized across multiple shards. But then the question becomes, are we punting too much work and responsibility to the clients.

Skeen's algorithm is an old algorithm. This goes back to the famous causally and totally ordered communication debate from 1993. Cheriton and Skeen (from Stanford University) argued for a lightweight system that punts the cost of causal and total order to the clients who are interested in it.

Ken Birman (Cornell university) took this as an attack to the virtual synchrony approach he has been implementing in his systems. He wrote a response.

Then Cheriton and Skeen wrote a response to the response, and Birman wrote a response to the response to the response. This post summarizes the first two exchanges and cuts to the gist of the issue.

This seems founded in a more general debate --should systems developers aim for efficiency and performance first, giving application developers total control, but leaving them to layer safety accordingly, or should they apply an unknown cost to all users, making strong semantics an indelible part of the system?

The FuzzyLog clearly takes the Cheriton & Skeen's side.

Comments