Hermes: A fast fault-tolerant and linearizable replication protocol

This paper (from ASPLOS'20 which was held remotely) is by Antonios Katsarakis, Vasilis Gavrielatos, M. R. Siavash Katebzadeh, Arpit Joshi, Aleksandar Dragojevic, Boris Grot, Vijay Nagarajan. The paper has its own website, where you can get to the video presentation, slides, and code.

Introduction

Hermes is a replication protocol that guarantees linearizability. It enables local reads: a client can execute a read locally on any of the replicas. Hermes enables any replica to coordinate a write to a key, and supports concurrent writes to different keys quickly. Too good to be true? You have to read the protocol section below to see how these are achieved.

But if you are a distributed systems expert, here is my shortcut explanation of the protocol. Hermes is simply chain replication (with CRAQ optimization) deployed with the following "chain" topology:

- The head and tail nodes of the chain are colocated in one node, called the coordinator

- The intermediate nodes of the "chain" are all parallel-connected (rather than serial) to the coordinator

In addition to solving the latency problem in chain replication by using parallel-wiring, Hermes also allows multiple coordinators to help balance the coordinating load across nodes. Any node can be a coordinator for any key. Thanks to the logical clock timestamping (using node-ids as tie-breakers), the writes are total-ordered in Hermes. Since higher timestamped writes invalidate lower timestamped writes, the result of concurrent writes will be the same at each node, and linearizability is achieved even with local reads.

I summarize Hermes below, and then discuss how Hermes compares with Paxos-based protocols.

Protocol

Writes can be initiated by any replica:

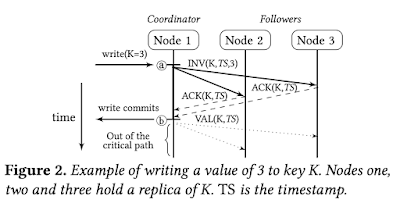

- the replica initiating the write (called coordinator) broadcasts an Invalidation (INV) message to the rest of the replicas (called followers) and waits on acknowledgments (ACKs)

- once all ACKs have been received, the write completes via a Validation (VAL) message broadcast by the coordinator replica

A read request can be served locally on any operational replica (i.e., one with a lease from the membership service). The replica returns the local value of the requested key only if it is in the Valid state. When an INV message for a key is received, the replica is placed in an Invalid state for that key, meaning that reads to the key cannot be served by the replica.
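Here is a minimal single-process sketch of the write and read paths described above. The `Replica` class and the `broadcast_inv` / `broadcast_val` helpers are hypothetical names of mine, not the paper's code; message passing is replaced by direct method calls, and marking the coordinator's own copy Invalid while the write is in flight is a simplification.

```python
from dataclasses import dataclass, field

VALID, INVALID = "Valid", "Invalid"


@dataclass
class Replica:
    node_id: int
    store: dict = field(default_factory=dict)  # key -> (state, value)

    def on_inv(self, key, value):
        # An INV marks the key Invalid, so local reads to it stall.
        self.store[key] = (INVALID, value)
        return "ACK"

    def on_val(self, key):
        # A VAL makes the key readable again with the new value.
        _, value = self.store[key]
        self.store[key] = (VALID, value)

    def read(self, key):
        # Returns (served, value); served is False while the key is Invalid.
        state, value = self.store.get(key, (VALID, None))
        return (state == VALID, value if state == VALID else None)


def broadcast_inv(coordinator, followers, key, value):
    # Phase 1: invalidate everywhere and collect ACKs from all followers.
    coordinator.on_inv(key, value)
    acks = [f.on_inv(key, value) for f in followers]
    return acks.count("ACK") == len(followers)


def broadcast_val(coordinator, followers, key):
    # Phase 2: once every ACK is in, validate the key on all replicas.
    for replica in [coordinator, *followers]:
        replica.on_val(key)


nodes = [Replica(i) for i in range(3)]
coordinator, followers = nodes[0], nodes[1:]
all_acked = broadcast_inv(coordinator, followers, "x", 42)
stalled = followers[0].read("x")   # key is Invalid here, so the read is not served
if all_acked:
    broadcast_val(coordinator, followers, "x")
served = followers[1].read("x")    # key is Valid again, served locally
```

The point of splitting the two phases is to make the window visible in which a follower refuses to serve the key.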

Membership service

In the write operation described above, the coordinator waits to hear an ACK from each replica. If a replica crashes, the write gets stuck forever, right? To address this issue, crashed nodes need to be detected.

Instead of making each node have a failure detector, which is hard to keep consistent, Hermes (similar to chain replication) employs an external Paxos-backed configuration/membership service that decides on the health of the nodes. This service acts as a single consistent (but not necessarily perfect) failure detector for the replicas. It becomes the sole source of "truth/perspective": while it can be mistaken in its judgment, it keeps every replica in Hermes consistent with respect to their view of which nodes are healthy and part of the protocol.

This Paxos-powered membership/configuration service changes configuration/view when needed, and at each view-change it increases the epoch number. This keeps Hermes safe (and eventually live) in a partially synchronous environment ---with bouts of asynchrony.

Well, there is still the problem of lease safety at the replicas. Each replica needs a lease from the membership service for this to work (again as in chain replication). See the fault-tolerance section below for how this is handled.

Concurrent writes

Hermes allows writes to different keys to proceed in parallel to improve throughput. As for concurrent writes to the same key, invalidations plus logical timestamps impose a total order on these writes. This prevents conflicts and aborts, and ensures that the writes are correctly linearized at the replicas.
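To make the total order concrete, here is a small sketch that assumes a timestamp is a (version, cid) pair compared lexicographically, with the coordinator's node id as the tie-breaker. The `Timestamp` type and `apply_inv` function are illustrative names, not taken from the Hermes code.

```python
from typing import NamedTuple


class Timestamp(NamedTuple):
    version: int
    cid: int  # coordinator node id, used only to break version ties


def apply_inv(local, incoming):
    """Both arguments are (Timestamp, value) pairs.

    A replica keeps whichever write carries the higher timestamp, so the
    outcome does not depend on the order in which INVs arrive.
    """
    return incoming if incoming[0] > local[0] else local


start = (Timestamp(0, 0), None)
w1 = (Timestamp(1, 1), "from-node-1")  # two concurrent writes to the same key
w2 = (Timestamp(1, 2), "from-node-2")

replica_a = apply_inv(apply_inv(start, w1), w2)  # sees w1 first, then w2
replica_b = apply_inv(apply_inv(start, w2), w1)  # sees w2 first, then w1
```

Both replicas end up holding `w2`, since the versions tie and node 2 has the higher id.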

A coordinator node issues a write to a key only if it is in the Valid state; otherwise the write is stalled. This doesn't seem to be necessary for safety, because the higher timestamped writes will preempt the lower timestamped writes. So why does Hermes do this? I think they do this because it lets replicas see the writes conclude, even when there is a deluge of writes to the same key. This may in turn help alleviate the read starvation caused by a constant flood of writes to the same key. I found this in the slack channel for ASPLOS'20 from the first author:

> It is safe for a read that initially found the object invalidated with version timestamp 2 and then subsequently invalidated with a version timestamp 3 to get serialized and return the version 2 value. Intuitively this is partly safe because a write with version 3 could not have started unless the write with version 2 has been committed.

This assumes no epoch change, I presume. A couple of sections below, I will discuss our Paxos-Quorum-Reads technique, which does a similar thing, but without blocking the writes to wait for earlier writes to finish, and without requiring leases or a configuration/membership service.

Read-modify-write updates

Linearizability is not the whole story. You can get linearizability in Cassandra, using the ABD algorithm, which is not even subject to FLP. But the problem is that ABD is not consensus, and on its own it is not enough for maintaining state machine replication.

Hermes is trying to do more and achieve state machine replication. It forces the replicas to have the same log in the same order (for the same key). The paper also shows how Hermes can support read-modify-write (RMW) updates, an atomic execution of a read followed by a write to a key (e.g., a compare-and-swap to acquire a lock).

An RMW update in Hermes is executed similarly to a write, but it is conflicting. An RMW which is concurrently executed with another update operation to the same key may get aborted. Hermes commits an RMW if and only if the RMW has the highest timestamp amongst any concurrent updates to that key. Moreover, it purposefully assigns higher timestamps to writes compared to their concurrent RMWs. As a result, any write racing with an RMW to a given key is guaranteed to have a higher timestamp, thus safely aborting the RMW. Meanwhile, if only RMW updates are racing, the RMW with the highest node id will commit, and the rest will abort.

A recent paper, Gryff in NSDI'20, also investigates this problem. It uses the ABD algorithm for read-write registers, and EPaxos in conjunction with consensus-after-register timestamps (carstamps) for the RMW updates. In Gryff, the RMW operations do not get aborted; they just get ordered correctly by EPaxos even after a conflict.
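Going back to the Hermes RMW rule (not Gryff's), here is a small sketch of how "writes get higher timestamps than concurrent RMWs" can be arranged. It assumes writes bump the version by 2 and RMWs by 1; those step sizes, and the names `next_ts` and `resolve`, are assumptions of this sketch rather than something stated in the summary above.

```python
WRITE_STEP, RMW_STEP = 2, 1  # assumed increments; any WRITE_STEP > RMW_STEP works here


def next_ts(base_version, node_id, is_rmw):
    """Timestamp a new update issued on top of base_version by node_id."""
    step = RMW_STEP if is_rmw else WRITE_STEP
    return (base_version + step, node_id)


def resolve(updates):
    """updates: list of (timestamp, kind) racing on one key, kind is "write" or "rmw".

    Returns the highest timestamp and the list of RMW timestamps that abort.
    An RMW commits only if it holds the highest timestamp in the race.
    """
    winner = max(ts for ts, _ in updates)
    aborted = [ts for ts, kind in updates if kind == "rmw" and ts != winner]
    return winner, aborted


base = 4
# A write from node 1 races with an RMW from node 3 on the same base version.
mixed = resolve([(next_ts(base, 1, False), "write"), (next_ts(base, 3, True), "rmw")])
# Two RMWs race: versions tie, so the higher node id wins.
rmw_only = resolve([(next_ts(base, 2, True), "rmw"), (next_ts(base, 3, True), "rmw")])
```

In the mixed race the write wins despite the lower node id, because its version is higher; losing writes are simply superseded, while losing RMWs are reported as aborted.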

While we are on the topic of related work, I wonder how Hermes compares with RIFL: Implementing Linearizability at Large Scale and Low Latency (SOSP'15). The paper does not cite RIFL, but it would be nice to compare and contrast the two protocols.

Fault-tolerance

Hermes seamlessly recovers from a range of node and network faults thanks to its write replays, enabled by early value propagation.

Node and network faults during a write to a key may leave the key in a permanently Invalid state in some or all of the nodes. To prevent this, Hermes allows any invalidated operational replica to replay the write to completion without violating linearizability. This is accomplished using two mechanisms. First, the new value for a key is propagated to the replicas in INV messages (see Figure 2). Such early value propagation guarantees that every invalidated node is aware of the new value. Second, logical timestamps enable a precise global ordering of writes in each of the replicas. By combining these ideas, a node that finds a key in an Invalid state for an extended period can safely replay a write by taking on a coordinator role and retransmitting INV messages to the replica ensemble with the original timestamp (i.e., original version number and cid), hence preserving the global write order.

For fault-tolerance, the membership service and leases at replicas play a central role. If one replica is partitioned out, the coordinator cannot make progress unless the membership service updates the membership to remove that replica. The membership service waits until the lease it granted to the partitioned replica expires. The lease expiration makes the replica invalid. The membership service then increases the epoch number and disseminates the new membership information to the replicas, after which the coordinator (or any other replica node via the early value propagation technique) can make progress.
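The write replay described above can be sketched as follows. This is a toy model under my own assumptions: `Node`, `replay`, and the in-memory layout are hypothetical, the crashed coordinator is simply left out of `live_nodes` (as if the membership service had already removed it), and the "extended period" timeout is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: int
    data: dict = field(default_factory=dict)  # key -> [ts, value, state]

    def on_inv(self, key, ts, value):
        # Adopt the write only if it is newer than what we hold; always ACK.
        cur = self.data.get(key)
        if cur is None or ts > cur[0]:
            self.data[key] = [ts, value, "Invalid"]
        return ("ACK", ts)

    def on_val(self, key, ts):
        # Validate only if the VAL matches the timestamp we currently hold.
        cur = self.data.get(key)
        if cur is not None and cur[0] == ts:
            cur[2] = "Valid"


def replay(replayer, live_nodes, key):
    """An invalidated node finishes a stuck write using the ORIGINAL timestamp."""
    ts, value, state = replayer.data[key]
    if state != "Invalid":
        return False  # nothing to replay
    others = [n for n in live_nodes if n is not replayer]
    acks = [n.on_inv(key, ts, value) for n in others]
    if len(acks) == len(others):
        for n in live_nodes:
            n.on_val(key, ts)
    return True


# Coordinator (node 0) sent INV with ts (1, 0) to A only, then crashed.
a, b = Node(1), Node(2)
a.on_inv("k", (1, 0), "v")
replayed = replay(a, [a, b], "k")
```

Because the INV already carried the value, A has everything it needs to bring B up to date and then validate the key on both nodes.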

Protocol-level comparison to Paxos based solutions, and PQR

The round trip and a half protocol in Hermes has similarities (at least in terms of performance bottleneck characteristics) to the Phase-2 "accept" and Phase-3 "commit" that the Paxos leader (via the MultiPaxos optimization) performs with the followers.

The nice thing about Paxos-based solutions is that they need no outside membership/reconfiguration box. Below let's discuss how well Paxos-based solutions can hold up to Hermes's features.

Hermes distributes the coordination load across replicas. EPaxos has the same feature due to its opportunistic leaders approach. It is also possible to deploy Paxos with per-key sharding to the leaders (I think the EPaxos paper mentions this and compares against it). In our WPaxos protocol, we improved over the per-key sharding to the leaders approach, and showed how WPaxos can outperform it by stealing keys and assigning them to the closest leaders, based on the access pattern in the workload.

Hermes does local reads from one replica. Megastore from Google also allows local reads from one replica with support from coordinators.

In our recent work, we introduced Paxos Quorum Reads (PQR) and showed how to perform linearizable reads from Paxos protocols without involving the leader and without using any leases. Since PQR does not require leases, it works in an asynchronous environment. PQR requires reading from a majority of the nodes, to catch if there has been a newer pending update to the key. If there is a pending update, the clearing of the read can be done by just barriering on one replica. It is possible to relax the initial majority-quorum read and instead use fewer nodes by using a larger write quorum. While reading from multiple nodes in parallel requires more messages, it does not increase the latency. See this brief summary of Paxos Quorum Reads to learn more.
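As a rough model of the two-step idea just described (majority read, then barrier on one replica if something is pending), consider the sketch below. The reply fields `accepted`, `applied`, and `value`, and the function `quorum_read`, are hypothetical and are not the PQR paper's actual message format.

```python
def quorum_read(replies, cluster_size):
    """Decide a read from replies gathered for one key.

    Each reply is a dict with hypothetical fields:
      accepted: highest slot the replica has accepted for the key
      applied:  highest slot the replica has applied for the key
      value:    the key's value as of `applied`
    Returns ("done", value) or ("barrier", slot_to_wait_for).
    """
    if len(replies) <= cluster_size // 2:
        raise ValueError("need replies from a majority of the cluster")
    newest = max(r["accepted"] for r in replies)
    for r in replies:
        if r["applied"] >= newest:
            return ("done", r["value"])  # no pending update beyond this value
    # Some replica accepted a newer update that nobody in the quorum applied
    # yet: wait on any one replica until it has applied slot `newest`.
    return ("barrier", newest)


quiet = quorum_read(
    [{"accepted": 3, "applied": 3, "value": "a"}] * 3, cluster_size=5
)
pending = quorum_read(
    [
        {"accepted": 4, "applied": 3, "value": "a"},
        {"accepted": 3, "applied": 3, "value": "a"},
        {"accepted": 3, "applied": 3, "value": "a"},
    ],
    cluster_size=5,
)
```

Note that nothing here depends on clocks or leases, which is what lets this style of read work in an asynchronous environment.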

Evaluation

Hermes is evaluated over an RDMA-enabled reliable datastore with five replicas. The evaluation compares Hermes with ZAB and CRAQ. At 5% writes, the tail latency of Hermes is 3.6× lower than that of CRAQ and ZAB.

The performance improvement in Hermes, I think, comes from using multiple coordinators, which was not made available to ZAB or CRAQ.

The figures show that "fewer writes=more reads" is better for Hermes because of the local reads in Hermes. On the other hand, observe that for uniform key distribution workloads, CRAQ is as good as Hermes for throughput even though the replicas there are serially wired instead of parallel-wired.

For latency, the improvement due to parallel-wiring replicas is significant.

Comments

A small comment is that instead of seeing Hermes from the perspective of Chain Replication, I prefer to look at it from the perspective of Primary-Backup.

As you state, compared with Chain Replication, Hermes needs to colocate the "head" and "tail" responsibilities in the same node and use broadcast instead of chaining message propagation. This, to me, maps exactly to Primary-Backup.

So it might be easier to think of Hermes as an improvement over Primary-Backup, where the coupling of invalidations and logical timestamps makes it Primary-Primary.

Kite composes Paxos and shared registers (ABD and Eventual Store) to offer efficient and available release consistency (i.e., the same semantics offered by C++, Java programs).

In a nutshell Kite = pay exactly the price you need for your consistency by avoiding consensus or even ABD when synchronization is not required.

P.S. Thanks for pointing to RIFL, I would take a closer look and come back to you. :)

If an RMW can be designed like a Write, what is the purpose of this asymmetric design?

Thanks for your reply :)

More details can be found in the related question of Hermes Q&A:

Why does Hermes treat RMWs different from a Write?

I am also happy to explain further if necessary.

Say we have a coordinator C and 2 followers A and B: if, when receiving a write W, C sends an INV message to A and then crashes, it seems the protocol recovers by having A replay the write to B, but what if B serves a read before receiving the write replay? In such case, it would return a null value, which would violate linearizability as the client would see null as the result of its write, but would later see the result of W after the replay.

What am I missing?

That's an interesting question. Would you mind posting it in Hermes' (quora-based) QnA so that others benefit from it as well?

https://qna.hermes-protocol.com/

Thanks in advance,

-Antonis

More on this here: https://www.quora.com/q/hilnxbhqljitmcao/How-does-the-Hermes-Protocol-behave-under-coordinator-failure

re: Primary-Backup, Murat's Chain Replication post says:

> Chain replication is essentially a more efficient retake of primary-backup replication.

So it seems you are both on the same page.

I also wanted to say it might be worth including Figure 9 from the paper in the evaluation section and briefly mentioning this trade-off when compared with majority-based protocols (like Paxos/Raft): it seems the failure of a single node reduces write availability to zero until the next reconfiguration, while a majority-based protocol would be able to tolerate node failures and keep processing writes as long as a majority is operational.