Paper Review: Why Does the Cloud Stop Computing? Lessons from Hundreds of Service Outages

This paper conducts a cloud outage study of 32 popular Internet services and analyzes outage duration, root causes, impacts, and fix procedures. The paper appeared in SoCC 2016, and the authors are Gunawi, Hao, Suminto, Laksono, Satria, Adityatama, and Eliazar.

Availability is clearly very important for cloud services. Downtimes cause financial and reputation damages. As our reliance to cloud services increase, loss of availability creates even more significant problems. Yet, several outages occur in cloud services every year. The paper tries to answer why outages still take place even with pervasive redundancies.

To answer that big question, here are the more focused questions the paper answers first.

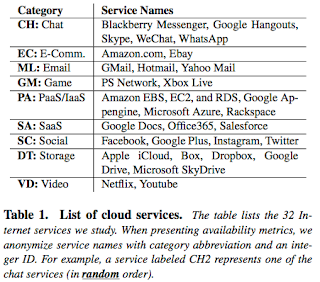

It turns out they just used Google search. They identified 32 popular cloud services (see Table 1), and then googled "service_name outage month year" for every month between January 2009 and December 2006. Then they went through the first 30 search hits and gathered 1247 unique links that describe 597 outages. They then systematically went through those post-mortem reports. Clever!

The paper says that this survey was possible "thanks to the era of providers' transparency". But this also constitutes the caveat for there approach as well. The results are only as good as the providers' transparency allowed. First, the dataset is not complete. Not all outages are reported publicly. The paper defines "service outage" as an unavailability of full or partial features of the service that impacts all or a significant number of users in such a way that the outage is reported publicly. Second, there is a skew in the dataset. The more popular a service is, the more attention its outages will gather. Third, outage classifications are incomplete due to lack of information. For example, only 40% outage descriptions reveal root causes and only 24% reveal fix procedures. (These ratios are disappointingly low.) And finally root causes are sometimes described vaguely in the postmortem reports. "Due to a configuration problem" can imply software bugs corrupting the configuration or operators setting a wrong configuration. But in this case, the paper chooses tags based on the information reported and use CONFIG tag, and not the BUGS or HUMAN tags.

In order not to discredit any service, the paper anonymizes the service names as category type followed by a number. (It is left as a fun exercise to the reader to de-anonymize the service names. :-)

If we consider only the worst year from each service, 10 services (31%) do not reach 99% uptime and 27 services (84%) do not reach 99.9% uptime. In other words, five-nine uptime (five minutes of annual downtime) is still far from reach.

Regarding the question "does service maturity help?", I got this wrong. I had guessed that young services would have more outages than mature services. But turns out, the outage numbers from young services are relatively small. Overall, the survey shows that outages can happen in any service regardless of its maturity. This is because the services do not remain the same as they mature. They evolve and grow with each passing year. They handle more users and complexity increases with the added features. In fact, as discussed in the root causes section, every root cause can occur in large popular services almost in every year. As services evolve and grow, similar problems in the past might reappear in new forms.

The “Cnt” column in Table 3 shows that 355 outages (out of the total 597) have UNKNOWN root causes. Among the outages with reported root causes, UPGRADE, NETWORK, and BUGS are three most popular root causes, followed by CONFIG and LOAD. I had predicted the most common root causes to be configuration and update related and I was right about that.

BUGS label is used for tag reports that explicitly mention “bugs” or “software errors”. UPGRADE label implies hardware upgrades or software updates. LOAD denotes unexpected traffic overload that lead to outages. CROSS labels outages caused by disruptions from other services. POWER denotes failures due to power outages which account for 6% of outages in the study. Last but not least, external and natural disasters (NATDIS) such as lightning strikes, vehicle crashing into utility poles, government construction work cutting two optical cables, and similarly under-water fibre cable cut, cover 3% of service outages in the study.

The paper mentions that UPGRADE failures require more research attention. I think the Facebook "Configerator: Holistic Configuration Management" paper is a very relevant effort to address UPGRADE and CONFIG failures.

The paper answers this paradox as follows: "We find that the No-SPOF principle is not merely about redundancies, but also about the perfection of failure recovery chain: complete failure detection, flawless failover code, and working backup components. Although this recovery chain sounds straightforward, we observe numerous outages caused by an imperfection in one of the steps. We find cases of missing or incorrect failure detection that do not activate failover mechanisms, buggy failover code that cannot transfer control to backup systems, and cascading bugs and coincidental multiple failures that cause backup systems to also fail."

While the paper misses to mention them, I believe the following work are very related for addressing the No-SPOF problem. The first one is the crash-only software idea, which I had reviewed before: "Crash-only software refers to computer programs that handle failures by simply restarting, without attempting any sophisticated recovery. Since failure-handling and normal startup use the same methods, this can increase the chance that bugs in failure-handling code will be noticed." The second line of work is on recovery blocks and n-version software. While these are old ideas, they should still be applicable for modern cloud services. Especially with the current trend of deploying microservices, micro-reboots (advocated by crash-only software) and n-version redundancy can see more applications.

Figure 5 breaks down the root-cause impacts into 6 categories: full outages (59%), failures of essential operations (22%), performance glitches (14%), data loss (2%), data staleness/inconsistencies (1%), and security attacks/breaches (1%). Figure 5a shows the number of outages categorized by root causes and implications.

Only 24% of outage descriptions reveal the fix procedures. Figure 5.b breaks down reported fix procedures into 8 categories: add additional resources (10%), fix hardware (22%), fix software (22%), fix misconfiguration (7%), restart affected components (4%), restore data (14%), rollback software (8%), and "nothing" due to cross-dependencies (12%).

On a personal note, I am fascinated with "failures". Failures are the spice of distributed systems. Distributed systems would not be as interesting and challenging without them. For example, without crashed nodes, without loss of synchrony, and without lost messages, the consensus problem is trivial. On the other hand, with any of those failures, it becomes impossible to solve the consensus problem (i.e., to satisfy both safety and liveness specifications), as the attacking generals and FLP impossibility results prove.

http://muratbuffalo.blogspot.com/2013/04/attacking-generals-and-buridans-ass-or.html

http://muratbuffalo.blogspot.com/2010/10/paxos-taught.html

Here is an earlier post from me about failures, resilience, and beyond: "Antifragility from an engineering perspective".

Here is Dan Luu's summary of Notes on Google's Site Reliability Engineering book.

Finally this is a paper worth reviewing as a future blog post: How Complex Systems Fail.

Availability is clearly very important for cloud services. Downtime causes financial and reputational damage. As our reliance on cloud services increases, loss of availability creates even more significant problems. Yet several outages occur in cloud services every year. The paper tries to answer why outages still take place even with pervasive redundancies.

To answer that big question, here are the more focused questions the paper answers first.

- How many services do not reach 99% (or 99.9%) availability?

- Do outages happen more in mature or young services?

- What are the common root causes that plague a wide range of service deployments?

- What are the common lessons that can be gained from various outages?

Before reading the paper, I made my own guesses at the answers:

- 99% availability is easily achievable, but 99.9% availability is still hard for many services.

- Young services would have more outages than mature older services.

- Most common root causes would be configuration and update related problems.

- "KISS: Keep it simple stupid" would be a common lesson.

Methodology of the paper

The paper surveys 597 outages from 32 popular cloud services. Wow, that is impressive! One would think these authors must be very well connected to teams in the industry to perform such an extensive survey.

It turns out they just used Google search. They identified 32 popular cloud services (see Table 1), and then googled "service_name outage month year" for every month between January 2009 and December 2015. Then they went through the first 30 search hits and gathered 1247 unique links that describe 597 outages. They then systematically went through those post-mortem reports. Clever!
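To make the search procedure concrete, here is a minimal sketch of how the monthly queries could be generated. It assumes the study window runs from January 2009 through December 2015, and the service names and the `outage_queries` helper are hypothetical placeholders of mine, not anything from the paper.

```python
from itertools import product

MONTHS = [
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
]


def outage_queries(services, first_year=2009, last_year=2015):
    """Yield one "service_name outage month year" query per service and month.

    The year range is an assumption about the study window.
    """
    years = range(first_year, last_year + 1)
    for service, year, month in product(services, years, MONTHS):
        yield f"{service} outage {month} {year}"


# Hypothetical service names, used only for illustration.
services = ["ExampleMail", "ExampleStorage"]
queries = list(outage_queries(services))

print(len(queries))   # 2 services * 7 years * 12 months = 168
print(queries[0])     # ExampleMail outage January 2009
```

Under the same assumption, 32 services would need 32 * 84 = 2688 such queries, each followed by a manual pass over the first 30 hits.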

The paper says that this survey was possible "thanks to the era of providers' transparency". But this is also the main caveat of their approach: the results are only as good as the providers' transparency allows. First, the dataset is not complete. Not all outages are reported publicly. The paper defines "service outage" as an unavailability of full or partial features of the service that impacts all or a significant number of users in such a way that the outage is reported publicly. Second, there is a skew in the dataset. The more popular a service is, the more attention its outages will gather. Third, outage classifications are incomplete due to lack of information. For example, only 40% of outage descriptions reveal root causes and only 24% reveal fix procedures. (These ratios are disappointingly low.) And finally, root causes are sometimes described vaguely in the postmortem reports. "Due to a configuration problem" can imply software bugs corrupting the configuration or operators setting a wrong configuration. In such cases, the paper chooses tags based only on the information reported and uses the CONFIG tag, not the BUGS or HUMAN tags.

In order not to discredit any service, the paper anonymizes the service names as category type followed by a number. (It is left as a fun exercise to the reader to de-anonymize the service names. :-)

Availability

If we consider only the worst year from each service, 10 services (31%) do not reach 99% uptime and 27 services (84%) do not reach 99.9% uptime. In other words, five-nine uptime (five minutes of annual downtime) is still far out of reach.
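To put these availability targets in perspective, here is a small back-of-the-envelope calculation of the annual downtime each one allows. The helper name `annual_downtime_minutes` is my own, and the sketch assumes a 365-day year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years are ignored


def annual_downtime_minutes(availability_percent):
    """Return the downtime budget, in minutes per year, for an uptime target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


for target in (99.0, 99.9, 99.999):
    minutes = annual_downtime_minutes(target)
    print(f"{target}% uptime: {minutes:,.1f} minutes/year ({minutes / 60:,.1f} hours)")
```

So 99% uptime still allows about 87.6 hours of downtime a year, 99.9% allows about 8.8 hours, and five nines allows only about 5.3 minutes.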

Regarding the question "does service maturity help?", I got this wrong. I had guessed that young services would have more outages than mature services. But it turns out that the outage numbers from young services are relatively small. Overall, the survey shows that outages can happen in any service regardless of its maturity. This is because services do not remain the same as they mature. They evolve and grow with each passing year. They handle more users, and complexity increases with the added features. In fact, as discussed in the root causes section, every root cause can occur in large popular services in almost every year. As services evolve and grow, problems similar to past ones may reappear in new forms.

Root causes

The “Cnt” column in Table 3 shows that 355 outages (out of the total 597) have UNKNOWN root causes. Among the outages with reported root causes, UPGRADE, NETWORK, and BUGS are the three most common, followed by CONFIG and LOAD. I had predicted the most common root causes to be configuration and update related, and I was right about that.

The BUGS label is used to tag reports that explicitly mention “bugs” or “software errors”. The UPGRADE label implies hardware upgrades or software updates. LOAD denotes unexpected traffic overload that leads to outages. CROSS labels outages caused by disruptions from other services. POWER denotes failures due to power outages, which account for 6% of outages in the study. Last but not least, external and natural disasters (NATDIS), such as lightning strikes, vehicles crashing into utility poles, government construction work cutting two optical cables, and underwater fibre cable cuts, cover 3% of service outages in the study.

The paper mentions that UPGRADE failures require more research attention. I think the Facebook "Configerator: Holistic Configuration Management" paper is a very relevant effort to address UPGRADE and CONFIG failures.

Single point of failure (SPOF)?

While component failures such as NETWORK, STORAGE, SERVER, HARDWARE, and POWER failures are anticipated and thus guarded with extra redundancies, how come their failures still lead to outages? Is there another "hidden" single point of failure?

The paper answers this paradox as follows: "We find that the No-SPOF principle is not merely about redundancies, but also about the perfection of failure recovery chain: complete failure detection, flawless failover code, and working backup components. Although this recovery chain sounds straightforward, we observe numerous outages caused by an imperfection in one of the steps. We find cases of missing or incorrect failure detection that do not activate failover mechanisms, buggy failover code that cannot transfer control to backup systems, and cascading bugs and coincidental multiple failures that cause backup systems to also fail."
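One way to see why redundancy alone is not enough is a toy probability model of the recovery chain. This is my own illustration, not a model from the paper. The function name and all the probabilities are hypothetical, and the sketch assumes the three steps succeed independently, which the "coincidental multiple failures" in the quote show is optimistic.

```python
def outage_probability(p_primary_fails, p_detected, p_failover_works, p_backup_works):
    """Toy model: an outage occurs when the primary fails and recovery does not succeed.

    Recovery needs every link of the chain (detection, failover, backup) to work.
    Independence between the links is an assumption of this sketch.
    """
    p_recovery = p_detected * p_failover_works * p_backup_works
    return p_primary_fails * (1 - p_recovery)


# Hypothetical numbers, chosen only for illustration.
no_redundancy = outage_probability(0.10, 0.0, 0.0, 0.0)
perfect_chain = outage_probability(0.10, 1.0, 1.0, 1.0)
leaky_chain = outage_probability(0.10, 0.9, 0.9, 0.9)

print(f"no redundancy: {no_redundancy:.1%}")
print(f"perfect chain: {perfect_chain:.1%}")
print(f"90% per step:  {leaky_chain:.1%}")
```

With each step working 90% of the time, the chain as a whole recovers only about 73% of failures, so roughly 2.7% of periods still end in an outage in this toy setup, compared with 10% with no backup at all. The backup helps, but an imperfection in any one step leaks outages through.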

Although the paper does not mention them, I believe the following lines of work are closely related to the No-SPOF problem. The first one is the crash-only software idea, which I had reviewed before: "Crash-only software refers to computer programs that handle failures by simply restarting, without attempting any sophisticated recovery. Since failure-handling and normal startup use the same methods, this can increase the chance that bugs in failure-handling code will be noticed." The second line of work is on recovery blocks and n-version software. While these are old ideas, they should still be applicable to modern cloud services. Especially with the current trend of deploying microservices, micro-reboots (advocated by crash-only software) and n-version redundancy can see more applications.
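To illustrate the crash-only idea, here is a minimal sketch of a supervisor that recovers a worker simply by constructing it again, so recovery runs exactly the same code as normal startup. The `FlakyCounter` worker, the `supervise` function, and the dict standing in for durable storage are all hypothetical names of mine.

```python
class FlakyCounter:
    """Hypothetical worker whose only recovery path is its normal startup path."""

    def __init__(self, store):
        self.store = store  # stands in for durable state kept outside the process
        self.count = store.get("count", 0)  # startup and recovery both reload here

    def step(self):
        # Simulate a single crash the first time the counter reaches 2.
        if self.count == 2 and not self.store.get("crashed_once"):
            self.store["crashed_once"] = True
            raise RuntimeError("simulated crash")
        self.count += 1
        self.store["count"] = self.count


def supervise(store, steps, max_restarts=3):
    """Run `steps` successful steps, restarting the worker from scratch on failure."""
    worker = FlakyCounter(store)
    restarts = 0
    done = 0
    while done < steps:
        try:
            worker.step()
            done += 1
        except RuntimeError:
            if restarts >= max_restarts:
                raise
            restarts += 1
            worker = FlakyCounter(store)  # "recovery" is just a fresh start
    return worker.count, restarts


final_count, restarts = supervise({}, steps=5)
print(final_count, restarts)  # 5 1
```

Because there is no separate recovery routine, every ordinary start exercises the code that runs after a crash, which is the property the quote highlights.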

Figure 5 breaks down the root-cause impacts into 6 categories: full outages (59%), failures of essential operations (22%), performance glitches (14%), data loss (2%), data staleness/inconsistencies (1%), and security attacks/breaches (1%). Figure 5a shows the number of outages categorized by root causes and implications.

Only 24% of outage descriptions reveal the fix procedures. Figure 5b breaks down reported fix procedures into 8 categories: add additional resources (10%), fix hardware (22%), fix software (22%), fix misconfiguration (7%), restart affected components (4%), restore data (14%), rollback software (8%), and "nothing" due to cross-dependencies (12%).

Conclusions

The take-home message from the paper is that outages happen because software is a SPOF. This is not a new message, but the paper's contribution is to validate and restate this for cloud services.

On a personal note, I am fascinated with "failures". Failures are the spice of distributed systems. Distributed systems would not be as interesting and challenging without them. For example, without crashed nodes, without loss of synchrony, and without lost messages, the consensus problem is trivial. On the other hand, with any of those failures, it becomes impossible to solve the consensus problem (i.e., to satisfy both safety and liveness specifications), as the attacking generals and FLP impossibility results prove.

http://muratbuffalo.blogspot.com/2013/04/attacking-generals-and-buridans-ass-or.html

http://muratbuffalo.blogspot.com/2010/10/paxos-taught.html

Related links

Jim Hamilton (VP and Distinguished Engineer at Amazon Web Services) is also fascinated with failures. In his excellent blog Perspectives, he provided detailed analysis of the tragic end of the Italian cruise ship Costa Concordia (Post1, Post2) and another analysis about the Fukushima disaster. His paper titled "On designing and deploying internet scale services" is also a must read. Finally, here is a video of his talk "failures at scale and how to ignore them".

Here is an earlier post from me about failures, resilience, and beyond: "Antifragility from an engineering perspective".

Here is Dan Luu's summary of Notes on Google's Site Reliability Engineering book.

Finally this is a paper worth reviewing as a future blog post: How Complex Systems Fail.
