Smoke and Mirrors: Reflecting Files at a Geographically Remote Location Without Loss of Performance

This paper from FAST'09 introduces the Smoke and Mirrors File System (SMFS), which mirrors files at a geographically remote datacenter with negligible impact on performance. It turns out remote mirroring is a major problem for banking systems, which keep off-site mirrors (connected by dedicated high-speed 10-40 Gbit/s optical links) to survive disasters.

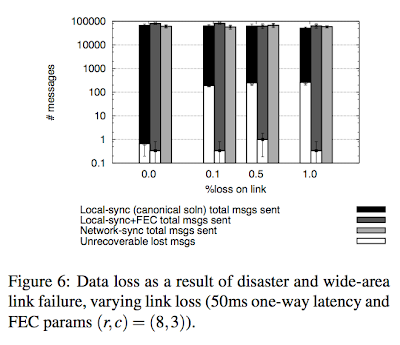

This paper is about disaster tolerance, not your everyday fault tolerance. The fault model assumes that the primary site may be destroyed while sporadic packet loss of up to 1% occurs at the same time, and yet no data may be lost. (Data is said to be lost if the client has been acknowledged for an update but the corresponding update no longer exists anywhere in the system.) Describing the primary site as destroyed may be a bit of an over-dramatization. An equivalent way to state the fault model is that the paper rules out post hoc correction (replay or manual correction). Here is how manual correction would work: if a power outage occurs, the system drops some requests, and the mirror is left inconsistent, then once the primary is back up we can restore the lost requests from the primary and make the mirror eventually consistent. The paper rules that out and insists that the system never loses any data.

Existing mirroring techniques

Here are the existing mirroring techniques in use:

Synchronous mirroring sends an acknowledgment to the client only after receiving a response from the mirror. Data cannot be lost unless both the primary and mirror sites fail. This is the most dependable solution, but performance suffers because wide-area one-way link latencies can be up to 50 ms.

Semi-synchronous mirroring sends an acknowledgment to the client after the written data is stored locally at the primary site and an update has been sent to the mirror. This scheme does not lose data unless the primary site fails and the sent packets are dropped on the way to the mirror.

Asynchronous mirroring sends acknowledgments to the client immediately after data is written locally. This is the solution that provides the best performance, but it is also the least dependable solution. Data loss can occur even if just the primary site fails.
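The difference between the three schemes comes down to which event triggers the client acknowledgment. A minimal sketch of that timing follows. The local write and send costs are made-up illustrative values, the 50 ms one-way latency is the upper figure quoted above, and the model assumes the local write and the wide-area round trip happen one after the other, which a real system might overlap.

```python
# Simplified model of when the client sees an acknowledgment in each scheme.
# LOCAL_WRITE_MS and SEND_MS are illustrative assumptions, not measurements.

LOCAL_WRITE_MS = 1.0   # assumed time to store the update at the primary
SEND_MS = 0.1          # assumed time to hand the update to the network
ONE_WAY_MS = 50.0      # one-way wide-area latency (upper figure from the text)


def ack_latency_ms(mode: str) -> float:
    """Return the delay before the client is acknowledged, in milliseconds."""
    if mode == "asynchronous":
        # Ack as soon as the data is written locally.
        return LOCAL_WRITE_MS
    if mode == "semi-synchronous":
        # Ack once the data is stored locally and the update has been sent.
        return LOCAL_WRITE_MS + SEND_MS
    if mode == "synchronous":
        # Ack only after the mirror responds, which costs a full round trip.
        return LOCAL_WRITE_MS + 2 * ONE_WAY_MS
    raise ValueError(f"unknown mode: {mode}")


# Failure combinations under which an acknowledged update can be lost,
# as described in the text.
DATA_LOSS_CONDITION = {
    "asynchronous": "primary fails",
    "semi-synchronous": "primary fails and sent packets are dropped",
    "synchronous": "primary and mirror both fail",
}

if __name__ == "__main__":
    for mode, condition in DATA_LOSS_CONDITION.items():
        print(f"{mode:>16}: ack after {ack_latency_ms(mode):6.1f} ms; "
              f"data lost if {condition}")
```

Under these assumptions the synchronous acknowledgment is dominated entirely by the wide-area round trip, while the other two schemes never wait on the link at all.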

Proposed network-sync mirroring

Clearly, semi-synchronous mirroring strikes a good balance between reliability and performance. The approach proposed in SMFS is actually a small improvement on semi-synchronous mirroring. The basic idea is to ensure that once a packet has been sent, the likelihood that it will be lost is as low as possible. They do this by sending forward error correction (FEC) data along with the packet and informing the sending application once the FEC data has been sent along with the data. (Reed-Solomon coding is one example of FEC.) They call this technique "network-sync mirroring".
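To make the FEC idea concrete, here is a minimal sketch using the simplest possible code: a single XOR parity packet over a group of equal-length data packets, which lets the receiver rebuild any one lost packet without a retransmission. This is not the coding scheme used by SMFS or Maelstrom, and the function names are hypothetical; it only illustrates why sending repair data lowers the chance that a sent update is lost.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """Build one repair packet as the XOR of all data packets in the group."""
    return reduce(xor_bytes, packets)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild the group at the mirror; None marks a packet lost in transit."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("a single XOR parity packet can repair only one loss")
    repaired = list(received)
    if missing:
        present = [p for p in received if p is not None]
        # XOR of the parity with every surviving packet yields the lost one.
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


if __name__ == "__main__":
    group = [b"write-01", b"write-02", b"write-03"]  # equal-length updates
    parity = make_parity(group)
    # Simulate the second packet being dropped on the wide-area link.
    arrived = [group[0], None, group[2]]
    assert recover(arrived, parity) == group
    print("lost packet rebuilt from parity")
```

In network-sync terms, the primary would acknowledge the client once both the data packets and the repair packet have been put on the wire, rather than waiting for the mirror to respond.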

This idea is simple and straightforward, but this work provides a very good execution of it. SMFS builds on the authors' previous work, Maelstrom (NSDI'08), to provide FEC for wide-area network transmission. SMFS implements a filesystem that preserves the order of operations in the structure of the filesystem itself: a log-structured filesystem. The paper also presents several real-world experiments to evaluate the performance of SMFS as well as its disaster tolerance. Here are two graphs from the evaluation section.
