Beyond the Code: TLA+ and the Art of Abstraction

I have been teaching a TLA+ miniseries inside AWS. I just finished the 10th week, with a one hour seminar each week. I wanted to pen down my learnings from this experience.

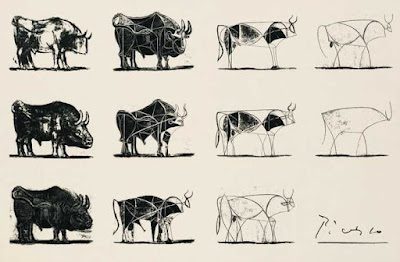

The art of abstraction

Let's start with abstraction. Abstraction is a powerful tool for avoiding distraction. The etimology of the word abstract comes from Latin for cut and draw away. With abstraction, you slice out the protocol from a complex system, omit unnecessary details, and simplify a complex system into a useful model. For example, if you are interested in the consistency model of your distributed system, you can abstract away the mechanics of communication in the system when that is an unnecessary distraction.

In his 2019 talk, Leslie Lamport said:

Abstraction, abstraction, abstraction! That's how you win a Turing Award.Inside Amazon abstraction is also very valuable. We see skilled engineers look at large systems, slice out a protocol/subsystem, cut to the essence of it, and dive deep to make the change to improve it or fix a problem. Abstraction is an invaluable skill. This is what I mean by "going beyond the code" in the title.

The only mental tool by means of which a very finite piece of reasoning can cover a myriad cases is called "abstraction"; as a result the effective exploitation of his powers of abstraction must be regarded as one of the most vital activities of a competent programmer. In this connection it might be worth-while to point out that the purpose of abstraction is not to be vague, but to create a new semantic level in which one can be absolutely precise. --Edsger W. DijkstraWhen we say abstraction, we are not just talking about layering (as in OSI model), interfaces, or modules and encapsulation. That is a very narrow understanding of the idea of abstraction. The more general understanding would be about refinement: a high-level abstract model allowing multiple implementations. The art is to see/find that high-level abstract model that eliminates the irrelevant and the amplifies the essential. You also need to figure out what is the appropriate level for the abstract component, and what is the nature of that abstract component, so it allows a more efficient or fault-tolerant implementation that you may be interested in without interfering with other subsystems in its environment. Abstraction requires both domain knowledge and proper skills, lest you abstract away an important detail/feature -- going with the first meaning of detail here.

We can not solve our problems with the same level of thinking that created them --Albert EinsteinWe would like to teach this skill to new engineers. But how do we go about this? My biggest learning from the TLA+ miniseries has been that: TLA+ is useful for teaching engineers the art of abstraction and the right way to think/reason about distributed systems.

Don't get me wrong. I found abstraction the hardest thing to teach in my series. It is almost as if it requires osmosis and some transfer of zen to communicate the abstraction idea. It still remains pretty much an art.

But TLA+ is very useful in getting across the idea of abstraction, and below I will try to explain why I think so.

Foundations of TLA+: specifying with math

TLA+ has been popular for distributed systems modeling. Lamport came up with it in the 1990s. It's built on strong principles for modeling practical systems, since Lamport had strong industry collaborations. The model checker came in 2000. But even throughout the nineties, TLA+ was delivering value for people to write precise and unambiguous specification, and make them focus on the problem. English is ambiguous.

Specifying using math, rather than a programming language, has a special magic to it. It forces you to focus on the what rather than the how. It forces you--it shakes you-- out of your comfort zone. And you have to think mathematically.

I experienced developers grabs this concept quickly. I worked with an Level 7 and Level 6 engineer in my team and they were able to become productive with TLA+ in a couple of days. I wrote a system and the L7 zoomed in on a complicated subsystem of it and wrote a TLA+ model in a couple of days. For the other case, I modeled a protocol and the L6 was able to, in a couple of days, pick up on TLA+ and write variants of the protocol and solve his problems with these models. When I talked to them afterwards, they told me that they wish all their programming could be this fast. And magic like.

Foundations of TLA+: global state-transition model

TLA+ enables you to write succinct models fast. Marc Brooker had a throwaway comment on company Slack, which I love and try to put in all my talks about TLA+.

Global shared memory model is surely a handy fiction --Marc BrookerYes, TLA+ gives you a stop-the-world transition from one global state to another state by way of a protocol action, and it gives you the God view into the global state of the distributed system you are modeling. The consequence is that safety invariants and liveness properties are written as one liners since the entire global state is accessible for evaluation. This setup enables TLA+ users to model and reason about distributed systems in a succinct and fast manner.

If you want some elaboration on this, here is the TLA+ computation model in a nut shell. A system consist of two things:

- A set of variables (global shared memory model)

- A set of assignments (called "actions")

The global system state evolves as actions take the state to the next state, state', (as in Hoare logic).

{state} transitioning-action {state’}

Finally, the execution of an assignment can be conditional on a predicate (i.e., guard) being true. And that's all folks. This is TLA+'s handy fiction that enables you to write succinct models fast.

TLA+ use at AWS

I covered two points about TLA+ (saving a third one at the end of the post)

- TLA+ forces you to think mathematically/abstractly

- TLA+ enables you to write succinct models fast (by giving you this handy fiction of global shared memory)

I think these make TLA+ indispensable for design space exploration and converging on a protocol or a protocol variant for discovery, improvement, or fixing.

You can see this niche playing through AWS history. Chris Newcombe and Tim Raft gave a Principals of Amazon talk in 2012 bringing TLA+ to the attention of other engineers working on tricky distributed systems protocols. They published a paper in 2015 and shared this with the public. This fired up TLA+ interest in industry.

Today there are hundreds of TLA+ models in AWS, including from S3, DynamoDB, EBS, EFS, Lambda, Journal, etc. The common pattern on all these models is that, the engagement came organic and in a grassroots manner rather than by prescription or through a systematic process. An engineer is faced with a tricky concurrency/distributed system problem, and they bang their heads to the wall. Then they realize that they need to solve this problem at a higher level of thinking that created the problem. They hear by word of mouth about TLA+ and apply it to their problem, and solve it. Then they go away to do other things for a couple more years until they see another suitable problem to apply it. That's why I teach the miniseries and record the lectures. I like to teach the engineers to be prepared so when they see a problem that would benefit from TLA+ modeling. The problem the L6 I mentioned earlier was working on was the secondary index build. This is a background job. I mean, you wouldn't think this is concurrency/coordination intensive task, it is a background job. But it has interesting tricky concurrence race conditions with the foreground operations, and it benefited a lot from modeling with TLA+. If you learn TLA+ in advance, you would be in a good position (luck favors the prepared) to recognize when this is a useful problem to apply TLA+ and get leverage from it.

Other learnings from teaching

Here are some other learnings from my teaching. The hardest thing to teach is still abstraction. TLA+ makes this easier by forcing you from your comfort zone to think beyond the code. But still abstraction is an art. It seems to require osmosis to learn if you have hints, send them my way, because I'm really curious to learn. This is an important thing to teach to our engineers. What I found is nothing beats hands on learning and reading other models. So that's what I try to do in my miniseries.

There is still insufficient content for teaching intermediate concepts like refinement. Refinement is important because it is at the heart of the abstraction concept. TLA+ makes refinement very convenient, but we need to see more examples. I relegate my discussion of refinement to the end of this post.

The engineers like PlusCal. So I teach them how to write in PlusCal and read in TLA+. PlusCal is the gateway drug to TLA+, as Chris Newcombe likes to say.

The VScode plugin that came recently has been great. It creates less friction in learning/trying TLA+ and we need more ecosystem tools that reduce friction. And if we want the TLA+ idea to reach more developers and thrive, we need developer influencers and good expositioners, like Hillel. We need practical examples that developers can relate to. Hillel's learntla site is a very useful resource, and we need more.

I had recently committed a snapshot isolated key-value database model to the TLA+ examples repo. I request you to contribute more TLA+ specification examples to https://github.com/tlaplus/Examples/, so you can help people to pickup TLA+ and master the art of modeling with TLA+. We need examples from different use cases and subfields.

What will the future bring

I am really excited about the TLA+ foundation that launched in April under the Linux foundation. AWS is of course an inaugural member and sponsor. TLA+ has monthly community meetings open to public, and I hope the foundation will lead to way to more people getting involved in the development of TLA+ tools and ecosystem. Leslie Lamport attends the community meetings, so this could be your chance to see him at work, and get involved with the TLA+ community.

I like to mention two big threads for the future. The first is test case generation from TLA+ models to exercise the underlying implementation to explore behavior in cornercase scenarios. The second is the dual of it. Feeding the implementation's traces (with an appropriate abstraction function) to the high level TLA+ models for conformance checking. There are already some examples of these, but we hope to see some tools mature in these areas. The TLA+ foundation will release a call for proposal soon and include these areas for funding.

There are other interesting stuff happening on TLA+, like simulation for performance and availability modeling. You can see an example of it here.

Coexist! Use the right tool for the job

"In most cases, strengths and weaknesses are two sides of the same coin. A strength in one situation is a weakness in another..."

-- Steve Jobs

I am a big fan of TLA+, but I'm not a language zealot/exclusivist. A good craftsperson does not blame their tools, right? A good craftsperson also does not say "oh my screwdriver, that's my best tool. It is all I need all the time. I use it instead of a hammer." No, you use the right tool for the right job. So for something that requires utmost confidence use an automated proof tool. Although TLA+ has TLAPS proof system Daphne is superior for proving transition systems. So use Daphne for that, right? So for conformance checking or testing, use a suitable modeling tool. AWS published "Using Lightweight Formal Methods to Validate a Key-Value Storage Node in Amazon S3" where they used Rust based reference models for validating a system written in Rust. P would also work for conformance checking.

My point is, there is still a big niche for TLA+ for modeling a protocol at the abstract level and making progress there: fixing a problem, or coming up with a variant of it by exploring the design space further. With TLA+, you are able to abstract things away, and you are able to write one-liners for checking, and focus on the essential quickly. Formal methods need to learn to "coexist" like the American bumper sticker says, and even better to learn to thrive together by sharing some formal methods tooling infrastructure.

Foundations of TLA+: refinement

Ok, this is what I saved for the end of the post. If you are still interested in reading more about TLA+ and abstraction, here it is.Refinement is at the heart of abstraction, and is also a cornerstone of TLA+. In TLA+, refinement is just implication just as God intended. This means, the concrete system behaviors is a subset of allowed abstract system behaviors. So refinement is well supported in TLA+. Even any invariant evaluation is done in principle as refinement: Does the system model refine/implement this invariant formula?

Unfortunately, as I mentioned above, we don't see many examples of a TLA+ model refining another in terms of implementing in a stepwise refinement hierarchy. It is well supported, but we don't see more examples of it. It is simply done via declaring an instance of the abstract system in the concrete one, and checking the abstract's spec as an action property via TLC. This checks that any behavior generated of the concrete system is an accepted behavior of the abstract system.

Leslie Lamport's talk I referenced above gives a great example of this, when he explained how he had discovered Paxos. There the abstract *Consensus* problem is given as a brief abstract system. Then an intermediate abstract solution, voting is defined, and shown (and model-checked) to be a refinement of the *Consensus* problem model. The *Voting* model is still abstract, because it describes what properties the voting need to satisfy, but not how the acceptors implement these properties. Finally, Lamport shows a *Paxos* model that refines the *Voting* model. (Some people may find Hillel's simpler refinement example easier to follow.)

What is the right level of abstraction to use? How do we choose the abstract system to refine to? In a quote attributed to Einstein, he says: "Everything should be made as simple as possible, but not simpler." Not very useful, isn't it? But that is all we have as a guideline. Here all we can show is examples of refinement and examples of abstraction, and wait till the concepts click.

Of course the engineers initially fight with it. When we went over a two-phase commit model, they asked me why this is using shared memory rather than message passing. I explained that for the properties I like to explore, such as blocking upon transaction manager (TM) failure, the shared memory model is enough where the TM can read the states of resource managers (RMs) and RMs can read the state of TM. We would be able change this to a simple message passing version if we add a set to maintain the messages sent and filter from that set. For the next seminar, I wrote a message passing version. This added more state, made the model bloated, but did not add any interesting behavior over the shared memory version. I hated doing it, and had to take a shower afterwards, because it felt dirty.

Comments