Paper review. GraphLab: A New Framework for Parallel Machine Learning

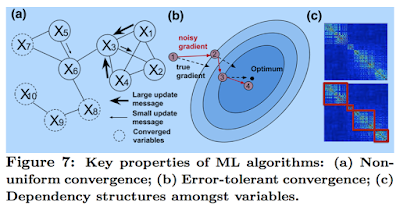

This GraphLab paper is from 2010. It is elegantly written and does a good job of explaining the GraphLab abstraction and its foundations. A later VLDB 2012 paper shows how to extend this basic GraphLab abstraction to a distributed GraphLab implementation. GraphLab seems to have taken off in a big way since then: there are workshops and conferences about GraphLab, and it is now developed by the company Dato (formerly GraphLab Inc.). Dato Inc. raised $6.75M in its A round from Madrona and New Enterprise Associates, and $18.5M in its B round from Vulcan Capital and Opus Capital, as well as Madrona and New Enterprise Associates.

Introduction

The motivation for GraphLab was to hit the sweet spot in the development of machine learning solutions. As of 2010, in the paper's words: "Existing high-level parallel abstractions like MapReduce are insufficiently expressive, while low-level tools like MPI and Pthreads leave machine learning (ML) experts repeatedly solving the same design challenges." GraphLab a