SIGMOD'23: Industry talks, and Ted Codd Innovation Award

Sponsored industry talks

Amazon: Innovation in AWS Database Services — Marc Brooker

Marc, who is a VP/Distinguished Engineer at AWS, talked about systems issues in AWS database services. (If you are not following Marc's blog feed, you should take a couple of seconds and fix this problem now.) He illustrated the enormous scale that AWS databases deal with by mentioning that DynamoDB processed 89 million requests/sec during Prime Day. The problem is not only to scale up, but also to scale down: the spectrum of scale spans a billion-fold difference, and systems support is needed to scale both up and down. Consider Aurora Serverless v2, which launched a couple of years ago. The team had to solve problems like how to grow and shrink in place, how much cache to allocate, and how much temporary storage to use. (CPU provisioning was easier, since it is elastic.) The reasoning behind the five-minute rule by Gray/Putzolu remains relevant, although the constants have changed since 1986. We still care about latency/throughput and the variance in them. Workload placement is another important question: which workload do we move, the growing one, the idle one, or the busy one?
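To make the five-minute-rule reasoning concrete, here is a small calculation in Python. It uses the break-even formula as restated in Gray and Graefe's 1997 revisit of the rule: keep a page in RAM if it is re-referenced more often than (pages per MB of RAM / accesses per second per disk) × (price per disk drive / price per MB of RAM). The function names and the prices in the example are hypothetical placeholders, not AWS numbers; this is a sketch of the reasoning, not how Aurora actually sizes its cache.

```python
def break_even_interval_s(page_size_kb: float, disk_accesses_per_s: float,
                          disk_price: float, ram_price_per_mb: float) -> float:
    """Re-reference interval (seconds) at which caching a page in RAM costs
    the same as fetching it from disk on every access."""
    pages_per_mb = 1024 / page_size_kb
    technology_ratio = pages_per_mb / disk_accesses_per_s
    economic_ratio = disk_price / ram_price_per_mb
    return technology_ratio * economic_ratio


def should_cache(reaccess_interval_s: float, break_even_s: float) -> bool:
    # Pages re-referenced at least this often are cheaper to keep in RAM.
    return reaccess_interval_s <= break_even_s


if __name__ == "__main__":
    # Hypothetical prices and device characteristics, for illustration only.
    t = break_even_interval_s(page_size_kb=4, disk_accesses_per_s=100,
                              disk_price=50.0, ram_price_per_mb=0.005)
    print(f"break-even interval: {t:.0f} s")
    print("cache a page touched every 10 min?", should_cache(600, t))
```

With these hypothetical inputs the break-even interval comes out to roughly 25,600 seconds. Plugging in SSD-era IOPS or cloud prices moves the constant, which is Marc's point: the logic stays, the numbers change.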

Another theme Marc talked about is composing systems from components. Consider MemoryDB, which is a durable, fault-tolerant version of Redis. It uses a distributed transaction log called the journal, and treats the system as materialized views over the journal. Since the journal is an important component that other systems build upon, it is important to invest in that component in terms of ownership, performance, efficiency, and formal correctness (via TLA+, P, etc.).
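The "system as materialized views over a log" idea can be sketched in a few lines of Python. This is a toy, single-process model under my own assumptions: the names `Journal` and `KeyValueView` are hypothetical and say nothing about MemoryDB's actual journal API, durability protocol, or replication. What it shows is that if the log is the source of truth, any replica can rebuild or catch up its state by replaying entries in order.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Entry:
    seq: int
    key: str
    value: Optional[str]  # None encodes a delete


class Journal:
    """Append-only, totally ordered log: the single source of truth."""

    def __init__(self) -> None:
        self._entries: List[Entry] = []

    def append(self, key: str, value: Optional[str]) -> int:
        seq = len(self._entries)
        self._entries.append(Entry(seq, key, value))
        return seq  # in this toy, a write counts as committed once appended

    def read_from(self, seq: int) -> List[Entry]:
        return self._entries[seq:]


class KeyValueView:
    """A materialized view: state derived purely by replaying the journal."""

    def __init__(self, journal: Journal) -> None:
        self._journal = journal
        self._applied = 0  # next journal sequence number to apply
        self.state: Dict[str, str] = {}

    def catch_up(self) -> None:
        for e in self._journal.read_from(self._applied):
            if e.value is None:
                self.state.pop(e.key, None)
            else:
                self.state[e.key] = e.value
            self._applied = e.seq + 1
```

Because views only read the journal, correctness arguments (and TLA+/P models) can concentrate on the journal, which is one reason investing in that shared component pays off.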

Salesforce: Enterprise and the Cloud: Why Is It Challenging? — Pat Helland

Pat is a firehose of wisdom. If you haven't seen him talk, you can get a taste here or here.

Pat compared enterprise and cloud computing and then talked about how to reconcile the two. He said enterprise computing is a very tough problem, where durability and low-latency transactions are prioritized. Traditional enterprise computing means you are standing on bedrock, rock-solid stuff. It is a lot like driving on an F1 track. Compare this to the cloud, which is like driving on public highways: it is cheap and good most of the time, but it is not the bedrock, rock-solid stuff that traditional enterprise computing is built on. The cloud philosophy is that many small servers beat fewer large servers; that is, horizontal scalability beats vertical scalability. In the cloud, it is easy to be fast and pretty good, or to be retriable, but fast and perfect is hard. For example, updating in place and consensus/quorum are hard. As we move to the cloud, we have to work around this. Another area of work is tolerating gray failures and metastable failures.

Confluent: Consensus in Apache Kafka: from Theory to Production — Jason Gustafson and Guozhang Wang

OK, let's talk about Kafka's control plane needs. Kafka is a streaming platform. Brokers organize data as partitioned topics; the data is replicated, stored in log-structured form, and consumed. As the VLDB'15 paper discussed, Kafka circa 2013 used ZooKeeper for consensus on metadata (including leader election), and used leader-follower replication for data. ZooKeeper governed the control plane, and a single broker acting as the controller propagated metadata to the other brokers.
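Here is a simplified sketch of leader-follower replication with a high watermark, the general scheme Kafka uses for data: producers write to the partition leader, followers pull the records they are missing, and consumers only read up to the high watermark, i.e., the offsets present on all in-sync replicas. Assumptions: every follower always stays in the in-sync set (no ISR shrinking), there is no failure handling or leader epoch, and the class names are hypothetical.

```python
from typing import List


class Replica:
    def __init__(self, name: str) -> None:
        self.name = name
        self.log: List[str] = []


class Partition:
    """One topic partition with a leader and followers (all assumed in sync)."""

    def __init__(self, leader: Replica, followers: List[Replica]) -> None:
        self.leader = leader
        self.followers = followers
        self.high_watermark = 0  # offsets below this exist on every replica

    def produce(self, record: str) -> None:
        # Producers write only to the leader.
        self.leader.log.append(record)

    def follower_fetch(self, follower: Replica) -> None:
        # A follower pulls the suffix it is missing from the leader.
        follower.log.extend(self.leader.log[len(follower.log):])
        replicas = [self.leader, *self.followers]
        self.high_watermark = min(len(r.log) for r in replicas)

    def consume(self, offset: int) -> List[str]:
        # Consumers only see records that are fully replicated.
        return self.leader.log[offset:self.high_watermark]
```

Records become visible to consumers only after the slowest in-sync follower has fetched them, which is the durability/latency trade-off this design makes.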

But 2013 was before cloud domination (the presenter's words, not mine!). There was an explosion in metadata, because new features meant new metadata. So they asked how to scale Kafka clusters efficiently in the cloud. The answer involved removing ZooKeeper as a dependency. Using an event-based variant of the Raft consensus protocol, KRaft consolidates responsibility for metadata into Kafka itself rather than relying on ZooKeeper. KRaft mode also uses a new quorum controller service in Kafka, which replaces the previous controller.
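At the heart of any Raft variant is the vote rule, which ensures that a newly elected leader already has every committed entry. The Python sketch below is the textbook RequestVote check from the Raft paper, not KRaft's code; KRaft uses its own terminology (epochs and offsets) and message formats, and the `VoterState` type and `handle_vote_request` function here are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VoterState:
    current_term: int
    voted_for: Optional[str]
    last_log_term: int
    last_log_index: int


def handle_vote_request(voter: VoterState, candidate_id: str, term: int,
                        cand_last_term: int, cand_last_index: int) -> bool:
    """Grant a vote only if the term is current and the candidate's log is
    at least as up-to-date as the voter's own log."""
    if term < voter.current_term:
        return False  # stale candidate
    if term > voter.current_term:
        # A newer term resets this voter's vote.
        voter.current_term = term
        voter.voted_for = None
    up_to_date = (cand_last_term, cand_last_index) >= (
        voter.last_log_term, voter.last_log_index)
    if up_to_date and voter.voted_for in (None, candidate_id):
        voter.voted_for = candidate_id
        return True
    return False
```

Comparing `(last_term, last_index)` tuples encodes Raft's "at least as up-to-date" check: a higher last term wins, and for equal last terms the longer log wins.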

They model checked the protocol for correctness with TLA+. This doesn't prevent production incidents, of course; one example was KAFKA-15019, involving broker heartbeat timeouts. KRaft has been put in production on 2000+ clusters, and it is the default for new clusters in all regions in AWS, GCP, and Azure. Look for the VLDB'23 paper titled "Kora: the cloud native engine for Kafka" for more information.

Google: Data and AI at Google BigQuery Scale — Tomas Talius

BigQuery is the core of Google's data cloud and is used by many data professionals. It provides cross-cloud support, geospatial analytics, stream analytics, BigQuery ML, and log analytics. BigQuery is extremely disaggregated (I am pretty sure they didn't use the word in Gustavo's sense):

- Storage via Colossus

- In-memory shuffle (Mindmeld)

- Compute via Borg

- Scalable metadata via Spanner
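Since the shuffle tier is what connects disaggregated compute workers, here is a generic hash-partitioned shuffle in Python. It is a sketch under stated assumptions and not a description of BigQuery's in-memory shuffle: map workers emit key/value pairs, every pair for a given key is routed to the same partition, and a reducer aggregates each partition independently. The function names are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def stable_hash(key: str) -> int:
    # Deterministic across processes, unlike Python's built-in hash() for str.
    return zlib.crc32(key.encode("utf-8"))


def shuffle(mapper_outputs: Iterable[Iterable[Tuple[str, int]]],
            num_partitions: int) -> List[Dict[str, List[int]]]:
    """Route every (key, value) pair to partition stable_hash(key) % n."""
    partitions: List[Dict[str, List[int]]] = [
        defaultdict(list) for _ in range(num_partitions)]
    for output in mapper_outputs:
        for key, value in output:
            partitions[stable_hash(key) % num_partitions][key].append(value)
    return partitions


def reduce_sum(partition: Dict[str, List[int]]) -> Dict[str, int]:
    # Each reducer sees all values for its keys, so it can aggregate locally.
    return {key: sum(values) for key, values in partition.items()}
```

A stable hash (CRC32 here) stands in for Python's `hash`, because string hashing is randomized per process, and all workers must agree on which partition owns a key.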

The talk was a high-level feature overview and was light on technical details. It mentioned BigQuery's serverless operation and autoscaling, so you pay for what you use in small increments. It mentioned BigQuery ML, which brings ML to all SQL users and runs on Google TPUs. It also mentioned the potential of using LLMs in SQL.

Alibaba: Enhancing Database Systems with AI — by Bolin Ding and Jingren Zhou

This talk discussed how Alibaba enhances its database systems with AI. As with the Google talk, all I was able to get from this talk was that I should be excited because ML is being adopted for database services. These all look like research prototypes at this stage, and the talk didn't give a technical discussion of how they are being incorporated. So I will just list some keywords I caught, without much context around them. I apologize for the string of incomplete sentences.

Database Autonomy Service (DAS) in Alibaba Cloud databases collects statistics and recommends actions. Use cases include anomaly detection, treatment, SQL query optimization, recommending new sizes, performance diagnosis, and DevOps for cloud databases as a microservice system. Other keywords:

- Timeseries analysis, cardinality estimation.

- Learning to be a statistician: prepare training data points and feed them into an NN model (Wu et al., VLDB'22). NN + regularization as an NDV (number of distinct values) estimator. https://github.com/wurenzhi/learned_ndv_estimator

- Learned query optimizer. Lero: a learning-to-rank query optimizer (Zhu et al., VLDB'23), with a plan explorer, plan comparator, and model trainer.

- Database tuning: challenges and opportunities of configuration auto-tuning for cloud databases.

Even in this form, this sounds exciting.
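For context on the NDV estimation item, here is a classical sampling-based estimator, GEE from Charikar et al. (PODS 2000), in Python. It scales up the number of values seen exactly once in the sample and counts repeated values as is: D = sqrt(N/n) · f1 + Σ_{j≥2} f_j, where n is the sample size, N the table size, and f_j the number of distinct values appearing exactly j times in the sample. This is not the learned estimator from Wu et al.; my understanding is that learned approaches take similar sample statistics (such as the frequency profile computed here) as input, but treat that as an assumption.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def frequency_profile(sample: Sequence[Hashable]) -> Counter:
    """f[j] = number of distinct values that appear exactly j times."""
    counts = Counter(sample)
    return Counter(counts.values())


def gee_ndv(sample: Sequence[Hashable], table_rows: int) -> float:
    """GEE estimate of distinct values in a table of table_rows rows,
    assuming `sample` is a uniform random sample of those rows."""
    n = len(sample)
    f = frequency_profile(sample)
    singletons = f.get(1, 0)
    repeated = sum(count for j, count in f.items() if j >= 2)
    # Values seen once may be rare, so scale them; repeated values are counted once.
    return math.sqrt(table_rows / n) * singletons + repeated
```

The hard part, and the motivation for learning-based estimators, is that no fixed formula like this one works well across all data distributions.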

Microsoft: Microsoft Fabric - Analytics in the AI Era — Raghu Ramakrishnan

The talk was about unified analytics in the AI era. Fabric is Microsoft's intelligent data platform. (AFAIR, Fabric was also the name of a consensus system for the control plane at Microsoft. Microsoft seems to like that name and reuses it.) A use case is persona-optimized experiences in Office. The VLDB'20 paper "Polaris: The Distributed SQL Engine in Azure Synapse" looked at it.

Raghu mentioned an eclectic mix of features around Fabric, including lakehouses and warehouses, one copy of the data for all compute engines, the Delta-Parquet format, and going from a row/page-oriented data layout to a column-oriented, log-structured Parquet format. Related to the last item, he mentioned a recent paper called LST-Bench, which sounds interesting. He also talked about data co-pilots: "we can produce good enough SQL, your productivity will go off the roof". Similar to the Google and Alibaba talks, this talk advertised exciting developments, but at a high level, and I wasn't able to get a good appreciation of the systems challenges/insights in getting these to work.
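As a rough illustration of the row-to-column shift, the Python sketch below transposes row records into per-column lists and run-length encodes one column. The function names are hypothetical, and real Parquet files add row groups, pages, dictionary encoding, and much more; the point is only that a scan over one column touches just that column, and that values within a column tend to repeat and compress well.

```python
from typing import Any, Dict, List, Tuple


def rows_to_columns(rows: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Transpose row records into one list per column (schema from the first row)."""
    columns: Dict[str, List[Any]] = {name: [] for name in rows[0]} if rows else {}
    for row in rows:
        for name in columns:
            columns[name].append(row[name])
    return columns


def run_length_encode(values: List[Any]) -> List[Tuple[Any, int]]:
    """Collapse consecutive repeats into (value, run_length) pairs."""
    runs: List[List[Any]] = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return [(v, n) for v, n in runs]
```

An aggregate such as `sum(columns["amount"])` reads a single contiguous list instead of every field of every row.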

Edgar F. Codd Innovations Award: Joe Hellerstein

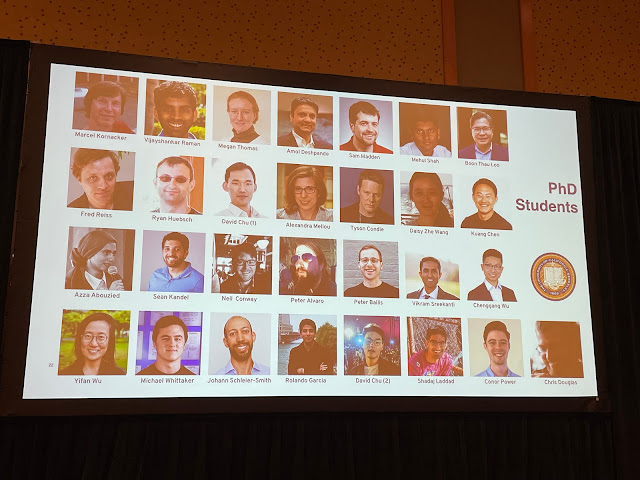

I am a big fan of Joe's and his students' work. I covered much of their work in this blog, including the CALM line of work. I was happy to see him recognized with this Innovations Award.

Joe had prepared a visually stunning presentation! He kept it on the theme of the award: we saw slide after slide of artwork showing Ted Codd in different contexts, as a robot, in the clouds, etc. I thought Joe had hired artists to prepare the slides. It turns out he prepared them all himself, with the help of generative AI.

Here is Joe talking about his research work.

The work takes a broader perspective on declarativity. Ted Codd said 1/ formalize your specifications, 2/ automate your implementations, and 3/ keep them separate. Joe applied Codd's lesson more broadly. In his words, relational databases are an artificial limitation: data is everywhere, so we applied the lessons everywhere. This resulted in work on:

- Declarative networking

- Declarative data science and machine learning

- Declarative programming for the cloud: The cloud is the biggest computer humans have assembled, and programming it in Java is an abomination. The cloud was invented to hide computing resources and how computations are executed.
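Declarative networking expressed routing protocols as Datalog-style rules, and the classic example is reachability: `reachable(X,Y) :- link(X,Y).` and `reachable(X,Y) :- link(X,Z), reachable(Z,Y).` Below is a minimal semi-naive evaluator for just those two rules in Python. It is my own sketch of Codd's "specify, then automate, and keep them separate" split (the rules are the specification, the loop is one possible implementation), not code from Joe's group's systems or the Hydro project.

```python
from typing import Set, Tuple

Edge = Tuple[str, str]


def reachable(links: Set[Edge]) -> Set[Edge]:
    """Fixpoint of:
         reachable(X, Y) :- link(X, Y).
         reachable(X, Y) :- link(X, Z), reachable(Z, Y).
    """
    result: Set[Edge] = set(links)  # rule 1
    delta: Set[Edge] = set(links)   # facts derived in the last round
    while delta:
        # Rule 2, joined only against newly derived facts (semi-naive).
        derived = {(x, y) for (x, z) in links for (z2, y) in delta if z == z2}
        delta = derived - result
        result |= delta
    return result
```

Semi-naive evaluation joins `link` only with the facts derived in the previous round (`delta`), so every iteration does new work, and the loop terminates even when the graph has cycles.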

This last part got more coverage, because this is what Joe and his colleagues are working on with the Hydro project.

Of course, the talk would not be complete without talking about generative AI. Joe speculated about the application of generative AI to data systems in two parts.

Programming: The narrow waist is declarative specifications. If you generate Rust with generative AI, the compiler complains. If you generate Python with generative AI, it runs, but you don't know what it does. This is an enormous opportunity for programming.

Interaction: Guide-decide loop, pointing at data visualization and predictive interaction (CIDR 2015).

Joe had a lengthy gratitude section in his talk. He credited many people, including Simon, Judith, Lisa, Billie, all profs, Meichun Hsu, Hamid Pirahesh, Mike Stonebraker, Jeff Naughton, Mike, Robert Wilensky, Christos, David Culler, his database colleagues at Berkeley, and his fellow data wranglers at Trifacta. He said this award is really an award for his students and postdocs. His wife and one of his daughters were also attending the ceremony.

Since Joe is such a mensch, he kept his talk short. So the organizers started a Q&A session, after which Joe got a standing ovation from close to 1000 SIGMOD/PODS participants.

Q: Why did you get into databases?

A: At Harvard, CS was not cool then; it was for boring people. But Mae had a special seminar on databases. Joe's sister in Berkeley became a Unix sysadmin at IBM. Laura Haas asked Joe, "Would you like to be a pre-doc?" And that got things rolling.

Q: How does one go from academia to being a CEO?

A: Running a company is people and project management, and there is a big learning curve. Once a startup reaches 20 people, it hits a phase-transition point: people stop trusting each other, and you need process in place. People management skills are the hardest thing you learn. The culture of the company follows the leadership, so you need to be a great role model.

Q: How did you decide what project to take on?

A: You can't get famous by being a smart guy; they are a dime a dozen. You need long-term goals, and you need to follow them. I have had only three themes that I keep banging on.

Q: What is your view of industrial landscape?

A: I have concerns about big companies reducing jobs. Cloud is a big opportunity! The IBM Research culture was beautiful; except for MS, that culture is not there in the industrial landscape. I hope we can see more of that.

Q: For the DEI (diversity, equity, inclusion) initiative, what else can we be doing?

A: I will be humble in answering the question; I don't think I have that much experience. I learned a lot, though. Institutional buy-in is absolutely critical.

I hadn't interacted with Joe in person before. Based on our email interactions, he came across as a nice, considerate person. Seeing him in person, and seeing him credit others for his work, I found him to be a genuinely nice and humble, yet very creative, person. Based on my observation, this rubs off on his students as well.
