Academic/research impact

impact (n)

1. the action of one object coming forcibly into contact with another

2. the effect or influence of one person, thing, or action, on another

Impact (in the second sense :-) is an often-talked-about, desirable thing in the academic and research world. It is especially important when promoting an associate professor to full professor.

Unfortunately, there is no well-defined description of impact, nor an objective way to quantify it. There are approximate metrics, each with significant flaws:

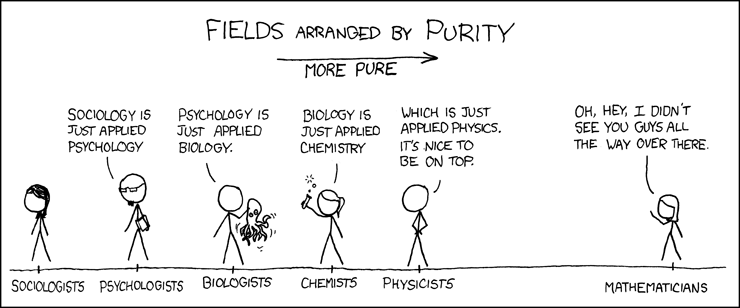

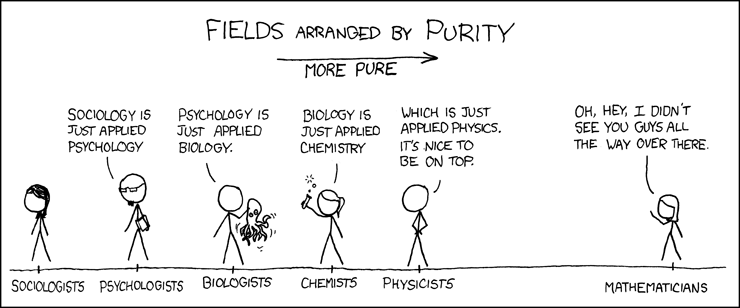

- Citation count is easily quantifiable: Google Scholar shows your citation count and h-index. However, it can be gamed. Some journals have boosted their impact factors by asking authors of accepted articles to cite other articles from the journal. Publishing in popular/crowded areas (e.g., wireless networks) can also boost citation count. It is possible to have a high citation count with little impact, and a low citation count with high impact. As a personal anecdote, much of the work I am most proud of has a low citation count, and I did not anticipate that some of my quick "low-hanging-fruit" papers would gather a lot of citations.

- Name recognition/identity is not quantifiable and varies depending on who you ask. It can also be gamed by owning a very tiny corner of a field without having much impact.

- Number of PhD students graduated may not have a strong correlation with impact.

- Best paper awards are great, but let's be cynical here. The awards show that you know how to write good papers; one may even argue that you are a crowd-pleaser, too much into groupthink/community consensus. Truly innovative papers fail to get best paper awards; heck, they get rejected for many years. They only get test-of-time and hall-of-fame awards in retrospect.

- Grants are nice, but being cynical, you can argue that what they truly measure is how good you are at telling the reviewers what they want to hear.

OK, enough of being cynical. Of course these metrics capture a lot of value and approximate impact in most cases. But my point is, it is easy to fake impact-potential. It is easy to play the game and improve those metrics, without having impact.
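To make the gap between a metric and impact concrete, here is a minimal Python sketch of the h-index (the largest h such that h of your papers have at least h citations each). The `h_index` helper and both citation lists are hypothetical, made up purely for illustration; this is just the standard definition applied to toy data, not a claim about how any indexing service computes it internally.

```python
def h_index(citations):
    """Return the largest h such that at least h papers have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical citation counts, invented purely for illustration.
one_landmark_paper = [900, 3, 2, 1]                       # one hugely cited paper
many_modest_papers = [12, 11, 10, 10, 9, 9, 8, 8, 8, 8]   # steady output in a crowded area

print(sum(one_landmark_paper), h_index(one_landmark_paper))   # prints: 906 2
print(sum(many_modest_papers), h_index(many_modest_papers))   # prints: 93 8
```

With these made-up numbers, the single-landmark profile has roughly ten times the citations but the lower h-index. The two metrics disagree about who "wins", and neither one says anything about actual impact.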

Recognizing impact

OK, it may be hard to truly recognize impact except in retrospect. I will now argue that even in retrospect, there is contention in recognizing impact. What are the dimensions of impact, and which dimensions are more important? There is disagreement even about that.

I recently performed a series of Twitter polls on this. Here are the results with my commentary.

Poll: Which has more academic/research impact? Improving a tiny fraction of lives in a huge way, or improving a huge fraction of lives in a tiny way. (Murat Demirbas, @muratdemirbas, June 16, 2018)

Although the majority of votes favored improving a huge fraction of lives by a tiny amount over improving a tiny fraction of lives by a huge amount, it is easy to make a case for the latter as well. In fact, I was thinking that would be the winning option; I would have voted for it. Many web services improve a huge number of lives in a tiny way, but how much does that matter? How badly off would you be with a little bit of inconvenience? On the other hand, a huge improvement in a tiny fraction of people's lives (disabled, poor, sick, etc.) is a big deal for them. Isn't that more impactful? (I tear up watching these videos every time. Even the colorblind seeing color for the first time... It is a big deal for them.)

Poll: Which has more impact? (Second option = big "potential" impact down the line) (Murat Demirbas, @muratdemirbas, June 16, 2018)

This poll returned an even split between a small current improvement and a bigger potential improvement. I would have voted for big improvement potential: the power of the transformational is orders of magnitude greater than that of the incremental. However, I don't deny that small incremental improvements may also form a critical mass and stepping stones, and can lead to transformational improvements as well.

Poll: Which has more impact? (Murat Demirbas, @muratdemirbas, June 16, 2018)

Poll: Which solution has more impact? (Murat Demirbas, @muratdemirbas, June 16, 2018)

These two showed a strong preference for novelty over completeness. It is possible to make a case the other way as well. Let's say the first/simple solution gets stuck in 1% of cases and the improved solution covers that. This may make or break the practicality of the solution at large scale. Corner cases and performance problems are deal breakers at large scale.

Poll: Which has more impact? (Murat Demirbas, @muratdemirbas, June 16, 2018)

This surprised me in a good way. There was great appreciation that defining/identifying a problem is very important, and that with that out of the way the solution becomes possible and often easy.

Poll: Which has more impact? (Murat Demirbas, @muratdemirbas, June 16, 2018)

This also showed there is good appreciation of theory that enables later practical applications.

Poll: Which has more impact? (Murat Demirbas, @muratdemirbas, June 17, 2018)

This one was a bit disappointing for me. Atomic clocks enabled GPS, so I think they had more impact than GPS. Atomic clocks are a general tool and enable other applications: long-baseline interferometry in radio astronomy, detecting gravitational waves, and even georeplicated distributed databases.

DARPA has been boasting about its funding atomic clocks research as a use-inspired basic research project. At the time of funding, the applications of precise clocks was unclear, but DARPA funded physicists to work on it. And once atomic clocks were built, applications started to emerge. Around 2000-2010 DARPA has been mentioning this in most of their presentations (I haven't been to DARPA presentations recently). DARPA has been proud of this, and wanted to solicit other work like this. These presentations included this diagram and urged for other use-inspired basic research.

There is something to be said for purity/abstractness. Abstractness can imply generality and may mean more impact. On the other hand, there may also be a phase transition in terms of impact/practicality in the abstractness spectrum. Sometimes things get too abstract and abstruse. But it may be hard to differentiate when that happens and draw a line. Maybe the solution is use-inspired research/theory. It may help to have some practical relevance considerations while doing theory. It may help to do theory that can help as a tool for enabling other work.

Is it possible to optimize/plan for impact?

After my Twitter poll frenzy, a friend forwarded me this talk: Why Greatness Cannot Be Planned: The Myth of the Objective. I highly recommend the talk. While I don't agree with all the conclusions there, it has merit and gets several things right.

The talk (and I guess the book as well) argues that having research objectives does not help in achieving them and can even be harmful, because the stepping stones don't resemble the end objective. So the advice given is: don't plan for impact/objectives, just do interesting stuff!

That has also been my conclusion after thinking about research impact for many years in the back of my mind. Instead of trying to chase impact, it is more impactful to do interesting work that you enjoy, and to do what only you can do. I wrote this in 2014:

So publishing less is bad, and publishing more does not guarantee you make an impact. Then what is a good heuristic to adopt to be useful and to have an impact?

I suggest that the rule is to "be daring, original, and bold". We certainly need more of that in academia. Academia moves more like a herd; there are flocks here and there mowing the grass together. And staying with the herd is a conservative strategy. That way you avoid becoming an outlier, and it is easier to publish and get funded because you don't need to justify/defend your research direction; it is already accepted as a safe research direction by your community. (NSF will ask to see intellectual novelty in proposals, but the NSF panel reviewers will be unhappy if a proposal is out in left field and is attempting to break new ground. They will find a way to reject the proposal unless a panelist champions the proposal and challenges the other reviewers about their concocted reasons for rejecting. As a result, it is rare to see a proposal that suggests truly original/interesting ideas and directions.)

To break new ground, we need more mavericks that leave the herd and explore new territory in the jungle. Looking at the most influential names in my field of study, distributed systems, I see that Lamport, Dijkstra, Lynch, Liskov were all black sheep.

The interesting thing is, to be the black sheep, you don't need to put on an act. If you have a no-bullshit attitude about research and don't take fashionable research ideas/directions at face value, you will soon become the black sheep. But, as Feynman used to say, "What do you care what other people think?" Ignore everybody, work on what you think is the right thing.

Word of caution!! While being different and being a black sheep can speed up your way to the top, it works only after you have paid the price. It is a very steep climb up there, and you need many grueling years of preparation.

Of course, doing stuff that is interesting is a very subjective thing. That may be OK when choosing what to work on. But evaluating "interesting/novelty" is too subjective. It brings us full circle to admitting there is no well-defined description or objective way to quantify impact. As I speculate in MAD question 3, I believe we deserve better metrics and more research into impact metrics.

MAD questions

1. What is the most impactful research you know? What are its prominent characteristics? Is it an enabling tool for other work? Is it a simple idea (paradigm shift)? Is it a synthesis of other ideas? Is it a tour-de-force complex technical solution?

Poll: What is the most impactful research you know? (Add as reply.) What are its characteristics? (Murat Demirbas, @muratdemirbas, June 19, 2018)

You can vote/comment on this by Wednesday June 20.

2. What heuristics do you employ for increasing impact in your research?

3. In team sports, we select as MVP the player with a high profile/visibility, but in reality the real MVP could have a low profile in terms of scoring and the metrics tracked, and still provide more value. When I was a child, assists were not tracked in soccer; maybe it was too much of a hassle. What about a defender who played strategically and blocked attacks before they developed? How do we credit that? Moneyball (Michael Lewis) shed some light on this.

The central premise of Moneyball is that the collective wisdom of baseball insiders (including players, managers, coaches, scouts, and the front office) over the past century is subjective and often flawed. Statistics such as stolen bases, runs batted in, and batting average, typically used to gauge players, are relics of a 19th-century view of the game and the statistics available at that time. Before sabermetrics was introduced to baseball, teams were dependent on the skills of their scouts to find and evaluate players. Scouts are those who are experienced in the sport, usually having been involved as players or coaches. The book argues that the Oakland A's' front office took advantage of more analytical gauges of player performance to field a team that could better compete against richer competitors in Major League Baseball (MLB).

Coming back to research/academic impact, don't we need/deserve a better, more informed approach for tracking and identifying impact? Are there severely underrated works/researchers that have had huge impact that went unnoticed?
