Holistic Configuration Management at Facebook

Move fast and break things

That was Facebook's famous mantra for developers. Facebook believes in getting early feedback and iterating rapidly, so it releases software early and frequently: three times a day for frontend code, three times a day for backend code. I am amazed that Facebook is able to maintain such an agile deployment process at that scale. I have heard of other software companies, even relatively young ones, developing problems with agility, even to the point that deploying a trivial app would take a couple of months due to reviews, filing tickets, routing, permissions, etc.

Of course, when deploying that frequently at that scale, you need discipline and good processes in order to prevent chaos. At the F8 developer conference in 2014, Zuckerberg announced the new Facebook motto: "Move Fast With Stable Infra."

I think the configerator tool discussed in this paper is a big part of the "Stable Infra". (By the way, why is it configerator but not configurator? Another Facebook peculiarity like the spelling of Übertrace?)

Here is a link to the paper in SOSP'15 proceedings.

Here is a link to the conference presentation video.

Configuration management

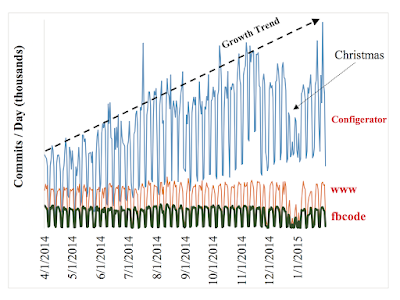

What is even more surprising than daily Facebook code deployment is this: Facebook's various configurations are changed even more frequently, currently thousands of times a day. And hold fast: every single engineer can make live configuration changes! This is certainly exceptional, especially considering that even a minor mistake could potentially cause a site-wide outage (due to complex interdependencies). How is this possible without incurring chaos?

The answer is: discipline sets you free. By being disciplined about the deployment process, and by having built the configerator, Facebook lowers the risks of deployments and can give its developers the freedom to deploy frequently.

OK, before reviewing this cool system, configerator, let's get this clarified first: what does configuration management involve, and where is it needed? It turns out it is essential for a large and diverse set of systems at Facebook. These include: gating new product features, conducting experiments (A/B tests), performing application-level traffic control, performing topology setup and load balancing at TAO, performing monitoring alerts/remediation, updating machine learning models (which vary from KBs to GBs), and controlling applications' behaviors (e.g., how much memory is reserved for caching, how many writes to batch before writing to the disk, how much data to prefetch on a read).

Essentially, configuration management provides the knobs that enable tuning, adjusting, and controlling Facebook's systems. No wonder configuration changes keep growing in frequency and outdo code changes by orders of magnitude.

Configuration as code approach

The configerator philosophy is to treat configuration as code, that is, to compile and generate configs from high-level source code. Configerator stores the config programs and the generated configs in git version control.

There can be complex dependencies across systems and services at Facebook: after one subsystem/service config is updated to enable a new feature, the configs of all other systems might need to be updated accordingly. By taking a configuration as code approach, configerator automatically extracts dependencies from source code without the need to manually edit a makefile. Furthermore, Configerator provides many other foundational functions, including version control, authoring, code review, automated canary testing, and config distribution. We will review these next as part of the Configerator architecture discussion.
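To make the "configuration as code" idea concrete, here is a minimal sketch in Python. It is not Configerator's actual API: the function names (`build_cache_config`, `validate_cache_config`, `compile_config`), the config fields, the 512 GB budget, and the choice of JSON as the generated format are all assumptions made for illustration. It only shows the shape of the idea: a small program computes the config, a developer-provided validator checks an invariant, and the generated artifact is what would get checked in and distributed.

```python
import json

# Hypothetical config "source program": plain code that computes the config.
def build_cache_config(num_shards: int, memory_gb_per_shard: int) -> dict:
    return {
        "num_shards": num_shards,
        "cache_memory_gb": num_shards * memory_gb_per_shard,
        "write_batch_size": 64,
    }

# Hypothetical developer-provided validator: invariants checked at compile time.
def validate_cache_config(config: dict) -> None:
    if config["num_shards"] <= 0:
        raise ValueError("num_shards must be positive")
    if config["cache_memory_gb"] > 512:  # illustrative budget, not from the paper
        raise ValueError("cache_memory_gb exceeds the 512 GB budget")

def compile_config(builder, validator, **params) -> str:
    """Run the config program, validate the result, and emit the generated config."""
    config = builder(**params)
    validator(config)  # a failing invariant stops the change before review
    return json.dumps(config, indent=2, sort_keys=True)

generated = compile_config(
    build_cache_config, validate_cache_config,
    num_shards=8, memory_gb_per_shard=16,
)
```

Because the derived value `cache_memory_gb` is computed rather than typed by hand, changing `num_shards` cannot leave it stale, which is the kind of dependency tracking the approach is meant to buy.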

While configerator is the main tool, there are other configuration support tools in the suite.

"Gatekeeper controls the rollouts of new product features. Moreover, it can also run A/B testing experiments to find the best config parameters. In addition to Gatekeeper, Facebook has other A/B testing tools built on top of Configerator, but we omit them in this paper due to the space limitation.

"PackageVessel uses peer-to-peer file transfer to assist the distribution of large configs (e.g., GBs of machine learning models), without sacrificing the consistency guarantee.

"Sitevars is a shim layer that provides an easy-to-use configuration API for the frontend PHP products.

"MobileConfig manages mobile apps' configs on Android and iOS, and bridges them to the backend systems such as Configerator and Gatekeeper. MobileConfig is not bridged to Sitevars because Sitevars is for PHP only. MobileConfig is not bridged to PackageVessel because currently there is no need to transfer very large configs to mobile devices."

The P2P file transfer mentioned as part of PackageVessel is none other than BitTorrent. Yes, BitTorrent finds many applications in the datacenter. See, for instance, this example from Twitter in 2010.

The Configerator architecture

The Configerator application is designed to defend against configuration errors in several phases. "First, the configuration compiler automatically runs developer-provided validators to verify invariants defined for configs. Second, a config change is treated the same as a code change and goes through the same rigorous code review process. Third, a config change that affects the frontend products automatically goes through continuous integration tests in a sandbox. Lastly, the automated canary testing tool rolls out a config change to production in a staged fashion, monitors the system health, and rolls back automatically in case of problems."

I think this architecture is actually quite simple, even though it may look complex. Both control and data flow in the same direction: top-down. There are no cyclic dependencies, which can make recovery hard. This is a soft-state architecture: new and correct information pushed from the top will clean out old and bad information.

Canary testing: The proof is in the pudding

The paper has this to say on their canary testing:

"The canary service automatically tests a new config on a subset of production machines that serve live traffic. It complements manual testing and automated integration tests. Manual testing can execute tests that are hard to automate, but may miss config errors due to oversight or shortcut under time pressure. Continuous integration tests in a sandbox can have broad coverage, but may miss config errors due to the small-scale setup or other environment differences. A config is associated with a canary spec that describes how to automate testing the config in production. The spec defines multiple testing phases. For example, in phase 1, test on 20 servers; in phase 2, test in a full cluster with thousands of servers. For each phase, it specifies the testing target servers, the healthcheck metrics, and the predicates that decide whether the test passes or fails. For example, the click-through rate (CTR) collected from the servers using the new config should not be more than x% lower than the CTR collected from the servers still using the old config."

Canary testing is an end-to-end test, and it somewhat reduces the need to build more exhaustive static tests on configs. Of course, the validation, review, and sandbox tests are important precautions to make sure the config is sane before it is tried in a small amount in production. However, given that Facebook already has canary testing, it serves as a good final proof of the correctness of the config, and this somewhat obviates the need for heavyweight correctness checking mechanisms. The paper gives a couple of examples of problems caught during canary testing.
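The canary spec described in the quote (phases, target servers, healthcheck metrics, pass/fail predicates) can be sketched as a small data structure. This is only an illustration under my own assumptions: `CanaryPhase`, `ctr_not_much_lower`, `run_canary`, the 2000-server cluster size, and the 5% threshold are hypothetical names and numbers, and the metrics collector is faked. The paper does not show the real spec format.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Metrics = Dict[str, float]

@dataclass
class CanaryPhase:
    name: str
    num_servers: int
    # Predicate over (metrics from servers on the new config,
    #                 metrics from servers still on the old config).
    predicate: Callable[[Metrics, Metrics], bool]

def ctr_not_much_lower(max_drop_pct: float) -> Callable[[Metrics, Metrics], bool]:
    """New-config CTR must not be more than max_drop_pct percent below old-config CTR."""
    def check(new: Metrics, old: Metrics) -> bool:
        return new["ctr"] >= old["ctr"] * (1 - max_drop_pct / 100)
    return check

def run_canary(
    phases: List[CanaryPhase],
    collect_metrics: Callable[[CanaryPhase], Tuple[Metrics, Metrics]],
) -> bool:
    """Run phases in order; stop at the first failing predicate."""
    for phase in phases:
        new, old = collect_metrics(phase)
        if not phase.predicate(new, old):
            return False  # a real system would roll the config back here
    return True

spec = [
    CanaryPhase("phase 1", num_servers=20, predicate=ctr_not_much_lower(5.0)),
    CanaryPhase("phase 2", num_servers=2000, predicate=ctr_not_much_lower(5.0)),
]

# Fake collectors standing in for real monitoring data.
healthy = run_canary(spec, lambda phase: ({"ctr": 0.0495}, {"ctr": 0.05}))
unhealthy = run_canary(spec, lambda phase: ({"ctr": 0.04}, {"ctr": 0.05}))
```

Note that a predicate like this only sees the metrics it is given, which is exactly why slowly manifesting problems or effects on other services can slip through.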

On the other hand, the paper does not make it clear how conclusive/exhaustive the canary tests are. What if canary tests don't catch slowly manifesting errors, like memory leaks? Also, how does Facebook detect whether there is an abnormality during a canary test? Yes, Facebook has monitoring tools (ubertrace and mystery machine), but are they sufficient for abnormality detection and subtle bug detection? Maybe we don't see an adverse effect of the configuration change for this application, but what if it adversely affected other applications or backend services? It seems that exhaustive monitoring, log collection, and log analysis may be needed to detect more subtle errors.

Performance of the Configerator

Here are approximate latencies for configerator phases:

"When an engineer saves a config change, it takes about ten minutes to go through automated canary tests. This long testing time is needed in order to reliably determine whether the application is healthy under the new config. After canary tests, how long does it take to commit the change and propagate it to all servers subscribing to the config? This latency can be broken down into three parts: 1) It takes about 5 seconds to commit the change into the shared git repository, because git is slow on a large repository; 2) The git tailer (see Figure 3) takes about 5 seconds to fetch config changes from the shared git repository; 3) The git tailer writes the change to Zeus, which propagates the change to all subscribing servers through a distribution tree. The last step takes about 4.5 seconds to reach hundreds of thousands of servers distributed across multiple continents."
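Adding up the numbers quoted above gives a rough end-to-end picture. The small calculation below assumes the phases run strictly one after another and uses the approximate figures from the quote; the variable names are mine.

```python
# Approximate per-phase latencies from the paper's description, in seconds.
canary_s = 10 * 60          # automated canary tests: about ten minutes
commit_s = 5.0              # commit into the shared git repository
tailer_fetch_s = 5.0        # git tailer fetches the change
zeus_propagation_s = 4.5    # Zeus distribution tree reaches all subscribers

# Commit-to-all-servers latency (after the canary has passed).
propagation_total_s = commit_s + tailer_fetch_s + zeus_propagation_s

# Save-to-all-servers latency, assuming the phases are sequential.
end_to_end_s = canary_s + propagation_total_s

git_share_of_propagation = (commit_s + tailer_fetch_s) / propagation_total_s
canary_share_of_total = canary_s / end_to_end_s
```

Under these assumptions, propagation takes about 14.5 seconds, of which roughly two thirds is spent in git, while the canary accounts for the overwhelming majority of the roughly ten and a quarter minutes from save to full rollout.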

This figure from the paper shows that git is the bottleneck for configuration distribution. "The commit throughput is not scalable with respect to the repository size, because the execution time of many git operations increases with the number of files in the repository and the depth of the git history. Configerator is in the process of migration to multiple smaller git repositories that collectively serve a partitioned global name space."

Where is the research?

Configerator is an impressive engineering effort, and I want to focus on the important research takeaways from it. Going forward, what are the core research ideas and findings? How can we push the envelope for future-facing improvements?

How consistent should the configuration rollouts be?

There can be couplings/conflicts between code and configuration. Facebook solves this cleverly: they deploy code first, much earlier than the config, and enable the hidden/latent code later with the config change. There can also be couplings/conflicts between old and new configs. A configuration change arrives at production servers at different times, albeit within 5-10 seconds of each other. Would it cause problems to have some servers run the old configuration and some the new? Facebook punts this responsibility to the developers: they need to make sure that the new config can coexist with the old config in peace. After all, they use canary testing, where a fraction of machines use the new config and the rest use the old one. So, in sum, Facebook does not try to have a strongly consistent reset to the new config. I don't know the details of their systems, but for backend servers, config changes may need stronger consistency than that.
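The code-first, config-later pattern can be illustrated with a tiny sketch. Everything here is hypothetical (the `new_ranking_enabled` flag, the `rank` function, the defaults table); it only shows how code can ship with a latent path that stays dormant until a config change turns it on, and how falling back to defaults lets servers with old and new configs coexist.

```python
# Defaults compiled into the code: the new path ships disabled.
DEFAULTS = {"new_ranking_enabled": False}

def rank(items, config):
    """Old behavior unless the (hypothetical) flag is present and true."""
    effective = {**DEFAULTS, **config}  # old configs simply lack the new key
    if effective["new_ranking_enabled"]:
        return sorted(items, reverse=True)  # latent code path, enabled by config
    return sorted(items)                    # existing behavior

old_config = {}                              # a server that has not received the change yet
new_config = {"new_ranking_enabled": True}   # a server that has

before = rank([2, 1, 3], old_config)
after = rank([2, 1, 3], new_config)
```

During the seconds when only some servers have the new config, both branches serve traffic at once, so the two behaviors must be safe to run side by side.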

Push versus Pull debate.

The paper claims push is more advantageous than pull in the datacenter for config deployment. I am not convinced because the arguments do not look strong.

"Configerator uses the push model. How does it compare with the pull model? The biggest advantage of the pull model is its simplicity in implementation, because the server side can be stateless, without storing any hard state about individual clients, e.g., the set of configs needed by each client (note that different machines may run different applications and hence need different configs). However, the pull model is less efficient for two reasons. First, some polls return no new data and hence are pure overhead. It is hard to determine the optimal poll frequency. Second, since the server side is stateless, the client has to include in each poll the full list of configs needed by the client, which is not scalable as the number of configs grows. In our environment, many servers need tens of thousands of configs to run. We opt for the push model in our environment."

This may be worth revisiting and investigating in more detail. Pull is simple and stateless, as they also mention, and it is unclear why it couldn't be adopted.
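To make the two models in this debate concrete, here is a toy comparison. It is a sketch under my own assumptions, not how Zeus or Configerator is implemented: `PullServer` and `PushServer` are hypothetical classes, versions are simple counters, and "pushing" is just invoking an in-process callback.

```python
from collections import defaultdict

class PullServer:
    """Stateless about clients: every poll must carry the client's full config list."""
    def __init__(self):
        self.configs = {}  # name -> (version, value)

    def update(self, name, value):
        version = self.configs.get(name, (0, None))[0] + 1
        self.configs[name] = (version, value)

    def poll(self, known_versions):
        # known_versions: {config name: version the client already has}
        return {
            name: self.configs[name]
            for name, version in known_versions.items()
            if name in self.configs and self.configs[name][0] > version
        }

class PushServer:
    """Stateful: remembers which callbacks subscribed to which config."""
    def __init__(self):
        self.subscribers = defaultdict(list)  # name -> list of callbacks

    def subscribe(self, name, callback):
        self.subscribers[name].append(callback)

    def update(self, name, value):
        for callback in self.subscribers[name]:
            callback(name, value)

names = [f"cfg{i}" for i in range(10_000)]

# Pull: the request itself lists all 10,000 configs, even when nothing changed.
pull = PullServer()
for n in names:
    pull.update(n, "v1")
known = {n: 1 for n in names}
empty_poll = pull.poll(known)   # nothing new: pure overhead
pull.update("cfg42", "v2")
changed = pull.poll(known)

# Push: the server holds the subscription state and sends only the change.
push = PushServer()
received = []
for n in names:
    push.subscribe(n, lambda name, value: received.append((name, value)))
push.update("cfg42", "v2")
```

The sketch shows the trade-off the paper describes: pull keeps the server simple but pays for large and often empty polls, while push sends one small message per change at the cost of per-client state on the server. Whether that cost is decisive is the part I find unconvincing.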

How do we extend to WAN?

All coordination mentioned is single master (i.e., single producer/writer). Would there be a need for a multi-master solution, with a master at each region/continent that can start a config update? Then the system would need to deal with concurrent and potentially conflicting configuration changes. However, given that canary testing takes on the order of minutes, there would not be a practical need for multi-master deployment in the near future.

Reviews of other Facebook papers

Facebook's Mystery Machine: End-to-end Performance Analysis of Large-scale Internet Services

Facebook's software architecture

Scaling Memcache at Facebook

Finding a Needle in Haystack: Facebook's Photo Storage
