Finding a Needle in Haystack: Facebook's Photo Storage

This paper appeared in OSDI'10. The title "Finding a needle in Haystack" is a bit of an over-dramatization :-) Finding a needle in a haystack becomes straightforward if you can memorize the location of each needle. And that is exactly what Facebook's Haystack system does.

Haystack is an object store for sharing photos on Facebook where data is written once, read often, never modified, and rarely deleted. The Haystack storage system was designed because traditional filesystems perform poorly under the Facebook workload. With network attached storage (NAS) appliances mounted over NFS, several disk operations were necessary to read a single photo: one (or typically more) to translate the filename to an inode number, another to read the inode from disk, and a final one to read the file itself. While insignificant on a small scale, multiplied over billions of photos and petabytes of data, accessing metadata becomes the throughput bottleneck.

Haystack aims to achieve the following 4 goals. High throughput and low latency are achieved by ideas stemming from Log-structured Filesystem work (keeping all metadata in main memory, and minimizing the disk accesses per read and write). Fault-tolerance is achieved by replicating each photo in geographically distinct locations. Cost-effectiveness is achieved by custom designing the filesystem for the photo storing application and trimming all the fat, which results in each usable terabyte costing ~28% less in the new system. Finally, simplicity is achieved by restricting the design to well-known straightforward techniques.

The simplicity of the presentation and design of the system is refreshing. In fact the design is so simple that one may argue there are not many novel ideas here. Yet, the paper is still very interesting because it is from Facebook, a giant. Facebook currently stores over 260 billion images, which translates to over 20 petabytes of data. Users upload one billion new photos (~60 terabytes) each week and Facebook serves over one million images per second at peak.
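To get a feel for these numbers, here is a quick back-of-the-envelope calculation. It uses only the figures quoted above and assumes decimal units (1 TB = 10^12 bytes, 1 PB = 10^15 bytes); since the quoted totals are "over" figures, the results are rough estimates rather than numbers from the paper.

```python
# Rough estimates derived only from the figures quoted in the text.
# Assumption: decimal units (1 TB = 10**12 bytes, 1 PB = 10**15 bytes).
TOTAL_IMAGES = 260e9      # images stored
TOTAL_BYTES = 20e15       # 20 petabytes
WEEKLY_UPLOADS = 1e9      # new photos per week
WEEKLY_BYTES = 60e12      # ~60 terabytes per week
SECONDS_PER_WEEK = 7 * 24 * 3600

avg_stored_kb = TOTAL_BYTES / TOTAL_IMAGES / 1e3
avg_upload_kb = WEEKLY_BYTES / WEEKLY_UPLOADS / 1e3
uploads_per_sec = WEEKLY_UPLOADS / SECONDS_PER_WEEK

print(f"average stored image: ~{avg_stored_kb:.0f} KB")       # ~77 KB
print(f"average weekly upload: ~{avg_upload_kb:.0f} KB")      # ~60 KB
print(f"average upload rate: ~{uploads_per_sec:.0f} per sec")  # ~1653 per sec
```

The objects are small (tens of kilobytes each) and reads outnumber uploads by orders of magnitude, which is why the per-read metadata cost matters so much.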

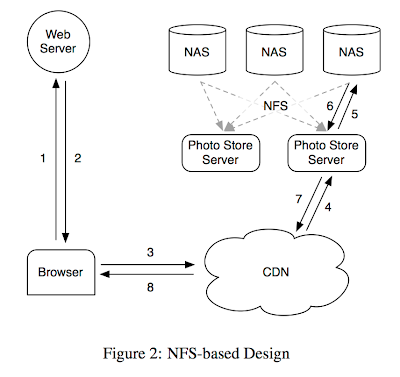

Previous NFS-based design

Figure 2 gives an overview of the photo access process of the previous NFS-based design used at Facebook. The user's browser first sends an HTTP request to the web server. For each photo the web server constructs a URL directing the browser to a location from which to download the data. If the content delivery network (CDN) has the image cached then the CDN responds immediately with the photo. Otherwise the CDN contacts Facebook's photo store server using the URL. The photo store server extracts from the URL the volume and full path to the file, reads the data over NFS, and returns the result to the CDN.

The problem with this previous design is that at least 3 disk operations were needed to fetch an image: one to read directory metadata into memory, one to load the inode into memory, and one to read the file contents. In fact, before the directory sizes were optimized, the directory blockmaps were too large to be cached by the NAS device, and more than 10 disk operations might be needed to fetch a single image.

Unfortunately, caching at the CDN or caching filehandles returned by NAS devices did not provide much relief to Facebook due to the long tail problem. While caches can effectively serve the hottest photos (profile pictures and photos that have been recently uploaded), a social networking site like Facebook also generates a large number of requests for less popular (often older) content. Requests from the long tail account for a significant amount of the traffic, and these requests all miss the cache and need to access the photo store servers.

Haystack design

To resolve the disk access bottleneck in the NFS-based approach, the Haystack system keeps all the metadata in main memory. In order to fit the metadata in main memory, Haystack dramatically reduces the memory used for filesystem metadata. Since storing a single photo per file results in more filesystem metadata than could be reasonably cached, Haystack takes a straightforward approach: it stores millions of photos in a single file and therefore maintains very large files. (See the second paragraph below for how this works.)

Figure 3 shows the architecture of the Haystack system. It consists of 3 core components: the Haystack Store, Haystack Directory, and Haystack Cache. The Store's capacity is organized into physical volumes. Physical volumes are further grouped into logical volumes. When Haystack stores a photo on a logical volume, the photo is written to all corresponding physical volumes for redundancy. The Directory maintains the logical to physical mapping along with other application metadata, such as the logical volume where each photo resides and the logical volumes with free space. The Cache functions as an internal CDN, another level of caching to back up the CDN.
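The Directory's bookkeeping described above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the class and method names are hypothetical, the replica count is arbitrary, and picking the smallest writable volume id is a stand-in for whatever placement policy the real Directory uses.

```python
from dataclasses import dataclass, field


@dataclass
class Directory:
    """Toy stand-in for the Haystack Directory (all names are hypothetical)."""
    # logical volume id -> ids of the physical volumes that replicate it
    logical_to_physical: dict = field(default_factory=dict)
    # logical volumes that still have free space
    writable: set = field(default_factory=set)
    # photo id -> logical volume id where the photo resides
    photo_location: dict = field(default_factory=dict)

    def add_logical_volume(self, logical_id, physical_ids):
        self.logical_to_physical[logical_id] = list(physical_ids)
        self.writable.add(logical_id)

    def mark_full(self, logical_id):
        self.writable.discard(logical_id)

    def assign(self, photo_id):
        """Pick a logical volume with free space and record the placement."""
        if not self.writable:
            raise RuntimeError("no logical volume with free space")
        logical_id = min(self.writable)  # assumption: deterministic toy policy
        self.photo_location[photo_id] = logical_id
        # The caller must write the photo to every physical volume returned.
        return logical_id, self.logical_to_physical[logical_id]

    def locate(self, photo_id):
        """Return the logical volume and its replicas for a stored photo."""
        logical_id = self.photo_location[photo_id]
        return logical_id, self.logical_to_physical[logical_id]


directory = Directory()
directory.add_logical_volume(1, ["store-a/pv-1", "store-b/pv-1"])
logical_id, replicas = directory.assign(photo_id=42)
print(logical_id, replicas)
```

A write is complete only once the photo has been appended to each physical volume in `replicas`; a read can be served from any one of them.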

When the browser requests a photo, the webserver uses the Directory to construct a URL for the photo, which includes the physical as well as logical volume information. Each Store machine manages multiple physical volumes. Each volume holds millions of photos. A physical volume is simply a very large file (100 GB) saved as "/hay/haystack-logical volumeID". Haystack is a log-structured append-only object store. A Store machine can access a photo quickly using only the id of the corresponding logical volume and the file offset at which the photo resides. This knowledge is the keystone of the Haystack design: retrieving the filename, offset, and size for a particular photo without needing disk operations. A Store machine keeps open file descriptors for each physical volume that it manages and also an in-memory mapping of photo ids to the filesystem metadata (i.e., file, offset and size in bytes) critical for retrieving that photo. Each photo stored in the file is called a needle.
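To make the keystone idea concrete, here is a minimal sketch of a physical volume as one append-only file plus an in-memory index from photo id to (offset, size). The class name and layout are my own simplification: it ignores deletion, any on-disk needle framing, and recovery of the index after a restart.

```python
import os
import tempfile


class PhysicalVolume:
    """Toy append-only volume: one big file plus an in-memory index."""

    def __init__(self, path):
        # The descriptor stays open for the lifetime of the volume.
        self.fd = os.open(path, os.O_RDWR | os.O_CREAT | os.O_APPEND, 0o644)
        self.index = {}                        # photo id -> (offset, size)
        self.end = os.fstat(self.fd).st_size   # next append position

    def put(self, photo_id, data):
        """Append a needle and remember where it landed."""
        offset = self.end
        written = os.write(self.fd, data)      # O_APPEND: always goes to the end
        if written != len(data):
            raise IOError("short write")
        self.end += written
        self.index[photo_id] = (offset, written)
        return offset

    def get(self, photo_id):
        """Look up the location in memory, then do one positioned read."""
        offset, size = self.index[photo_id]
        return os.pread(self.fd, size, offset)

    def close(self):
        os.close(self.fd)


with tempfile.TemporaryDirectory() as tmp:
    volume = PhysicalVolume(os.path.join(tmp, "volume.dat"))
    volume.put(1, b"first photo bytes")
    volume.put(2, b"second photo bytes")
    print(volume.get(2))
    volume.close()
```

The lookup in `get` touches only memory, so the single `os.pread` is the only call that may need to go to disk. That is the whole trick.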

A Store machine is a 2U node with twelve 1TB SATA drives in RAID-6 (as a single volume). A single 10TB XFS filesystem (an extent-based file system) runs across these on each Store machine. XFS has two main advantages for Haystack. First, the blockmaps for several contiguous large files can be small enough to be stored in main memory. Second, XFS provides efficient file preallocation, mitigating fragmentation and reining in how large block maps can grow.
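As an illustration of what preallocation looks like from user space, the sketch below reserves space for a volume file up front. The paper summary above does not say which call Haystack uses; `os.posix_fallocate` is my assumption of one way to do it from Python on a Unix system, and the helper name and the small size are hypothetical.

```python
import os
import tempfile


def preallocate(path, size_bytes):
    """Reserve size_bytes for path up front and return the resulting file size."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        try:
            # Ask the filesystem to allocate the blocks now, so that later
            # appends do not have to grow the file piece by piece.
            os.posix_fallocate(fd, 0, size_bytes)
        except (AttributeError, OSError):
            # Fallback where posix_fallocate is missing or unsupported:
            # this only sets the file length and does not reserve blocks.
            os.ftruncate(fd, size_bytes)
        return os.fstat(fd).st_size
    finally:
        os.close(fd)


with tempfile.TemporaryDirectory() as tmp:
    # 1 MiB stands in for the ~100 GB volume files mentioned above.
    print(preallocate(os.path.join(tmp, "volume.dat"), 1 << 20))
```

On an extent-based filesystem, a file allocated in one go can be described by a handful of extents, which is what keeps the block map small.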

My remaining questions

Although the paper is written clearly, there are still a couple of points I couldn't understand.

My first question is: why was the Google File System (GFS) not suitable for implementing the Photo Store Server? That is, what are the advantages of Haystack over GFS for this application? The paper just mentions that serving photo requests in the long tail represents a problem for which Hadoop is not well suited. But what does this argument (which I don't understand) have to do with the unsuitability of GFS? I guess the answer is that Facebook needed to keep all metadata in memory, and there was no immediate implementation of this provided by the GFS master chunkserver. I guess if the GFS master chunkserver were modified to hold the metadata in memory, then Facebook could just as well use GFS to solve their problem. As another disadvantage of GFS, the paper cites that GFS is optimized for a workload consisting mostly of append operations (which is actually what Haystack does as well) and large sequential reads (I am not really sure why GFS cannot handle small random reads easily as well). So I don't see why GFS couldn't be adopted for use here.

My second question is about the details of replica maintenance at the Store. In the paper, replica maintenance is only outlined briefly, as follows. A photo is replicated to each of the physical volumes mapped to the assigned logical volume. The Haystack Directory provides this mapping. It seems that the replication logic is CP in terms of the CAP theorem: it sacrifices availability when one of the nodes hosting a physical volume that is to be written is unavailable. But that is only the basic operation, and there are several other corner cases, such as failure of one volume, compaction of one volume, and deletion of some photos. It is unclear how the replicas are maintained in the face of these, and how the mappings at the Haystack Directory are updated to account for them. That should require coordination between the Store and the Directory.

Comments

1) it has high latency

2) it has very sparse metadata controls.