Epoxy: ACID Transactions Across Diverse Data Stores

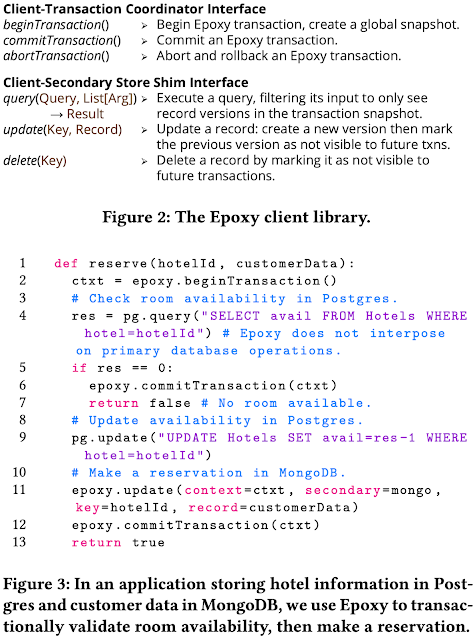

Ok, what is this Epoxy work about? Epoxy leverages the Postgres transactional database as the primary/coordinator and extends multiversion concurrency control (MVCC) for cross-data store isolation. It provides isolation as well as atomicity and durability through its optimistic concurrency control (OCC) plus two-phase commit (2PC) protocol. Epoxy was implemented as a bolt-on shim layer for five diverse data stores: Postgres, MySQL, Elasticsearch, MongoDB, and Google Cloud Storage (GCS). (I guess the authors had Google Cloud credits to use rather than AWS credits, and so the experiments were run on Google Cloud.)

Epoxy is open source at https://github.com/DBOS-project/apiary.

Motivation

The motivation for Epoxy is to provide transactional guarantees in the face of two increasingly popular trends that make this harder to achieve. Heterogeneous data: in addition to database records, large media blobs are also stored and accessed by applications. Microservices: many systems consist of multiple services, each managing its own data.

The evaluation section builds and evaluates some of these systems. Here are examples from the evaluation section.

Hotel reservation application: The room availability service stores data in Postgres. The customer reservation service stores the data in MongoDB. The workload consists of 80%: searches for available rooms, performing a read in Postgres and a geospatial search in MongoDB, and 20%: room reservations, performing a read and update in Postgres, and an insert in MongoDB. Without Epoxy, these operations fail to execute in an atomic and isolated manner, causing anomalies.

E-commerce service: The shopping carts and catalog are stored in Postgres, the catalog is replicated to Elasticsearch for quick search. The workload consists of 90%: Search and add items (Elasticsearch search and Postgres read, insert, update), 8%: Checkouts (Postgres read, delete, two inserts for cart to order transition), 1%: Catalog inserts (Postgres and Elasticsearch), and 1%: Catalog updates (Postgres and Elasticsearch). Without Epoxy, concurrent search and add, along with a catalog update, may lead to incorrect cart additions.

Social network: User profiles are stored in Postgres, and profile images are stored in GCS. The workload consists of 90%: Profile reads (reads in Postgres and GCS), 5%: Profile inserts (add profile to Postgres, upload image to GCS), and 5%: Profile updates (update profile in Postgres, replace image in GCS). Without Epoxy, this app faces fractured reads, where simultaneous read and update to a profile may result in inconsistencies between the seen profile image and its corresponding profile change.

The Epoxy protocol approach

The idea in Epoxy is to provide bolt-on transaction support, leveraging Postgres as the coordinator/primary database, and onboarding additional data stores to this setup by adding shim layers. (Note that the coordinator and the primary database are slightly different things. The coordinator is the shim above the primary database.)

What is the status quo for solving this problem? If you didn't have Epoxy, you would be writing custom glue code yourself. You would be taking a workflow-centric solution and embedding/enforcing the business application logic in your glue code. In a way (a customized way), you would be extending the OLTP transactions into the application. But that is customized and harder to reuse, and the surface area of dealing with atomicity and isolation is big, because you would be smearing this across your codebase.

As a more reusable, well abstracted solution, you can consider using a distributed transaction protocol like X/Open XA, based on two-phase commit in order to perform transactions across data stores. However, X/Open XA lacks transactional isolation, offering only atomicity. Epoxy surpasses X/Open XA by providing snapshot isolation, making it a more robust solution.

Additionally, the X/Open XA approach mandates data stores to implement the participant protocol of two-phase commit, posing compatibility issues with MongoDB, CockroachDB, and Redis. Moreover, in non-transactional data stores like S3/GCS, implementing the "prepare" step of X/Open XA is not feasible.

The Epoxy protocol: setup

Before learning about how Epoxy provides transactional guarantees across data stores, let's review Epoxy's requirements from the primary database (used as coordinator) and the secondary data stores.

The primary database must provide ACID transactions with at least snapshot isolation. This is implemented using Postgres in Epoxy. The secondary stores must ensure that:

- Single-object write operations are linearizable and durable.

- Each record has a uniquely identifiable key.

- [Optionally to improve performance] Records can include metadata, and queries in the data store can be efficiently filtered based on this metadata.

Ok, let's delve into the coordinator for the primary database, and learn about snapshot representation and record versioning.

Each Epoxy transaction is linked to a snapshot, representing the set of all past transactions visible to it. Snapshot representation utilizes two transaction IDs, xmin and xmax, along with a list of recently committed transactions, rc_txns. At the time of snapshot creation:

- xmin is the smallest active transaction ID.

- xmax is assigned to be one past the largest committed transaction ID.

- rc_txns denotes the set of committed transactions with IDs greater than xmin.

- A transaction with ID x is in a snapshot if (x < xmin) \/ (x \in rc_txns).

- Record versions are tagged with two values: beginTxn and endTxn.

- beginTxn represents the ID of the transaction creating the record version.

- endTxn is the ID of the transaction superseding it with a new version or deleting the record.
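
To make these definitions concrete, here is a minimal Python sketch of the snapshot membership test and the version visibility rule it implies. The class and function names are mine, not Epoxy's, and the visibility rule (beginTxn is in the snapshot, endTxn is not) is my reading of the definitions above rather than code from the paper.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    xmin: int                          # smallest transaction ID active at snapshot time
    xmax: int                          # one past the largest committed transaction ID
    rc_txns: frozenset = frozenset()   # committed transaction IDs greater than xmin

    def contains(self, x: float) -> bool:
        # (x < xmin) \/ (x \in rc_txns)
        return x < self.xmin or x in self.rc_txns


@dataclass
class RecordVersion:
    key: str
    value: object
    begin_txn: int
    end_txn: float = math.inf   # infinity until a later transaction supersedes or deletes it


def is_visible(version: RecordVersion, snap: Snapshot) -> bool:
    # Assumed rule: the creating transaction is in the snapshot and the
    # superseding transaction (if any) is not.
    return snap.contains(version.begin_txn) and not snap.contains(version.end_txn)
```

For example, with xmin = 5 and rc_txns = {6, 8}, a version created by transaction 3 and superseded by transaction 7 is still visible, because 7 had not committed when the snapshot was taken.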

This part is important, so make sure you follow this and corroborate your understanding with Figures 4 & 5, before you read about the Optimistic Concurrency Control (OCC) below.

The Epoxy protocol: OCC

To enforce transactional isolation, specifically snapshot isolation (SI), Epoxy adapts the multi-version optimistic concurrency control (OCC) protocol. SI is used as it aligns well with the lightweight shim model, necessitating only the checking of write-write conflicts. Ensuring serializable isolation would require efficient detection of read-write conflicts, requiring data store-specific implementation on each shim due to the necessity of understanding query semantics.

Epoxy employs a two-phase commit (2PC) protocol. The secondary stores prepare first inside their databases, and then the primary concludes the transaction commit (or the abort).

A secondary store S, when executing transaction T, acquires an exclusive lock on the record's key before writing (if the locking fails, then T is aborted). Therefore, each secondary store shim incorporates a lock manager for its records, maintaining an exclusive write lock for each record. This lock prevents concurrent modifications to the endTxn field of the previous record version.

After completing T, S validates it by taking an exclusive (local to S) validation lock. S then checks that no key written by T was also written by a committed transaction not in T's snapshot. If validation succeeds, S provisionally marks T as committed, releases the lock, and votes to commit.
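
The validation step can be sketched in a few lines of Python. This is an illustration under my own assumptions, not the shim's actual code: `SecondaryValidator`, `validate`, and the `in_snapshot` callback are hypothetical names, and the sketch treats provisionally committed transactions as committed for the purpose of conflict checks.

```python
import threading


class SecondaryValidator:
    """Per-store validation: detect write-write conflicts under a local lock."""

    def __init__(self):
        self._validation_lock = threading.Lock()   # exclusive, local to this store
        self._committed_writes = {}                # txn ID -> keys it wrote

    def validate(self, txn_id, write_set, in_snapshot):
        """Return True (vote commit) or False (vote abort) for txn_id.

        in_snapshot(x) says whether transaction x is in txn_id's snapshot.
        """
        with self._validation_lock:
            for other_id, other_keys in self._committed_writes.items():
                # Conflict: a transaction outside our snapshot wrote one of our keys.
                if not in_snapshot(other_id) and other_keys & write_set:
                    return False
            # Provisionally mark the transaction as committed.
            self._committed_writes[txn_id] = set(write_set)
            return True
```

A real shim would also need to forget a provisionally committed transaction if the primary later decides to abort it; the sketch leaves that out.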

A transaction only commits if all secondary stores successfully validate; otherwise, it aborts and rolls back. Transactions commit by performing a commit operation on the primary database. Atomic commit on the primary database ensures the transaction becomes visible to future transactions on all data stores, appearing in their snapshots. The secondary stores release write locks after learning of a commit (or after completing a rollback, if abort was decided).

If a transaction fails validation or encounters any error in any data store, it initiates an abort. To prevent indefinite hanging on client failure, the coordinator also aborts a transaction if its connection with the client times out. The abort process deletes newly added record versions and reverts record endTxn fields.
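
Putting the commit and abort paths together, the control flow looks roughly like the Python sketch below. The `validate`, `commit`, `rollback`, and `release_locks` methods are hypothetical interfaces I made up for illustration; the sketch ignores timeouts and crash recovery.

```python
def commit_transaction(txn_id, primary, secondaries):
    """Return "commit" or "abort" for txn_id (sketch of the OCC + 2PC flow)."""
    try:
        # Phase 1: every secondary validates; all() stops at the first "no" vote.
        all_yes = all(store.validate(txn_id) for store in secondaries)
    except Exception:
        all_yes = False   # any data store error counts as an abort

    decision = "abort"
    if all_yes:
        try:
            # Phase 2: the commit on the primary database is the atomic commit point.
            primary.commit(txn_id)
            decision = "commit"
        except Exception:
            decision = "abort"

    if decision == "abort":
        primary.rollback(txn_id)

    for store in secondaries:
        if decision == "abort":
            # Delete new record versions and revert endTxn fields.
            store.rollback(txn_id)
        # Write locks are released only once the outcome is known.
        store.release_locks(txn_id)
    return decision
```

Note that the secondaries never decide on their own: they vote, and then wait for the outcome of the primary's commit.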

The paper lists the following correctness invariants:

- SI1: T always reads data from a snapshot of committed information valid at the time T started.

- SI2: T can commit only if, at commit time, no committed transaction outside the snapshot has modified data intended to be written by T.

- AC1: All processes that reach a decision reach the same one.

- AC2: Once a process reaches a decision, it cannot reverse it.

- AC3&4: The Commit decision is reached only if all processes vote Yes. In the absence of failures and with unanimous Yes votes, the decision is to Commit.

- AC5: In any execution with tolerated failures (crash failures), if all failures are repaired and no new failures occur for a sufficient duration, all processes will eventually reach a decision.

If the primary/coordinator database is down, the secondary stores cannot accept any writes/updates until the primary/coordinator comes back up and recovers things. They can serve reads though. Primary/coordinator failure means abort and rollback of active transactions in secondary stores. Upon a secondary or primary failure, the goal is to get them back up, and get the secondary stores recovered to reflect committed transactions, establishing a crash-consistent state.

Limitations and Overheads

Now that we have covered the Epoxy protocol, let's investigate its limitations and overheads.

Note that Epoxy requires a single coordinator/primary. With multiple primaries, things would get convoluted and inefficient, with distributed transactions needed across primaries. In the cloud, it is possible to scale a single Postgres coordinator with AWS RDS/Aurora. For geodistribution, a virtual/single coordinator can be provided by a distributed SQL offering.

Above we already mentioned another limitation: Epoxy requires exclusive access to a secondary store table. If a client writes without using Epoxy, the lack of version information makes the write invisible to reads. Similarly, reading without Epoxy may expose conflicting versions of the same record. Adoption of Epoxy by one application on a secondary store table necessitates all other applications on that table to do the same.

In the same vein, I can point to another limitation. It is possible that the secondary shim would reject a nontransactional write, because the transactional update in the secondary takes an exclusive write lock for each key in the shim layer for the entire duration of the transaction (involving the primary and potentially other secondaries). This is in contrast to the DynamoDB transactions design, which took it as a priority that the transactions do not interfere with nontransactional updates. I guess it is also possible to abort the transactional write in favor of a nontransactional write in Epoxy. This is before promising a prepared/commit vote to the primary about it of course; at that stage, the secondary is bound to wait to hear a commit/abort from the primary.

Let's talk about the overhead of shims. The secondary store's shim, after taking an exclusive write lock on each key k involved in the transaction x, creates a new version of the updated record with beginTxn set to x and endTxn set to infinity. Next, it checks if an older version of the record exists and, if one does, it sets the endTxn field of the most recent older record version to x. Upon an abort decision, the process needs to be reverted for each k involved in the transaction. The evaluation section provides good coverage and a breakdown of the overheads involved for committed and aborted transactions. There is also an evaluation of the metadata storage overheads.
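
To see where the per-write work comes from, here is a toy in-memory version of the write and abort paths in Python. `VersionedStore` and its dict-based layout are my own stand-ins for a real data store and lock manager; only the beginTxn/endTxn handling follows the description above.

```python
import math


class VersionedStore:
    def __init__(self):
        self.versions = {}      # key -> list of version dicts, oldest first
        self.write_locks = {}   # key -> ID of the transaction holding the lock

    def write(self, x, key, value):
        # Exclusive write lock per key; failing to get it aborts the writer.
        if self.write_locks.setdefault(key, x) != x:
            raise RuntimeError(f"key {key!r} is locked, transaction {x} must abort")
        chain = self.versions.setdefault(key, [])
        older = chain[-1] if chain else None
        # New version: beginTxn = x, endTxn = infinity.
        chain.append({"value": value, "beginTxn": x, "endTxn": math.inf})
        # The most recent older version, if still live, is superseded by x.
        if older is not None and older["endTxn"] == math.inf:
            older["endTxn"] = x

    def abort(self, x):
        for key in [k for k, holder in self.write_locks.items() if holder == x]:
            chain = self.versions[key]
            # Delete versions created by x, then revert endTxn fields set by x.
            chain[:] = [v for v in chain if v["beginTxn"] != x]
            for v in chain:
                if v["endTxn"] == x:
                    v["endTxn"] = math.inf
            del self.write_locks[key]

    def commit(self, x):
        # Nothing to undo; just release the write locks held by x.
        for key in [k for k, holder in self.write_locks.items() if holder == x]:
            del self.write_locks[key]
```

Every update touches two record versions (the new one and the one it supersedes), and an abort has to touch them again, which is the non-proportional work that shows up in the overhead numbers.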

A higher overhead comes from garbage collection. Due to Epoxy's MVCC approach of creating new record versions with writes instead of updating existing records, cleaning up old versions is crucial. Record versions are deleted only if they are no longer visible to any transactions, indicated by their endTxn being in the snapshot of all active transactions. Therefore, the transaction coordinator should periodically execute garbage collection. The garbage collector scans all active transactions to identify the smallest xmin, representing the oldest active transaction. It then instructs secondary store shims to delete record versions with endTxn less than this smallest active xmin.
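
The garbage collection rule is simple enough to sketch in Python. The function name and the dict-of-lists layout are hypothetical, and skipping the pass when there are no active transactions is my own conservative assumption, not something the paper specifies.

```python
import math


def garbage_collect(versions_by_key, active_xmins):
    """Delete versions whose endTxn is below the smallest active xmin.

    versions_by_key maps key -> list of {"beginTxn", "endTxn"} dicts;
    live versions carry endTxn = math.inf. Returns the number deleted.
    """
    horizon = min(active_xmins, default=None)
    if horizon is None:
        return 0   # assumption: with no active transactions, skip this pass
    deleted = 0
    for chain in versions_by_key.values():
        kept = [v for v in chain if v["endTxn"] >= horizon]
        deleted += len(chain) - len(kept)
        chain[:] = kept
    return deleted
```

With active xmin values 7 and 12, a version with endTxn = 4 is deleted, while a version with endTxn = 9 is kept because some active transaction may still see it.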

Implementation

They implement one transaction coordinator, on Postgres, and four secondary store shims, on Elasticsearch, MongoDB, Google Cloud Storage, and MySQL. Each shim is implemented in under 1K lines of Java code.

Postgres is used as the primary database with a configured isolation level of repeatable read (implemented as SI). Postgres utilizes MVCC, optimizing snapshot creation through its system tables. The transaction snapshot is represented with xmin, xmax, and xip_list (a list of active transactions at the snapshot time). Handling aborted transactions is a challenge; aborted transactions remain active until rolled back in both the primary database and secondary stores. The transaction coordinator tracks active or rolling back transactions, excluding them from xip_list during snapshot creation. Here is the visibility formula with this setup.
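
Going by how Postgres documents its snapshot format, the rule is presumably the following: a transaction x is in the snapshot if x < xmin, or if x < xmax and x is not in xip_list. The Python sketch below is my reconstruction from the Postgres semantics, not the paper's exact formula, and it ignores the extra tracking of rolling-back transactions. It parses the textual `xmin:xmax:xip_list` form that Postgres uses for snapshots.

```python
def parse_pg_snapshot(text):
    """Parse a Postgres snapshot string such as "10:20:10,14,15"."""
    xmin_s, xmax_s, xip_s = text.split(":")
    xip_list = frozenset(int(t) for t in xip_s.split(",") if t)
    return int(xmin_s), int(xmax_s), xip_list


def in_snapshot(x, xmin, xmax, xip_list):
    # Everything below xmin has finished; between xmin and xmax a transaction
    # counts unless it was still in progress; from xmax on nothing is visible.
    return x < xmin or (x < xmax and x not in xip_list)
```

For the snapshot `10:20:10,14,15`, transactions 9 and 11 are in the snapshot, while 10, 14, 15, and anything from 20 upward are not.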

Let's consider a secondary store implementation, through the MongoDB shim they implemented. MongoDB features a schemaless document-oriented data format with support for indexes. Similar to the Elasticsearch shim, the MongoDB shim incorporates beginTxn and endTxn fields in all documents. The shim interposes on queries by introducing operators. These operators filter input collections and ensure queries only access record versions present in the transaction snapshot. MongoDB's native support for B-trees is leveraged for enhanced performance through indexing (and accessing) of beginTxn and endTxn using B-trees.

Let's consider the Google Cloud Storage (GCS) shim. A similar approach would also apply to AWS S3 or Azure Blob Storage. GCS offers a key-value interface for durably storing large blobs: each blob is linked to a unique key. However, GCS lacks metadata filtering. To compensate for this, the shim interposes on GCS write operations by creating a primary database record with key, beginTxn, and endTxn. It appends beginTxn to the GCS key and stores both key and value in GCS. Efficient management of metadata operations in GCS read operations is achieved by checking the primary database first to find the appropriate key version before accessing the key. Each read involves only one key, necessitating a single primary database lookup overhead.
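
Here is a small Python sketch of that GCS scheme, with a list standing in for the primary database table and a dict standing in for the bucket. The `BlobShim` class, the `@` separator in the versioned object name, and the `in_snapshot` callback are all my own assumptions for illustration; the real shim talks to Postgres and the GCS API.

```python
import math


class BlobShim:
    def __init__(self):
        self.metadata = []   # stand-in for the primary database table
        self.blobs = {}      # stand-in for the GCS bucket

    @staticmethod
    def versioned_name(key, begin_txn):
        # beginTxn is appended to the key; the "@" separator is hypothetical.
        return f"{key}@{begin_txn}"

    def write(self, x, key, data):
        # Supersede the live version (if any) in the primary's metadata.
        for row in self.metadata:
            if row["key"] == key and row["endTxn"] == math.inf:
                row["endTxn"] = x
        self.metadata.append({"key": key, "beginTxn": x, "endTxn": math.inf})
        self.blobs[self.versioned_name(key, x)] = data

    def read(self, key, in_snapshot):
        # One metadata lookup finds the visible version, then one blob fetch.
        for row in self.metadata:
            if (row["key"] == key
                    and in_snapshot(row["beginTxn"])
                    and not in_snapshot(row["endTxn"])):
                return self.blobs[self.versioned_name(key, row["beginTxn"])]
        return None
```

Because the old blob stays in the bucket under its own versioned name, a reader with an older snapshot keeps seeing the old image while a newer transaction replaces it.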

Evaluation

They run on Google Cloud using c2-standard-8 VM instances with 8 vCPUs, 32GB DRAM, and a SCSI HDD. In experiments involving multiple data stores, they run each data store in single-node mode on its own server (except GCS, which is accessed through its cloud API).

The evaluation is extensive, as you can read below, after my takeaways. My main takeaways are as follows.

Due to the use of caching in the secondary shims, the read overhead is reduced. But this, of course, does not work for writes, so the write overhead is high. Epoxy adds <10% overhead compared to a non-transactional baseline on read-mostly workloads, and 72% on write-heavy workloads.

The read cache may be a metastability issue. Also, there are other metastability flags in Epoxy involving non-proportional work done per request. Transaction aborts are costly, so when write-conflict rates go up, it is possible to go into a metastable mode. A transaction abort in the primary database triggers rollback of all changes in the secondary stores, deletion of newly added record versions, and reverting of record endTxn fields across the stores. The primary and secondary store failures also incur significant load on the system for recovery, and could cause metastability issues. The evaluation does not cover these issues.

I think the choice of the primary database is crucial. Users have the ability to directly query and update the primary database without utilizing the shim. On the other hand, for a secondary store, everything needs to go through the shim.

Multi-DBMS TPC-C Workload with Epoxy: This runs a 1:1 mixture of NewOrder and Payment transactions and observes p50 and p99 latency with varying offered load. Epoxy provides 7% higher throughput than XA with comparable latency. Epoxy incurs 53% space overhead due to maintaining indexed version columns in each table. (That is significant because each row is otherwise small in size.) Both Epoxy and XA exhibit substantial overhead (82–95%) compared to the no-transactions baseline.

Epoxy spends 11-24% more time than XA in executing transaction business logic due to versioning metadata and index structures. XA incurs expensive prepare and commit phases with multiple rounds of communication, following the participant protocol of 2PC. XA prepare takes 39-83% longer than a MySQL commit (used by Epoxy for making MySQL data durable before committing). XA commit takes 2.8-3.2× longer than an Epoxy commit.

Microbenchmark Analysis: These provide a performance breakdown specifically for inserts and updates in MongoDB. Insert overhead is mainly attributed to the verification process ensuring no record already exists with the key intended for insertion. Update overhead arises from updating the endTxn field of the most recent older record version.

Write-conflict impact on performance: This experiment stored varying key-value pairs in both Postgres and MongoDB. The workload is 50% reads, 50% updates. Reads and updates target the same uniformly random key in both systems. Variation in the size of the key space is used for altering the frequency of write conflicts. With 100K keys, conflicts are near zero and Epoxy is 1.5× slower than the baseline. With 100 keys, 78.8% of Epoxy transactions and 10.7% of baseline Postgres transactions abort due to write conflicts and Epoxy is 1.9× slower than the baseline. With 10 keys, 98.8% of Epoxy transactions and 57.4% of baseline Postgres transactions abort, and Epoxy is 3× slower than the baseline.

Storage overhead measurement: For a MongoDB document that contains 10 integers, 1 randomized ten-character string, and 1 unique string key, Epoxy adds storage overhead of ~18 bytes per document, taking the document from 118 bytes to 136 bytes. This is because Epoxy introduces two long fields (beginTxn and endTxn) to each document and creates an index on beginTxn.
