Designing Distributed Systems Using Approximate Synchrony in Data Center Networks

This paper is from Dan R. K. Ports Jialin Li Vincent Liu Naveen Kr. Sharma Arvind Krishnamurthy (University of Washington) and appeared in NSDI 2015.

The previous week, in our Zoom Reading Group, we had read the "Scalable State Machine Replication" paper in our group. SSMR built on top of a "slow" atomic multicast (implemented with Multi-Ring Paxos) and imposed a total order for all messages before SMR.

This paper introduces SpecPaxos (Speculative Paxos), which also tries to impose an ordering of requests messages before SMR processing. SpecPaxos builds on a carefully constructed network to have most messages ordered correctly to start with and provides a way to deal with messages reorderings as needed. The relaxing of the ordering requirement allows Speculative Paxos to achieve good (average) latency and high throughput, and escape the heavy cost of atomic multicast implementation. (To be fair, SpecPaxos doesn't consider the problem of partitioning SMR and still providing strict serializability over transactions on them like SSMR paper did.)

MOMs against out of order delivery

SpecPaxos introduces a Mostly-Ordered Multicast primitive (MOM), which provides a best-effort guarantee that all receivers will receive messages from different senders in a consistent order. MOMs leverage the structured topology of a data center network and the forwarding flexibility provided by software-defined networking. There are three increasingly costly/high-maintenance way of achieving ordering at the network.

- Topology-aware multicast: Ensure that all multicast messages traverse the same number of links. This eliminates reordering due to path dilation.

- High-priority multicast: Use topology-aware multi- cast, but also assign high QoS priorities to multicasts. This essentially eliminates drops due to congestion, and also reduces reordering due to queuing delays.

- In-network serialization: Use high-priority multicast, but route all packets through a single root switch. This eliminates all remaining non-failure related reordering.

The common intuition behind all these designs is that messages can be sent along predictable paths through the data center network topology with low latency and high reliability in the common case.

SpecPaxos uses the first two approaches. In a datacenter setup, it sets up some root switches and partitions keyspaces among them. To achieve topology-aware multicast, it makes all client requests to go up to the root switch and starts the multicasts from there. (Setting up the root nodes with this consistent partitioning at the root switches is a trusted consensus base to bootstrap the rest of the protocol. Modification of that for load balancing or failures would require care as well.)

Dan Ports, the first author of the paper, published a follow-up work on SpecPaxos the next year at OSDI 2016 called NOPaxos (https://github.com/UWSysLab/NOPaxos). NOPaxos (network ordered Paxos) puts an ordering mechanism in the network hardware instead of network design, making it an overall more predictable and robust system. So instead of worrying too much about the shortcomings of MOM ordering, it is better to check the NOPaxos paper.

SpecPaxos algorithm

SpecPaxos borrows heavily from Fast Paxos. In Fast Paxos, the clients talk directly to all the acceptors/replicas, shaving off a network hop since they don't go through the leader to contact the acceptors. The leader, however, still performs a vital job and receives phase-2b messages from the acceptors to figure out if the decision has been reached in the fast quorum and performs recovery (conflict resolution) as needed. The leader is responsible for responding back to the client. The price paid is that 3f + 1 acceptors are necessary instead of 2f + 1.

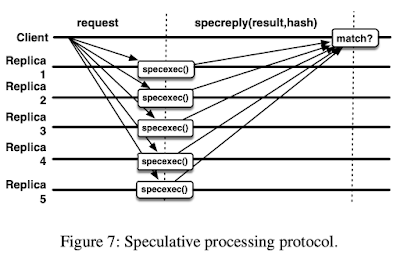

The SpecPaxos algorithm is similar to Fast Paxos, but boosts it up a notch. The client talks to the acceptors/replicas, the acceptors respond directly to the client and if the client sees a fast quorum it considers the operation as committed. This is the speculative part, where we short-circuit the leader. The leader still gets involved, but only in batches to do background synchronization as I explain below.

You see, there are actually three parts/modules to SpecPaxos:

- Speculative processing (as described above) commits requests efficiently in the normal case where messages are ordered and < f/2 replicas have failed

- Synchronization performed by the leader periodically verifies that the replicas have speculatively executed the same requests in the same order

- Reconciliation (invoked only when synchronization fails) ensures progress when requests are delivered out of order or when between f/2 and f nodes have failed

It is this last one, the reconciliation, that is tricky. Dan Ports joined our paper discussion. It was fun to learn from him behind the curtain story of the development. He said that they considered using 3f+1 replicas to keep the reconciliation simpler. But dialogues with database people showed that, the database people were OK with one replication (f+1), they disliked two replication (2f+1), and they detested the 3f+1 replication. So that is why the group had to go with a costly and sophisticated reconciliation protocol and 2f+1 nodes, rather than using 3f+1 nodes to tolerate f faults.

SpecPaxos used the reconciliation protocol of viewstamped replication to start off with, but Dan said later they discovered a very subtle bug in the viewstamped replication's reconciliation protocol. This tells you how tricky recovery can get with "leaderless" protocols. Implementing SMR based on leaderless protocols is a hard undertaking itself, and it gets even more complicated due to the complexity of recovery in leaderless protocols.

Evaluation

SpecPaxos gives low latency and high throughput, and these are very good numbers. SpecPaxos also takes the leader out of the critical path of consensus, and moves it to the background synchronization task, and this helps reduce the incast bottleneck problem at the leader in classical MultiPaxos.

But we also talked about how important performance predictability and tail latency is. SpecPaxos doesn't have that, as the performance is very sensitive to the network behavior. Network "flukes" can cause ordering issues requiring an expensive reconciliation step, creating temporary slow-downs, and variations in latency. Hence it is possible to have a rather bad tail latency and overall less predictable performance.

Going back to the MOMs, another important point in our discussion was the applicability to the wide-area networks. After all, shaving off of one network hop will have the greatest impact when the network latency is large, which happens in WANs. The problem here, of course, is that WANs are inherently non-uniform in their latency between nodes due to physical distances and lack of control over the entire network between nodes, making it way more likely to have messages received in a different order in different regions. This means that MOMs and SpecPaxos won't work well in the environments that could have benefited from this the most. But yeah, life ain't easy.

Here is the YouTube video of the presentation of the SpecPaxos, if you like to watch.

This blog post is jointly written with Aleksey Charapko, who is now an Assistant Professor at the University of New Hampshire.

Discussion

There seems to be a high-level architectural decision we can make.

- Order the requests first. Scalable SMR is an example of the order first approach. But this can backfire, as the ordering could create dependencies which delay the dependent messages until the delivery of the earlier messages is completed, and this can cascade, like in virtual synchrony solutions. NoPaxos uses switch hardware to alleviate some of these concerns.

- Best effort ordering first. SpecPaxos is an example of order-first best effort. We can even consider EPaxos like leaderless solutions under this, because they optimistically assume no conflicts, and deal with conflicts via a recovery protocol.

- Central ordering at the leader. Paxos, MultiPaxos, multi-leader versions of Paxos such as WPaxos orders centrally at the leader or leaders (if considering partitioned keyspace) first.

- Central lazy ordering at the leader. S-Paxos and SDPaxos order centrally at the sequential after replication

Are there any unexplored options? How about lazy ordering at the leader(s)?

Comments