Matchmaker Paxos: A Reconfigurable Consensus Protocol

How does MM compare with previous reconfiguration ideas?

Similar to Vertical Paxos, MM Paxos allows every round, not just every consensus instance/slot, to have a different configuration of acceptors. Round 0 of a consensus instance may use some configuration C0, while round 1 of the same consensus instance may use some completely different configuration C1. MM is a realization/implementation of Vertical Paxos with a more integrated deployment to the Paxos protocol. Vertical Paxos requires an external master, which is itself implemented using state machine replication. The matchmakers in MM are analogous to that external master/Paxos-box and show that such a reconfiguration does not require a nested invocation of state machine replication.Vanilla MultiPaxos and Raft use log-based approach to reconfiguration. Pipelining of Paxos commands becomes a challenge for this approach. Paxos solves this by limiting the length of the pipeline window to α > 0 and only activating the new config Cnew chosen at slot i after slot i + α. Depending on the value of α, this approach either limits throughput or latency of the system. On the other hand, Raft does not impose any limitation of concurrency and proposes two solutions. The first solution is to restrict the reconfiguration operation, i.e. what can be reconfigured. For example, if each operation only adds one node or removes one node, a sequence of these operations can be scheduled to achieve arbitrary changes. The second solution is to change configuration in two phases: a union of both old and new configuration C+Cnew is proposed in the log first, and committed by the quorums combined. Only after the commit, the leader may propose the new config Cnew. During the two phases, any election or command proposal should be committed by quorum in both C and Cnew. To ensure safety during reconfiguration, all these solutions essentially prevent two configurations C and Cnew to make decision at the same time that leads to divergent system states. (This paragraph explaining Paxos/Raft reconfiguration is requoted from our WPaxos paper.)

Unlike those MM (as well as in Vertical Paxos) does not require a log and is applicable to CASPaxos and ePaxos.

MM reconfiguration

The paper emphasizes that MM reconfigures across rounds, not commands. Replication protocols based on classical MultiPaxos assume a totally ordered log of chosen commands, and reconfigure across log entries: each log entry is handled by a single configuration. MM Paxos instead works across rounds of consensus, as proposed by Vertical Paxos. Different Paxos rounds--even for the same command-- can use different configurations. Every round is statically assigned to a single proposer and that proposer selects a single configuration for that round. A higher proposer overrides a lower proposer. (You can recognize these ideas from Heidi's generalized consensus work.)

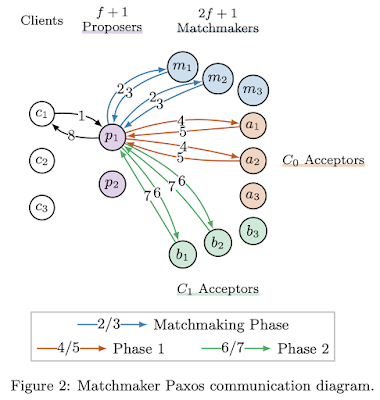

Since every round uses a different configuration of acceptors, a Matchmaker Paxos proposer in the unoptimized case has to contact all of the configurations used in rounds less than i. (We will discuss garbage collection later.) When a proposer begins executing round i, it selects a configuration Ci. It sends the configuration Ci to the matchmakers, and the matchmakers reply with the configurations used in previous rounds. This is called the Matchmaking phase. The proposer then executes Phase 1 of Paxos with the acceptors in these prior configurations, and then executes Phase 2 with the acceptors in configuration Ci, as illustrated in Figure 2.

Matchmaking MultiPaxos

Matchmaker MultiPaxos runs the Matchmaking phase and Phase 1 only during a leader change. The stable leader only records configuration without doing phase1 clearing, and can still safely change configurations. A stable leader doesn't need matchmaking for learning the previous configurations, it just needs to store the new configuration to the matchmakers.The stable leader can easily change configurations by just writing to quorum of matchmakers (as in vertical paxos). There is no need to do phase1 clearing with the acceptors from old configurations because you are the same stable leader all along.

Retiring old configurations

If we prematurely shut down the acceptors in Cj, then proposer p will get stuck in Phase 1, waiting for Phase1B messages from a quorum of nodes that have been shut down. Therefore, we cannot shut down the acceptors in a configuration Cj until we are sure that the matchmakers will never again return Cj during the Matchmaking phase.

There are three situations in which it is safe for a proposer pi in round i to issue a GarbageA⟨i⟩ command.

- If the proposer pi gets a value x chosen in round i,

- If the proposer pi executes Phase 1 in round i and finds that no value has been or will be chosen in any round less than i,

- If the proposer pi learns that a value x has already been chosen and has been stored on f + 1 other machines ---then the proposer can safely issue a GarbageA⟨i⟩ command after it informs a Phase 2 quorum of acceptors in Ci of this fact.

Reconfiguring matchmakers

Ok, that is cool. Thanks to the matchmaker, we make configuration changes and garbage collection old configurations super easy. But maybe you are wondering: we just punted the reconfiguration problem by introducing these matchmakers as a level of indirection (which is itself a contribution), but what about the reconfiguration of the matchmaker nodes themselves?

We can shut down the old matchmakers and replace them with new ones by making sure that the new matchmakers’ initial state is the same as the old matchmakers’ final state. This is not efficient, but it is OK, because this happens rare and can be pipelined with normal execution. The paper discusses how to do this.

Comments