Gryff: Unifying consensus and shared registers

This paper is by Matthew Burke, Audrey Cheng, and Wyatt Lloyd, and appeared in NSDI'20. Here is a link to the paper, slides, and video presentation.

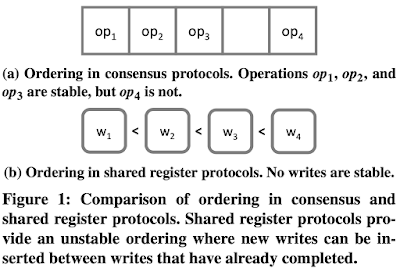

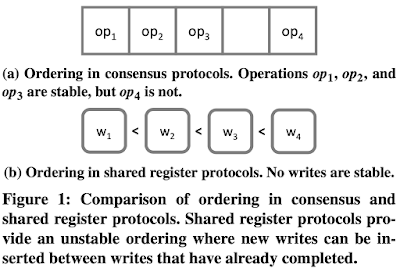

Straight talk (from the middle of the book)

- The model of the paper is a great contribution. Stable versus Unstable ordering is a good framework to think in. Carstamps (consensus after registers) logical clock timestamping is a good way to realize this ordering. I think carstamps will see good adoption, as it is clear, concrete, and useful.

- Constructing a hybrid of EPaxos and ABD is a novel idea.

- The performance of Gryff is not good. A straightforward distributed key-value sharded implementation of Paxos would do a better job. I think Hermes is a better choice than Gryff with read-write and read-modify-write operations.

Introduction

Recently we have seen a lot of interest in unifying consensus and shared registers, the topic of this paper. I think this is because of the popularity of distributed key-value stores. While consensus is often used for implementing distributed key-value stores, it is a bit of an overkill. You don't need to know the previous value of a key if you are overwriting it with a write operation: the new value is not a function of the old value. As Gryff says in the abstract: "Consensus is inefficient for workloads composed mostly of reads and writes". ABD is good enough for that, and it even gives you linearizability in the face of concurrent asynchronous execution with crash faults. However, ABD for shared registers is too weak to implement stronger synchronization primitives. You would still need to use the consensus gun for the occasional read-modify-write operation.

So this led many people to think about providing read/write operations and read-modify-write operations separately for implementing distributed key-value systems. Hermes (ASPLOS'20), which I reviewed earlier, is an example of this. Fine-Grained Replicated State Machines (NSDI'20), which we will discuss in an upcoming Zoom DistSys reading group, also looks at a related problem.

Consensus vs. Shared Registers

A big contribution of the paper is the framework it introduces for thinking about read/write operations and read-modify-write operations.

Applying commands in the same order on all replicas requires an ordering mechanism that is stable, i.e., a replica knows when a command's position is fixed and it will never receive an earlier command. In asynchronous systems where processes can fail, consensus protocols are used to agree on this stable ordering.

Shared register protocols provide a linearizable ordering of operations. That ordering does not have to be stable, however, because each write operation fully defines the state of the object. Thus, a replica can safely apply a write w4 even if it does not know about earlier writes. If an earlier write w3 ever does arrive, the replica simply ignores that write because it already has the resulting state from applying w3 and then w4. Figure 1b shows shared register ordering where there is a total order of all writes (denoted by <) without stability.
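To make the "unstable ordering" point concrete, here is a minimal sketch of a register replica that applies writes in whatever order they arrive. The `RegisterReplica` class and the `(timestamp, writer id)` tag layout are my own illustrative assumptions, not code from the paper.

```python
from dataclasses import dataclass
from typing import Any, Tuple

# Assumed tag layout: (logical timestamp, writer id), compared lexicographically.
Tag = Tuple[int, int]


@dataclass
class RegisterReplica:
    tag: Tag = (0, 0)
    value: Any = None

    def apply_write(self, tag: Tag, value: Any) -> bool:
        """Apply a write only if it is newer than what the replica holds."""
        if tag > self.tag:
            self.tag, self.value = tag, value
            return True
        # Stale write: the current state already reflects a later write.
        return False


replica = RegisterReplica()
applied_w4 = replica.apply_write((4, 1), "w4")  # w4 arrives first, applied at once
applied_w3 = replica.apply_write((3, 2), "w3")  # w3 arrives late, ignored
```

The replica never has to wait to learn whether some earlier write exists, which is exactly what a state machine with stable ordering cannot do.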

A shared object in Gryff exposes the following interface:

- READ(): returns the value of the object

- WRITE(v): updates the value of the object to v

- RMW(f(·)): atomically reads the value v, updates value to f(v), and returns v
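As a reference for the intended semantics only, the interface above can be sketched as a single-copy, in-memory object. The `SharedObject` class is hypothetical; it says nothing about how Gryff replicates the object, only what a linearizable implementation should look like to a client.

```python
import threading
from typing import Any, Callable


class SharedObject:
    """Single-copy reference semantics for READ, WRITE, and RMW."""

    def __init__(self, initial: Any = None) -> None:
        self._value = initial
        self._lock = threading.Lock()

    def read(self) -> Any:
        with self._lock:
            return self._value

    def write(self, v: Any) -> None:
        # The new value does not depend on the old one.
        with self._lock:
            self._value = v

    def rmw(self, f: Callable[[Any], Any]) -> Any:
        # Read the old value, install f(old), and return old, all atomically.
        with self._lock:
            old = self._value
            self._value = f(old)
            return old


counter = SharedObject(0)
counter.write(5)
previous = counter.rmw(lambda v: v + 1)
```

Note that only `rmw` computes the new state from the old one, which is why it is the only operation that needs a stable order.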

Carstamps for correct ordering

The paper says that the AQS (active quorum system) protocol [2010], which tried to unify consensus and shared registers, has a subtle bug that allows a rmw to be misordered. The paper argues that, to simplify reasoning about correctness, it is best to enforce the interaction at a deeper level, in the ordering mechanism, by imposing a structural order in the timestamps.

To leverage this insight, the paper introduces consensus-after-register timestamps, or carstamps. Carstamps allow writes and rmws to concurrently modify the same state without serializing through a leader or incurring additional round trips. Reads use carstamps to determine consistent values without interposing on concurrent updates.
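Here is a small sketch of how I picture carstamps, assuming the layout is a triple `(ts, cid, rmwc)` compared lexicographically: a write picks a larger `ts` and resets `rmwc` to 0, while a rmw keeps the `(ts, cid)` of its base update and bumps `rmwc`. The field names other than `rmwc` and both helper functions are my own shorthand, so check them against Algorithm 1 in the paper.

```python
from typing import NamedTuple


class Carstamp(NamedTuple):
    ts: int    # register-style logical timestamp, advanced by writes
    cid: int   # id of the writer, breaks ties between concurrent writes
    rmwc: int  # number of rmws applied on top of the base write


def next_write_carstamp(max_seen: Carstamp, writer_id: int) -> Carstamp:
    """A write orders itself after everything seen and resets rmwc to 0."""
    return Carstamp(max_seen.ts + 1, writer_id, 0)


def next_rmw_carstamp(base: Carstamp) -> Carstamp:
    """A rmw orders itself right after its base update."""
    return base._replace(rmwc=base.rmwc + 1)


w1 = Carstamp(3, 1, 0)
r1 = next_rmw_carstamp(w1)       # Carstamp(3, 1, 1)
r2 = next_rmw_carstamp(r1)       # Carstamp(3, 1, 2)
w2 = next_write_carstamp(r2, 2)  # Carstamp(4, 2, 0)
ordered = w1 < r1 < r2 < w2      # True: tuples compare lexicographically
```

The point of the structure is that a rmw slots in between its base write and any later write, so the consensus ordering sits after the register ordering within each timestamp.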

Gryff Protocol

The name Gryff stands for Griffin, a hybrid between lion and eagle, as an attribution for the protocol being a hybrid between EPaxos and ABD.

The only difference in read-write in Gryff from multi-writer ABD is that replicas maintain a carstamp associated with the current value of the shared object instead of a tag so that rmws are properly ordered with respect to reads and writes.

- Write. The rmwc field is reset to 0 (Line 15 of Algorithm 1).

- Reads. "We make the same observation as Georgiou et al. [26] that the second phase in the read protocol of multi-writer ABD is redundant when a quorum already store the value and associated carstamp chosen in the first phase."
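The read-side optimization can be sketched as follows. This is a sequential, in-memory mock under my own assumptions: the `Replica` class and `coordinate_read` are hypothetical names, messages are plain method calls, and the write-back goes to the same quorum that was read.

```python
from typing import Any, List, Tuple

Carstamp = Tuple[int, int, int]  # assumed layout: (ts, cid, rmwc)


class Replica:
    def __init__(self, cs: Carstamp = (0, 0, 0), value: Any = None) -> None:
        self.cs, self.value = cs, value

    def read1(self) -> Tuple[Carstamp, Any]:
        return self.cs, self.value

    def apply(self, cs: Carstamp, value: Any) -> None:
        # Keep only the value with the largest carstamp.
        if cs > self.cs:
            self.cs, self.value = cs, value


def coordinate_read(quorum: List[Replica]) -> Tuple[Any, int]:
    """Return (value, number of round trips used)."""
    replies = [r.read1() for r in quorum]                  # phase 1
    cs, value = max(replies, key=lambda reply: reply[0])
    if all(reply_cs == cs for reply_cs, _ in replies):
        return value, 1        # a quorum already stores it, skip phase 2
    for r in quorum:                                       # phase 2: write back
        r.apply(cs, value)
    return value, 2


agreeing = [Replica((2, 1, 0), "b"), Replica((2, 1, 0), "b")]
fast = coordinate_read(agreeing)   # ("b", 1)
lagging = [Replica((2, 1, 0), "b"), Replica((1, 1, 0), "a")]
slow = coordinate_read(lagging)    # ("b", 2)
```

Skipping the write-back is safe in the first case because any later read quorum must intersect the quorum that already stores the chosen carstamp.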

EPaxos is a consensus protocol that provides optimal commit latency in the wide-area. It has three phases in failure-free executions: PreAccept, Accept, and Commit. If a command commits on the fast path (i.e., if the coordinator receives a fast/supermajority quorum of responses that all contain the same dependencies), the coordinator returns to the client after the PreAccept phase and skips the Accept phase (where it builds the final dependencies for the command by taking the union of all the dependencies that it received in the PreAccept phase). Otherwise, the command commits on the slow path after the Accept phase. Commands that do not read state complete at the beginning of the Commit phase; commands that do read state complete after a single replica, typically the coordinator, executes the command to obtain the returned state. The purpose of the PreAccept and Accept phases is to establish the dependencies for a command, or the set of commands that must be executed before the current command. The purpose of the Commit phase is for the coordinator to notify the other replicas of the agreed-upon dependencies.

Gryff makes three high-level modifications to EPaxos to unify its stable ordering with the unstable ordering of the multi-writer ABD read and write protocol.

- A base update attribute, base, is decided by the replicas during the same process that establishes the dependencies and the approximate sequence number for a rmw.

- A rmw completes after a quorum execute it.

- When a rmw executes, it chooses its base update from between its base attribute and the result of the previously executed rmw prev. The result of the executed rmw is applied to the value and carstamp of the executing replica.
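The third modification can be sketched like this, assuming (my reading, not a quote from the paper) that "chooses between" means taking whichever of `base` and `prev` has the larger carstamp, and that the result carries the chosen carstamp with `rmwc` incremented. The `Update` type and `execute_rmw` are hypothetical names.

```python
from typing import Any, Callable, NamedTuple, Optional


class Carstamp(NamedTuple):
    ts: int
    cid: int
    rmwc: int


class Update(NamedTuple):
    cs: Carstamp
    value: Any


def execute_rmw(f: Callable[[Any], Any], base: Update,
                prev: Optional[Update]) -> Update:
    """Compute the result of a rmw from its base attribute and prev."""
    # Assumption: build on whichever candidate is later in carstamp order.
    chosen = base if prev is None or base.cs >= prev.cs else prev
    new_cs = chosen.cs._replace(rmwc=chosen.cs.rmwc + 1)
    return Update(new_cs, f(chosen.value))


def increment(v: int) -> int:
    return v + 1


# prev is ahead of base: the rmw chains onto the previously executed rmw.
chained = execute_rmw(increment,
                      base=Update(Carstamp(3, 1, 0), 10),
                      prev=Update(Carstamp(3, 1, 1), 11))
# base is a newer write: the rmw builds on that write instead.
rebased = execute_rmw(increment,
                      base=Update(Carstamp(4, 2, 0), 20),
                      prev=Update(Carstamp(3, 1, 2), 12))
```

The executing replica would then apply the returned update to its own value and carstamp using the same "larger carstamp wins" rule as for writes.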

In sum, Gryff makes reads more efficient by performing them with ABD (with the above-mentioned Georgiou optimization) instead of EPaxos, where a supermajority quorum would be needed. While Gryff uses two-round ABD writes, I think that may also be reduced to a one-round write by optimistically guessing a high enough timestamp to propose (for example, using HLC timestamps), and falling back to learning the higher timestamp and completing in two rounds if the guess fails.

On the other hand, I am starting to like the invalidation idea in Hermes more. In contrast to ABD used in Gryff, Hermes allows linearizable reads while writes are ongoing, and local reads at that.

In Hermes, a coordinator node issues a write to a key only if it is in the Valid state; otherwise the write is stalled. This doesn't seem to be necessary for safety, because the higher timestamped writes will preempt the lower timestamped writes. So why does Hermes do this? I think they do this because it lets replicas see writes conclude, even when there is a deluge of writes to the same key. This may in turn help alleviate read starvation due to a constant flood of writes to the same key. I found this in the Slack channel for ASPLOS'20 from the first author:

It is safe for a read that initially found the object invalidated with version timestamp 2 and then subsequently invalidated with a version timestamp 3 to get serialized and return the version 2 value. Intuitively this is partly safe because a write with version 3 could not have started unless the write with version 2 has been committed.

Proxying reads

The base Gryff read protocol provides reads with single round-trip time latency from the coordinator to the nearest quorum including itself (1 RTT) when there are no concurrent updates. Otherwise, reads have at most 2 RTT latency. The paper discusses how read latency can be further improved in deployments across wide area networks.

Because the round-trip time to the replica that is colocated with a client process is negligible relative to the interreplica latency, replicas can coordinate reads for their colocated clients and utilize their local state in the read coordinator protocol to terminate after 1 RTT more often. When using this optimization, we say that the coordinating replica is a proxy for the client process's read.

- Propagating Extra Data in Read Phase 1. The proxy includes in the Read1 (i.e., read-phase1) messages its current value v and carstamp cs. Upon receiving a Read1 message with this additional information, a replica applies the value and carstamp before returning its current value and carstamp. This has the effect of ensuring every replica that receives the Read1 messages will have a carstamp (and associated value) at least as large as the carstamp at the proxy when the read was invoked.

- Updating the Proxy’s Data. The proxy also applies the values and carstamps that it receives in Read1Reply messages as it receives them and before it makes the decision of whether or not to complete the read after the first phase. If every reply contains the same carstamp, then the read completes after 1 RTT even if the carstamp at the proxy when the read was invoked is smaller than the carstamp contained in every reply.
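The two proxy optimizations can be sketched together. As with any mock, this is only an approximation under my own assumptions: `Replica` and `proxied_read` are hypothetical names, `others` stands for the rest of the proxy's nearest quorum, and replies are processed sequentially rather than concurrently.

```python
from typing import Any, List, Tuple

Carstamp = Tuple[int, int, int]  # assumed layout: (ts, cid, rmwc)


class Replica:
    def __init__(self, cs: Carstamp = (0, 0, 0), value: Any = None) -> None:
        self.cs, self.value = cs, value

    def read1(self) -> Tuple[Carstamp, Any]:
        return self.cs, self.value

    def apply(self, cs: Carstamp, value: Any) -> None:
        if cs > self.cs:
            self.cs, self.value = cs, value


def proxied_read(proxy: Replica, others: List[Replica]) -> Tuple[Any, int]:
    """Return (value, round trips) for a read coordinated by the proxy."""
    sent_cs, sent_value = proxy.read1()     # piggybacked on Read1
    replies = []
    for r in others:
        r.apply(sent_cs, sent_value)        # replica catches up to the proxy
        reply = r.read1()
        proxy.apply(*reply)                 # proxy catches up to the reply
        replies.append(reply)
    replies.append(proxy.read1())           # proxy's own, now updated, state
    cs, value = max(replies, key=lambda reply: reply[0])
    if all(reply_cs == cs for reply_cs, _ in replies):
        return value, 1
    for r in others + [proxy]:              # fall back to the write-back phase
        r.apply(cs, value)
    return value, 2


ahead = proxied_read(Replica((2, 1, 0), "new"), [Replica((1, 1, 0), "old")])
behind = proxied_read(Replica((1, 1, 0), "old"), [Replica((2, 1, 0), "new")])
```

Both calls finish in one round trip, whereas the unoptimized read would need two in each case because the two replicas start with different carstamps.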

Evaluation

Gryff is implemented in the same framework as EPaxos and MultiPaxos and its performance is evaluated in a geo-replicated setting. The evaluation shows that, for workloads with moderate contention, Gryff reduces p99 read latency to ∼56% of EPaxos, but has ∼2x higher write latency. This tradeoff allows Gryff to reduce service-level p50 latency to ∼60% of EPaxos for large-scale web applications whose requests fan-out into many storage-level requests.

My big problem with the evaluation is that it doesn't use leader-leases optimization in MultiPaxos to allow serving of reads locally at the leader. This standard optimization would likely lead to MultiPaxos giving the best result for read latencies in the evaluations.

Another thing missing in the evaluation is a comparison with Cassandra. Cassandra implements read/write registers via ABD-like algorithm and can give linearizability if you configure the quorums accordingly. Cassandra also has CASPaxos for compare-and-set for conditional write, which can be used to implement read-modify-write.

The evaluation shows less blocking for rmws in Gryff. Gryff achieves 2 RTT rmws when there are no conflicts and 3 RTT when there are. While Gryff must still block the execution of rmws until all dependencies have been received and executed, Gryff experiences significantly less blocking than EPaxos. This is because EPaxos needs to have dependencies on writes, but Gryff’s rmw protocol does not keep track of dependencies on writes.

We see that Gryff and EPaxos each achieve a slightly higher maximum throughput than MultiPaxos due to their leaderless structure. (This is of course at low conflict rates, because at high conflict rates EPaxos and Gryff pay a stiff price.) It is easy to extend MultiPaxos to per-key sharded Paxos, and that would compete with and outdo Gryff and EPaxos. For achieving the best performance (for both throughput and latency) for a WAN key-value deployment, however, I suggest using our WPaxos protocol, as it can adapt to locality of access as well.

Comments

Thanks for the insightful blog post! It's great to see your interest in the idea that using consensus for all types of operations is overkill (both in this post and your earlier post about Hermes). There is definitely more work to be done in terms of optimizing read and write operations based on work from the shared register literature. But I'm happy to see interest even in these first iterations of this idea.

I also want to clarify a few subtleties.

First, Gryff and Hermes are designed for different system models. In particular, Gryff is designed for the asynchronous system model with crash (i.e., undetectable) failures. Hermes is designed for the partially synchronous model and is membership based (like Primary Backup, Chain Replication, CRAQ, etc.). It is very difficult to compare strengths and weaknesses across this big of a gap in system models.

Second, the related works section in the Gryff paper briefly discusses leases in this context. At the very least, time-based leases require stronger assumptions about the clock drift rate than what fully asynchronous systems require. Once you begin making these assumptions, it's not clear that leases are even the best you can do to improve performance! But we considered this to be outside the scope of the paper. I will add that MultiPaxos with time-based leader leases would still have larger read tail latency than Gryff (with 3 replicas) because the wide-area latency from the furthest clients to the leader is typically larger than the wide-area latency from any client to its nearest quorum of replicas.

Hope these clarifications are helpful! I'm also always happy to answer questions or chat about this research over email.

Best,

Matthew

First of all, fantastic work with Gryff, your carstamp approach is very interesting.

To clarify, Hermes works in the asynchronous model (w/o leases) with small overhead over its initial protocol (see Hermes w/o Loosely Synchronised Clocks at the end of the paper).

A similar approach could be used for multi-Paxos to allow for cheap reads w/o the need of leases. Maybe I need to write a short paper on it. :D

Although Hermes commits updates in just one RTT, it needs acknowledgments from all replicas. Instead, Gryff's updates might need more RTTs to commit but only needs to get acknowledgments from the nearest majority. This can provide Gryff a latency benefit over Hermes updates on the WAN environment.

P.S. You might also wanna take a look at "Kite" (PPoPP'20), which takes a similar approach to Gryff and separates read-writes and RMWs with ABD and Paxos in a slightly different context. This direction seems very interesting.

P.P.S. Thanks Murat for one more great summary.

Friendly,

-Antonis (one of Hermes' authors)