The Ben-Or decentralized consensus algorithm

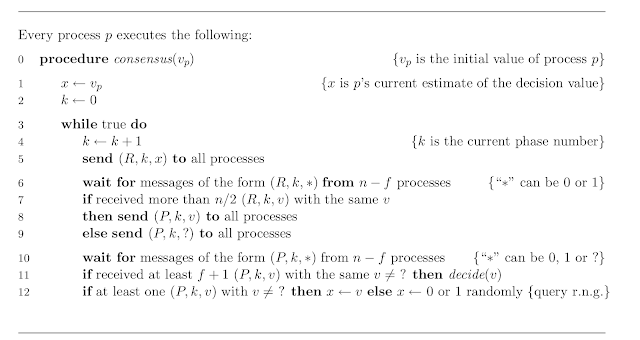

In PODC 1983, Michael Ben-Or published a randomized distributed asynchronous consensus algorithm in a paper titled "Another advantage of free choice (Extended Abstract): Completely asynchronous agreement protocols".

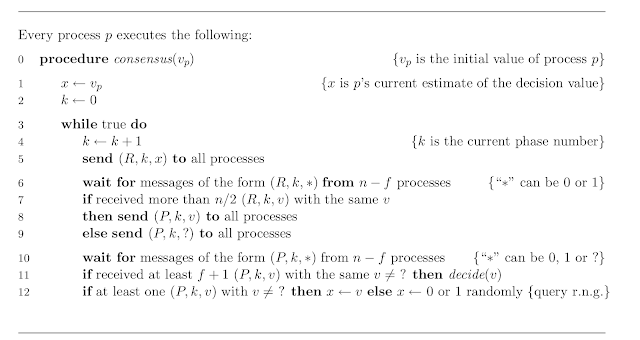

After 15 years, a paper in 1998 provided a correctness proof of Ben-Or's algorithm. Above is the pseudocode for Ben-Or from that paper. From the pseudocode it looks like Ben-Or is a very simple algorithm, but in reality its behavior is not easy to understand and appreciate. But fret not, TLA+ modeling and model checking helps a lot for understanding the behavior of the Ben-Or algorithm. It is fulfilling when you finally understand how the algorithm works, and how safety is always preserved and progress is achieved eventually probabilistically.

I had assigned modeling of Ben-Or as the TLA+ project for my distributed systems class. Here I share my PlusCal modeling of Ben-Or, and discuss how this model helps us to understand the algorithm better. Here is the link to the full model on Github, if you like to play with it.

This is the top level model of each process.

Ben-Or is a decentralized consensus algorithm, it is not a leader-based algorithm. In the absence of a leader for tie-breaking, Ben-Or solves only binary input consensus, rather than arbitrary input value consensus. Even then, it employs randomization for achieving progress. (I had discussed the pros and cons of leader-based versus leaderless consensus algorithms earlier.)

Ben-Or has rounds but, unlike Paxos, the rounds are not client-restricted and do not preempt each other. The rounds are common to all nodes, and they are traversed in a lock-step fashion by N-F nodes in an asynchronous manner, where N is the total number of nodes, and F the upper bound on the number of faulty nodes. Recently we have seen that threshold clock synchronization tries to make such a round concept more systematic.

Each round has two phases. In the first phase of a round, each node tries to identify a value supported by a majority in order to propose that for the second phase. In the second phase (the decision/ratification phase), a node finalizes its decision if it finds the same value proposed by at least F+1 nodes. Upon failure to get a decision in the current round, Ben-Or makes some nodes to change their votes before the next round. This helps jolt/tilt the system toward a decision, so that the system (eventually probabilistically) converges to a consensus value in one of the upcoming rounds.

The model uses the following process local variables. p1v denotes the value the node broadcasts in phase 1 of a round, and p2v denotes the value it broadcasts in phase 2 of a round. The decided variable at each node to store the final/terminal decision a node makes for consensus. Initially decided=-1, and only when the process decides, decided is set to 0 or 1. These variables are initialized as p1v=INPUT[self], p2v=-1, decided=-1. In the very first round, the p1v values are come from the INPUT value for each node, in the consequent rounds, the p1v is assigned as a function of the p2v values in EvalP2 function.

We use MAXROUND to bound the number of rounds a node can try to keep model checking feasible; e.g., MAXROUND=3 or 4 suffices to get the properties tested. We use INPUT to give initial values to nodes. This is binary consensus, so we use 0 or 1 as possible initial values. When we run our model, in the model overview pane, we can give values to these constants as follows: F <- 1, N <- 4, INPUT <- «0,1,1,1», MAXROUND <-3.

We model message passing among nodes by using a shared message board. This is simply a set defined as a global variable. We use p1Msg as the phase1 message board, and p2Msg as the phase2 message board. Each node broadcasts its p1v or p2v value by adding it to the corresponding message board via a simple set union. For example p1Msg:=p1Msg \union {<<self, r, p1v>>} adds p1v to the corresponding phase1 message board. This amounts to broadcasting the p1v value of the node, and any other node can on its own schedule read from p1Msg set what messages have been sent for a given round, r. This reading is done via set filtering. SentP1Msgs(r)=={m \in p1Msg: m[2]=r} returns all messages in broadcast for round r to the p1Msg board, and SentP1MsgsV(r,a)=={m \in p1Msg: m[2]=r /\ m[3]=a} returns all messages broadcast with round number r and value a.

In phase1, each node broadcasts its p1v to p1Msg, and then waits until there are at least N-F phase1 values shared in the channel for round r. This ensures that the nodes traverse the rounds in a lock-step fashion. Among the p1v values retrieved from the channel for round r, if the node sees a majority value $a \in {0,1}$, it adopts that value for its p2v value to propose in phase2 of this round. If the node does not see a majority value (which does not imply that there is no majority value present, because each node sees a different perspective of values), it adopts the default $p2v=-1$ value for proposing in phase2, meaning that it did not see either 0 or 1 as supported from phase 1 communication exchange.

Note that, since both 0 or 1 cannot be in the majority, the p2v value set for a round can be {-1}, {-1,0}, {-1,1}, {0} or {1}. But it is not possible have {-1,0,1} or {0,1} as the p2v value set because if either 0 or 1 is seen in the majority, it is not possible for the other value to be also in the majority.

In phase2, each node broadcasts its p2v to p2Msg, and then waits until there are at least N-F phase2 values shared in the channel for this round r. Among the p2v values retrieved from the channel for round r, if the node sees a value $a \in {0,1}$ sent by at least F nodes, it adopts that value for its decision value. Else if the node sees a 0 or 1 value sent by another node, it adopts this value for its p1v value for the upcoming round. This is an optimistic attempt for accumulating traction for a value that at least one node witnessed as majority among p1v values in this round. Otherwise, as the last resort, the node adopts a random 0 or 1 value as its p1v value for the next round. This serves as the random jolt to get the system tilt towards a value that can be witnessed as majority by some nodes so the case1 or case2 above applies for the round.

Why does the algorithm require seeing at least F nodes with the same p2v value before it is chosen for decision? If less than F nodes have seen a majority p1v value, say 1, and they prematurely decide on this value, it is possible that they may all crash after this decision. The other nodes would be following case2 and case3 of phase2, and it is quite possible that in the next round 0 is seen as majority value by some node, and the system then converges to decide on 0, violating the agreement property. While progress is probabilistic in Ben-Or, safety should and is always satisfied in Ben-Or.

Once a node sees at least F+1 nodes with the same p2v value, say 1, it decides. And it is guaranteed that in this phase 2, other available nodes also see at least one node with p2v=1, even if the F nodes that has proposed p2v=1 crashed. (They await for $N-F$ p2v values, remember.) This means that, in the worst case, in the next round all available processes will see majority p1v=1 among the N-F phase1 messages they receive, and decide in the phase2 of that round.

Okay, this brings us to the properties we check on this model.

Agreement says that for any two nodes j and k that decided, their decision values should be the same. Agreement should always be satisfied, even when F is more than N/2. Of course, if $F>N/2$, nodes will never be able to decide, because in phase1 a majority is required for proposing a 0 or 1 p2v value.

For testing progress for F<N/2, we use the Progress property that says eventually all processes decide something. If we start with the same p1v values at all nodes, progress is guaranteed for N>2F. In that case all nodes will see that value as the majority value and propose it as the p2v value in round 1, and they all decide in phase2 of that round.

But what about if the initial p1v values have a mixture of 0s and 1s? They may not decide because it may not be possible for any available node to observe a majority 0 or 1 from their sample of N-F values, and the default/skip value $p2v=-1$ will be proposed for the phase 2. When such an ambiguity is possible, the decision can then be postponed forever by choosing the worst possible "random" assignments to the nodes for the next round. This is of course not any more a uniformly random assignment of 0s or 1s to p1v, but a pathological/malicious value assignment by the model checker to show how the Progress can be violated.

For example for N=4 and F=1, INPUT=<<0,1,1,1>> will violate the Progress property. The model checker will hide the "p1v=1" from node, and since the other nodes would not see the value "1" in the majority (which is 3 for N=4), they will all propose p2v=-1. The model checker also will find the "random" assignments that will extend this indecision by not giving majority to any value. (On the other hand, for F=0, in this setup, the majority value of "1" will be seen by all nodes, and the system will decide in one round.)

The pathological runs will break the Progress property, but how do we check that under a mixed input set, there are some runs that terminate? To this end, we write a safety/invariant property called BaitProgress which claims that it is not possible for all processes to decide, and watch the model checker come up with a counterexample for how this property is violated, which implies that yest consensus/progress is solved for some runs.

If N=4 and INPUT=«0,1,1,1», is it possible to have 0 decided for a run? To test this we write a safety property called MinorityReport which claims that it is not possible for all the nodes to decide "0" as the consensus value. The model checker will try to prove us wrong by producing a counterexample. If you check this for F=1, we will find that yes, MinorityReport will be violated meaning that there exists an execution where all nodes will decide on 0 as the consensus value, even though it is clearly in the minority in the initial input set.

Texel is also a leaderless binary asynchronous consensus protocol. But Texel does not use rounds, and instead use consistent cuts to evaluate whether there is a consensus value safe to decide on. Texel assumes N>3F+1, so that a value supported by F+1 nodes (which is a majority of 2F+1) is visible to any process even after F nodes crash (or become unavailable otherwise). The problem is there may be two different supporting values, and this violates progress. Moreover, there is a risk that progress is still not guaranteed when all values are the same. When the consistent cut read of nodes (this is called experimentation phase, which is somewhat similar to a phase1 in Ben-Or) conflict with each other, the read is aborted and restarted from scratch. So it is possible that the decision is postponed forever by conflicting experiment phases, even when all input values are the same.

As I discussed earlier, a binary consensus is still useful for blockchain transaction. A well-formed client should avoid double spending, and would not propose two different transactions with the same UTXO, and therefore they are guaranteed for liveness. However liveness is not guaranteed (but safety still holds) for double-spending transactions submitted by Byzantine clients, which conflict with one another. In this case the liveness violation is a feature not a bug.

Avalanche extended Texel's leaderless consensus approach in terms of scalability and quick finality (by using sampling and metastability), and applied the resultant decentralized algorithm in the blockchain domain. I assume a similar transformation is possible for extending Ben-Or to a sampling based solution. Even though Ben-Or is round based unlike Texel, I think via sampling and momentum we can avoid the requirement of the rounds, similar to how Avalanche avoided the requirement of consistent reads in Texel.

After 15 years, a paper in 1998 provided a correctness proof of Ben-Or's algorithm. Above is the pseudocode for Ben-Or from that paper. From the pseudocode it looks like Ben-Or is a very simple algorithm, but in reality its behavior is not easy to understand and appreciate. But fret not, TLA+ modeling and model checking helps a lot for understanding the behavior of the Ben-Or algorithm. It is fulfilling when you finally understand how the algorithm works, and how safety is always preserved and progress is achieved eventually probabilistically.

I had assigned modeling of Ben-Or as the TLA+ project for my distributed systems class. Here I share my PlusCal modeling of Ben-Or, and discuss how this model helps us to understand the algorithm better. Here is the link to the full model on Github, if you like to play with it.

Outline of the algorithm

This is the top level model of each process.

Ben-Or is a decentralized consensus algorithm, it is not a leader-based algorithm. In the absence of a leader for tie-breaking, Ben-Or solves only binary input consensus, rather than arbitrary input value consensus. Even then, it employs randomization for achieving progress. (I had discussed the pros and cons of leader-based versus leaderless consensus algorithms earlier.)

Ben-Or has rounds but, unlike Paxos, the rounds are not client-restricted and do not preempt each other. The rounds are common to all nodes, and they are traversed in a lock-step fashion by N-F nodes in an asynchronous manner, where N is the total number of nodes, and F the upper bound on the number of faulty nodes. Recently we have seen that threshold clock synchronization tries to make such a round concept more systematic.

Each round has two phases. In the first phase of a round, each node tries to identify a value supported by a majority in order to propose that for the second phase. In the second phase (the decision/ratification phase), a node finalizes its decision if it finds the same value proposed by at least F+1 nodes. Upon failure to get a decision in the current round, Ben-Or makes some nodes to change their votes before the next round. This helps jolt/tilt the system toward a decision, so that the system (eventually probabilistically) converges to a consensus value in one of the upcoming rounds.

The model uses the following process local variables. p1v denotes the value the node broadcasts in phase 1 of a round, and p2v denotes the value it broadcasts in phase 2 of a round. The decided variable at each node to store the final/terminal decision a node makes for consensus. Initially decided=-1, and only when the process decides, decided is set to 0 or 1. These variables are initialized as p1v=INPUT[self], p2v=-1, decided=-1. In the very first round, the p1v values are come from the INPUT value for each node, in the consequent rounds, the p1v is assigned as a function of the p2v values in EvalP2 function.

We use MAXROUND to bound the number of rounds a node can try to keep model checking feasible; e.g., MAXROUND=3 or 4 suffices to get the properties tested. We use INPUT to give initial values to nodes. This is binary consensus, so we use 0 or 1 as possible initial values. When we run our model, in the model overview pane, we can give values to these constants as follows: F <- 1, N <- 4, INPUT <- «0,1,1,1», MAXROUND <-3.

Phase1 and Phase2 actions

We model message passing among nodes by using a shared message board. This is simply a set defined as a global variable. We use p1Msg as the phase1 message board, and p2Msg as the phase2 message board. Each node broadcasts its p1v or p2v value by adding it to the corresponding message board via a simple set union. For example p1Msg:=p1Msg \union {<<self, r, p1v>>} adds p1v to the corresponding phase1 message board. This amounts to broadcasting the p1v value of the node, and any other node can on its own schedule read from p1Msg set what messages have been sent for a given round, r. This reading is done via set filtering. SentP1Msgs(r)=={m \in p1Msg: m[2]=r} returns all messages in broadcast for round r to the p1Msg board, and SentP1MsgsV(r,a)=={m \in p1Msg: m[2]=r /\ m[3]=a} returns all messages broadcast with round number r and value a.

In phase1, each node broadcasts its p1v to p1Msg, and then waits until there are at least N-F phase1 values shared in the channel for round r. This ensures that the nodes traverse the rounds in a lock-step fashion. Among the p1v values retrieved from the channel for round r, if the node sees a majority value $a \in {0,1}$, it adopts that value for its p2v value to propose in phase2 of this round. If the node does not see a majority value (which does not imply that there is no majority value present, because each node sees a different perspective of values), it adopts the default $p2v=-1$ value for proposing in phase2, meaning that it did not see either 0 or 1 as supported from phase 1 communication exchange.

Note that, since both 0 or 1 cannot be in the majority, the p2v value set for a round can be {-1}, {-1,0}, {-1,1}, {0} or {1}. But it is not possible have {-1,0,1} or {0,1} as the p2v value set because if either 0 or 1 is seen in the majority, it is not possible for the other value to be also in the majority.

In phase2, each node broadcasts its p2v to p2Msg, and then waits until there are at least N-F phase2 values shared in the channel for this round r. Among the p2v values retrieved from the channel for round r, if the node sees a value $a \in {0,1}$ sent by at least F nodes, it adopts that value for its decision value. Else if the node sees a 0 or 1 value sent by another node, it adopts this value for its p1v value for the upcoming round. This is an optimistic attempt for accumulating traction for a value that at least one node witnessed as majority among p1v values in this round. Otherwise, as the last resort, the node adopts a random 0 or 1 value as its p1v value for the next round. This serves as the random jolt to get the system tilt towards a value that can be witnessed as majority by some nodes so the case1 or case2 above applies for the round.

Why does the algorithm require seeing at least F nodes with the same p2v value before it is chosen for decision? If less than F nodes have seen a majority p1v value, say 1, and they prematurely decide on this value, it is possible that they may all crash after this decision. The other nodes would be following case2 and case3 of phase2, and it is quite possible that in the next round 0 is seen as majority value by some node, and the system then converges to decide on 0, violating the agreement property. While progress is probabilistic in Ben-Or, safety should and is always satisfied in Ben-Or.

Once a node sees at least F+1 nodes with the same p2v value, say 1, it decides. And it is guaranteed that in this phase 2, other available nodes also see at least one node with p2v=1, even if the F nodes that has proposed p2v=1 crashed. (They await for $N-F$ p2v values, remember.) This means that, in the worst case, in the next round all available processes will see majority p1v=1 among the N-F phase1 messages they receive, and decide in the phase2 of that round.

Okay, this brings us to the properties we check on this model.

Safety and Progress properties

Agreement says that for any two nodes j and k that decided, their decision values should be the same. Agreement should always be satisfied, even when F is more than N/2. Of course, if $F>N/2$, nodes will never be able to decide, because in phase1 a majority is required for proposing a 0 or 1 p2v value.

For testing progress for F<N/2, we use the Progress property that says eventually all processes decide something. If we start with the same p1v values at all nodes, progress is guaranteed for N>2F. In that case all nodes will see that value as the majority value and propose it as the p2v value in round 1, and they all decide in phase2 of that round.

But what about if the initial p1v values have a mixture of 0s and 1s? They may not decide because it may not be possible for any available node to observe a majority 0 or 1 from their sample of N-F values, and the default/skip value $p2v=-1$ will be proposed for the phase 2. When such an ambiguity is possible, the decision can then be postponed forever by choosing the worst possible "random" assignments to the nodes for the next round. This is of course not any more a uniformly random assignment of 0s or 1s to p1v, but a pathological/malicious value assignment by the model checker to show how the Progress can be violated.

For example for N=4 and F=1, INPUT=<<0,1,1,1>> will violate the Progress property. The model checker will hide the "p1v=1" from node, and since the other nodes would not see the value "1" in the majority (which is 3 for N=4), they will all propose p2v=-1. The model checker also will find the "random" assignments that will extend this indecision by not giving majority to any value. (On the other hand, for F=0, in this setup, the majority value of "1" will be seen by all nodes, and the system will decide in one round.)

The pathological runs will break the Progress property, but how do we check that under a mixed input set, there are some runs that terminate? To this end, we write a safety/invariant property called BaitProgress which claims that it is not possible for all processes to decide, and watch the model checker come up with a counterexample for how this property is violated, which implies that yest consensus/progress is solved for some runs.

If N=4 and INPUT=«0,1,1,1», is it possible to have 0 decided for a run? To test this we write a safety property called MinorityReport which claims that it is not possible for all the nodes to decide "0" as the consensus value. The model checker will try to prove us wrong by producing a counterexample. If you check this for F=1, we will find that yes, MinorityReport will be violated meaning that there exists an execution where all nodes will decide on 0 as the consensus value, even though it is clearly in the minority in the initial input set.

MAD questions

How does this compare with Texel, another decentralized consensus protocol?Texel is also a leaderless binary asynchronous consensus protocol. But Texel does not use rounds, and instead use consistent cuts to evaluate whether there is a consensus value safe to decide on. Texel assumes N>3F+1, so that a value supported by F+1 nodes (which is a majority of 2F+1) is visible to any process even after F nodes crash (or become unavailable otherwise). The problem is there may be two different supporting values, and this violates progress. Moreover, there is a risk that progress is still not guaranteed when all values are the same. When the consistent cut read of nodes (this is called experimentation phase, which is somewhat similar to a phase1 in Ben-Or) conflict with each other, the read is aborted and restarted from scratch. So it is possible that the decision is postponed forever by conflicting experiment phases, even when all input values are the same.

As I discussed earlier, a binary consensus is still useful for blockchain transaction. A well-formed client should avoid double spending, and would not propose two different transactions with the same UTXO, and therefore they are guaranteed for liveness. However liveness is not guaranteed (but safety still holds) for double-spending transactions submitted by Byzantine clients, which conflict with one another. In this case the liveness violation is a feature not a bug.

Avalanche extended Texel's leaderless consensus approach in terms of scalability and quick finality (by using sampling and metastability), and applied the resultant decentralized algorithm in the blockchain domain. I assume a similar transformation is possible for extending Ben-Or to a sampling based solution. Even though Ben-Or is round based unlike Texel, I think via sampling and momentum we can avoid the requirement of the rounds, similar to how Avalanche avoided the requirement of consistent reads in Texel.

Comments