Paper summary: Bitcoin-NG -- A scalable Blockchain Protocol

This week in our seminar, we discussed the Bitcoin-NG paper. The paper appeared in NSDI '16 and is authored by Ittay Eyal, Adem Efe Gencer, Emin Gün Sirer, and Robbert van Renesse at Cornell University.

The model section of this paper is very well formalized and written. This is like a Rosetta Stone find for classical distributed systems researchers that want to enter blockchain research. So in this summary, I will start by covering as much of that as I can.

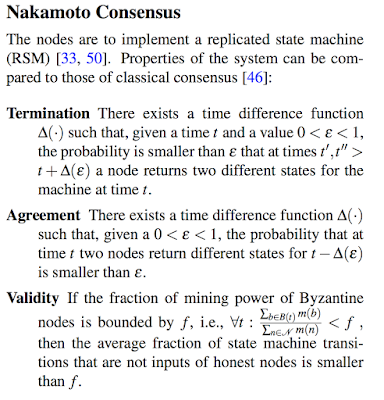

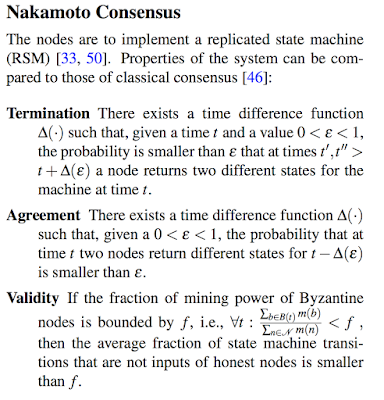

The Nakamoto Consensus Problem

The blockchain system is composed of a set of nodes N connected by a reliable peer-to-peer network. Nodes can generate public-private key pairs for themselves. The system employs a cryptopuzzle system, defined by a cryptographic hash function H. The solution to a puzzle defined by the string y is a string x such that H(y|x), the hash of the concatenation of the two, is smaller than some target (roughly, the hash has at least k leading zeros). Each node i has a limited amount of compute power, called mining power, measured by the number of potential puzzle solutions it can try per second. A solution to a puzzle constitutes a "proof of work", as it statistically indicates the amount of work a node had to perform in order to find it.

At any time t, a subset of nodes B(t) are Byzantine and can behave arbitrarily, controlled by a single adversary. The mining power of the Byzantine nodes is less than 1/4 of the total compute power at any given time.
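The cryptopuzzle described above can be sketched in a few lines of Python. This is only an illustration under assumptions of my own: SHA-256 stands in for H, the candidate x is an 8-byte counter, and the names `solves_puzzle` and `mine` are made up for this example rather than taken from the paper or from Bitcoin's code.

```python
import hashlib
from typing import Optional


def solves_puzzle(y: bytes, x: bytes, target: int) -> bool:
    """Return True if H(y|x), read as a big-endian integer, is below target."""
    digest = hashlib.sha256(y + x).digest()  # assumption: H is SHA-256
    return int.from_bytes(digest, "big") < target


def mine(y: bytes, leading_zero_bits: int, max_tries: int = 1_000_000) -> Optional[bytes]:
    """Brute-force a solution x; each loop iteration is one unit of mining work."""
    # A 256-bit hash with k leading zero bits is exactly a hash below 2^(256-k).
    target = 1 << (256 - leading_zero_bits)
    for nonce in range(max_tries):
        x = nonce.to_bytes(8, "big")
        if solves_puzzle(y, x, target):
            return x
    return None  # no solution found within the work budget
```

Finding x takes about 2^k tries on average, while checking a claimed solution takes a single hash. That asymmetry is what lets a solution serve as a proof of work.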

Comparing the Nakamoto consensus problem with the classic Byzantine consensus problem is very instructive. Nakamoto consensus has probabilistic guarantees for Termination, Agreement, and Validity, whereas classic Byzantine consensus has deterministic guarantees for them.

Bitcoin's Blockchain protocol

Bitcoin provides a Byzantine fault tolerant blockchain protocol for implementing a decentralized cryptocurrency. Each user commands addresses, and sends Bitcoins by forming a transaction from her address to another's address and sending it to the Bitcoin mining nodes. Transactions are protected with cryptographic techniques that ensure only the rightful owner of a Bitcoin address can transfer funds from it. A client owns x Bitcoins at time t if the aggregate of unspent outputs to its address is x. Miners accept transactions only if their sources have not been spent, preventing users from double-spending their funds. The miners commit the transactions into a global append-only log called the blockchain. If multiple miners create blocks with the same preceding block, the chain is forked into branches, forming a tree. All miners add blocks to the heaviest chain of which they know, with random tie-breaking.

If you want to understand the blockchain data structure, this YouTube video and demo is fantastic for it.
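To make the bookkeeping rule concrete, here is a minimal sketch of "balance equals the sum of unspent outputs" and of the double-spend check. The `Output` record, the function names, and the integer amounts are all assumptions made for illustration; real Bitcoin transactions also carry scripts and signatures, which are left out here.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class Output:
    tx_id: str    # hypothetical identifier of the creating transaction
    index: int    # position of this output within that transaction
    address: str  # owner of the output
    amount: int   # value in the smallest currency unit


def balance(unspent: Set[Output], address: str) -> int:
    """A client owns the aggregate of the unspent outputs sent to its address."""
    return sum(o.amount for o in unspent if o.address == address)


def apply_transaction(unspent: Set[Output], spent: Iterable[Output],
                      created: Iterable[Output]) -> Set[Output]:
    """Accept a transaction only if every source is still unspent."""
    spent, created = list(spent), list(created)
    if any(o not in unspent for o in spent):
        raise ValueError("source already spent or unknown (double spend)")
    if sum(o.amount for o in created) > sum(o.amount for o in spent):
        raise ValueError("outputs exceed inputs")
    return (unspent - set(spent)) | set(created)
```

Replaying the same transaction against the updated set fails, because its sources are no longer in the unspent set.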

Unfortunately, Bitcoin is haunted by scalability woes. The maximum rate at which Bitcoin can process transactions is capped by the block size and the block interval.

Increasing the block size (which is currently set at 1 MB) improves throughput, but the resulting bigger blocks take longer to propagate in the network. Of course, increasing it to 10 MB will not cause a significant propagation problem; after all, bandwidth is not that limited. Taken to the extreme, however, the tradeoff is real. Moreover, every second of head start counts for mining the next block: a miner that receives a new block late wastes resources building on an old block that is no longer the most recent.

Reducing the block interval reduces latency, but leads to instability due to frequent forks. Bitcoin currently targets a conservative 10 minutes between blocks, yielding 10-minute expected latencies for transactions to be encoded in the blockchain.

With this setup, Bitcoin yields a wimpy 1 to 3.5 transactions per second. Transaction finalization is also problematic. If you wait for 6 blocks to be appended before declaring a transaction finalized, that takes an expected 60 minutes.
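The arithmetic behind these numbers is simple enough to write down. The sketch below is a back-of-the-envelope calculation; the 500-byte average transaction size is an assumption of mine (real sizes vary), and the function names are illustrative.

```python
def transactions_per_second(block_size_bytes: int, avg_tx_bytes: int,
                            block_interval_s: float) -> float:
    """Throughput cap: transactions that fit in a block, per block interval."""
    return (block_size_bytes / avg_tx_bytes) / block_interval_s


def expected_confirmation_minutes(confirmations: int,
                                  block_interval_s: float) -> float:
    """Expected wait until the given number of blocks has been appended."""
    return confirmations * block_interval_s / 60


# 1 MB blocks, an assumed 500-byte average transaction, 10-minute interval.
tps = transactions_per_second(1_000_000, 500, 600)
wait = expected_confirmation_minutes(6, 600)
print(f"{tps:.2f} tx/s, {wait:.0f} minutes for 6 confirmations")
```

Under these assumptions the cap comes out at roughly 3.3 transactions per second, inside the 1 to 3.5 range quoted above, and the only knobs available are the block size and the block interval.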

Bitcoin-NG

Bitcoin-NG is a protocol that improves on Bitcoin in terms of transaction throughput and latency. Bitcoin-NG’s latency is limited only by the propagation delay of the network, and its bandwidth is limited only by the processing capacity of the individual nodes. Bitcoin-NG achieves this performance improvement by decoupling Bitcoin’s blockchain operation into two planes: leader election and transaction serialization. In Bitcoin-NG, consensus is needed only to identify the leader, and serialization is performed by the leader. This seems to me to be a classic application of chain replication (although the paper does not cite chain replication).

Bitcoin-NG divides time into epochs, where each epoch has a single leader. As in Bitcoin, leader election is performed randomly and infrequently via proof-of-work. Once a leader is chosen, it is entitled to serialize transactions via microblocks unilaterally until a new leader is chosen, marking the end of the former’s epoch.

Thus the protocol introduces two types of blocks: key blocks for leader election and microblocks that contain the ledger entries. Like a Bitcoin block, a key block contains the reference to the previous block (either a key block or a microblock, usually the latter), the current Unix time, a coinbase transaction to pay out the reward, a target value, and a nonce field containing arbitrary bits. Unlike Bitcoin, a key block in Bitcoin-NG contains a public key that will be used in subsequent microblocks.

Once a node generates a key block it becomes the leader. As a leader, the node is allowed to generate microblocks at a set rate smaller than a predefined maximum. (Specifically, if the timestamp of a microblock is in the future, or if its difference with its predecessor's timestamp is smaller than the minimum, then the microblock is invalid. This prohibits a greedy leader from swamping the system with microblocks.) A microblock contains ledger entries and a header. The header contains the reference to the previous block, the current Unix time, a cryptographic hash of its ledger entries, and a cryptographic signature of the header. The signature uses the private key that matches the public key in the latest key block in the chain.
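The timestamp rule for microblocks can be sketched as follows. The header fields mirror the list above, but the class name, field names, and the `min_interval_s` parameter are assumptions for illustration; signature verification against the key block's public key is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class MicroblockHeader:
    prev_hash: str     # reference to the previous block
    timestamp: float   # Unix time claimed by the leader
    entries_hash: str  # hash of the ledger entries
    signature: bytes   # signed with the key announced in the latest key block


def timestamp_is_valid(header: MicroblockHeader, prev_timestamp: float,
                       now: float, min_interval_s: float) -> bool:
    """Apply the two rate-limiting checks described in the text."""
    if header.timestamp > now:
        return False  # timestamp lies in the future
    if header.timestamp - prev_timestamp < min_interval_s:
        return False  # produced too soon after its predecessor
    return True
```

A full validity check would also verify the signature and the hash of the ledger entries; only the rate limit is modeled here.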

Microblock fork prevention is essential for this system, since the leader can spit out many microblocks quickly to make more money from transaction fees. To report on a leader that violates the microblock production rate, a poison transaction is employed. The poison transaction contains the header of the first block in the pruned branch as a proof of fraud, and it has to be placed on the blockchain within the maturity window of the misbehaving leader’s key block, and before the revenue is spent by the malicious leader.

The new leader cannot fake an offending microblock to accuse the old leader, because the header included in the poison transaction must carry a signature made with the previous leader's private key. Moreover, the 40/60 partitioning of credit for microblock transactions between the old leader and the new leader incentivizes the new leader not to cut the microblock set short, because it would like to get more revenue from them. So the new leader that mines the latest key block has every incentive to behave according to the protocol. The paper shows via mathematical modeling that the 40/60 partitioning of the credit satisfies these constraints.
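As a tiny worked example of the 40/60 partitioning, the sketch below splits the fees of the microblock transactions between the leader that serialized them and the leader that mines the next key block. The function name and the integer fee units are assumptions for illustration.

```python
from typing import Tuple


def split_fees(total_fees: int, old_leader_pct: int = 40) -> Tuple[int, int]:
    """Return (old_leader_share, new_leader_share) for a set of microblock fees."""
    old_share = total_fees * old_leader_pct // 100
    new_share = total_fees - old_share  # remainder goes to the next leader
    return old_share, new_share


old_share, new_share = split_fees(1000)
print(old_share, new_share)  # 400 600
```

Because the new leader's share grows with every microblock it builds on, pruning the old leader's microblocks costs it revenue.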

It looks like the microblocks need not be chained in a sequence. Rather, the microblocks form a set that follows the key block of the corresponding leader and satisfies the rate limitations prescribed in the protocol. So which microblock set is taken as authoritative, given that slightly different sets of microblocks may arrive at different nodes of the network? It looks like the new leader (the one that mines the next key block) is the one to authoritatively decide on that.

MAD questions

1) In the Bitcoin protocol, if we increase the block size, what could go wrong? That would definitely help with the throughput problems Bitcoin is facing. It would mean a slight increase in delivery latency, depending on the bandwidth available on the path. But even increasing it to 10 MB is unlikely to break anything, I think. (But hey, I am a newbie in this space.) So why are there endless debates about this in the Bitcoin community? Could it be that the small blockers are actually more concerned about the increase in transaction volume, which would make the history grow quickly and make being a full Bitcoin node (rather than just a client) more of a burden? But isn't that just delaying the inevitable? Is the idea here to delay the inevitable until more storage space and computing become available? That sounds so wrong to me as an engineer.

2) It looks like a hard fork will be needed for switching to the Bitcoin-NG protocol, because the current Bitcoin clients are unaware of the concept of microblocks. Oh, well, good luck with getting a "consensus" on a hard fork in the Bitcoin protocol. In his latest blog post "Why Decentralization Matters", Chris Dixon cited as the biggest problem with centralized services that they can change the rules on their users: "The good news is that billions of people got access to amazing technologies, many of which were free to use. The bad news is that it became much harder for startups, creators, and other groups to grow their internet presence without worrying about centralized platforms changing the rules on them, taking away their audiences and profits. This in turn stifled innovation, making the internet less interesting and dynamic."

On the other hand, the "community-governed" decentralized protocols seem to be haunted by the reverse problem: It is very hard to change the rules even to fix problems with the protocol. Isn't that as big, if not a bigger, problem as the former for stifling innovation?
