TLA+/PlusCal modeling of Synchronized Round Consensus Algorithm: Solution

The other day I posed the synchronized round consensus question.

Here is the solution on GitHub, and some brief explanation of the relevant part below.

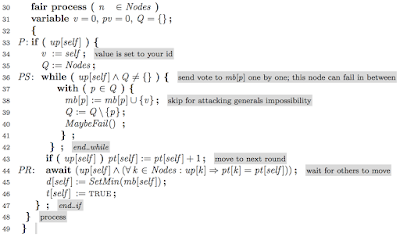

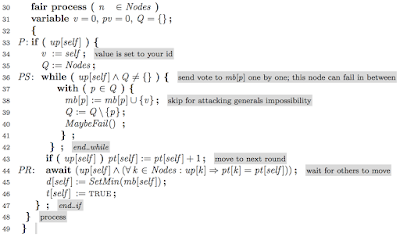

The code above is pretty straightforward. The while loop between lines 36-42 models how a node sends its vote to other nodes one by one. The sender node can fail in this while loop after some of the nodes received the vote. So if we model check with FAILNUM=1, the agreement invariant is violated in this single round algorithm as seen in the error trace below.

The blue highlighted line, state 15, is the last line in the error trace, and the value of the state variables are listed in the window below. If you inspect "up" you can see node 1 is down. Checking "mb" you can see node 2 received node 1's vote, but node 3 did not receive node 1's node. As a result, the decision "d" for node 2 is "1", whereas node 3 decides "2", and both decisions are finalized. So the invariant "Agreement" is violated.

(Note that even if we had 10 nodes, and FAILNUM=8, we could have extended this scenario by failing always the smallest id up node in each round after it delivers the message to the next node in the sequence keeping the "1" vote alive but hidden.)

Another interesting part in the code occurs at lines 43 and 44.

After sending its vote to the other nodes, the node increments its round number, pt, by 1. But then it "awaits" other nodes to catchup, and goes to the next round only after this synchronization await at line 44. Note that the node awaits only for the nodes that are "up". If it waits for a down node to increment its pt+1, it would have to wait forever.

This await at line 44 cuts corners: it assumes shared memory instead of message passing. One way to implement this unrealistic "await" is to use physical time, but even that is a brittle method. In reality it is hard to implement perfectly synchronized rounds. Physical clock synchronization is hard, and since the OS is not a real-time OS, timing assumptions can be violated, say due to garbage collection kicking in, or due to VM/container getting slow, or network contention.

When the round synchronization assumption is broken, this algorithm fails. Trust me, you don't want your consensus algorithm, that the rest of your coordination infrastructure depends on, to fail. That is why consensus algorithms adopted in practice, such as Paxos, Raft, Zab, Viewstamped Replication, do not rely on synchronized rounds, and can tolerate (in the sense that agreement is not violated) extreme asynchrony in the system. When the timing assumptions normalize a bit, those algorithms then achieve progress and solve consensus.

To this end, Line 32 introduces a top-level while loop to the original model iterate through multiple rounds.

The important question here is to determine which round is safe to decide.

The trivial way to do this is to always iterate FAILNUM+1 rounds before deciding, but that is a wasteful solution. FAILNUM is the maximum number of faults that can occur, and the algorithm should be able to decide in less number of rounds in the common case when faults do not occur. But how do you tell that asymmetrically, only using the node's own perception of the system, which is by definition partial and always slightly stale.

One way to do this is to look at the stability of the set of proposed votes and compare mb, the mailbox contents for this round, with pmb, the mailbox contents in the previous round. If there is a potential for fault, it follows that the algorithm should always go to round 2, to confirm with others. The delaying of the decision should continue until the proposed votes converge to a single value for two consecutive rounds and the cardinality of the mailbox is also important because it witnesses to the fact that there are no faults so the above pathological crash sequence of vote hiding is avoided.

I found out these mistakes when I started writing this blog post. So writing and explaining is an indispensable part of the design process. If TLA+ model checking was more performant, I wouldn't give up prematurely, and I would still know about the solution. If model checking is taking long, it may be best to do it on the cloud, say on an AWS, Azure, or GCE. But the state space explosion is an inherent and dangerous nemesis for model-checking and it will bite. The best precaution is to keep things simple/minimal in the modeling. But that is not always easy.

Here is the solution on GitHub, and some brief explanation of the relevant part below.

Single round consensus algorithm

The code above is pretty straightforward. The while loop between lines 36-42 models how a node sends its vote to other nodes one by one. The sender node can fail in this while loop after some of the nodes received the vote. So if we model check with FAILNUM=1, the agreement invariant is violated in this single round algorithm as seen in the error trace below.

The blue highlighted line, state 15, is the last line in the error trace, and the value of the state variables are listed in the window below. If you inspect "up" you can see node 1 is down. Checking "mb" you can see node 2 received node 1's vote, but node 3 did not receive node 1's node. As a result, the decision "d" for node 2 is "1", whereas node 3 decides "2", and both decisions are finalized. So the invariant "Agreement" is violated.

(Note that even if we had 10 nodes, and FAILNUM=8, we could have extended this scenario by failing always the smallest id up node in each round after it delivers the message to the next node in the sequence keeping the "1" vote alive but hidden.)

Another interesting part in the code occurs at lines 43 and 44.

After sending its vote to the other nodes, the node increments its round number, pt, by 1. But then it "awaits" other nodes to catchup, and goes to the next round only after this synchronization await at line 44. Note that the node awaits only for the nodes that are "up". If it waits for a down node to increment its pt+1, it would have to wait forever.

This await at line 44 cuts corners: it assumes shared memory instead of message passing. One way to implement this unrealistic "await" is to use physical time, but even that is a brittle method. In reality it is hard to implement perfectly synchronized rounds. Physical clock synchronization is hard, and since the OS is not a real-time OS, timing assumptions can be violated, say due to garbage collection kicking in, or due to VM/container getting slow, or network contention.

When the round synchronization assumption is broken, this algorithm fails. Trust me, you don't want your consensus algorithm, that the rest of your coordination infrastructure depends on, to fail. That is why consensus algorithms adopted in practice, such as Paxos, Raft, Zab, Viewstamped Replication, do not rely on synchronized rounds, and can tolerate (in the sense that agreement is not violated) extreme asynchrony in the system. When the timing assumptions normalize a bit, those algorithms then achieve progress and solve consensus.

Crash tolerant synchronized round consensus algorithm

To tolerate crash faults, it is clear that the algorithm needs to be extended to delay decision to future rounds where each node can ensure that all the nodes have the same set of values from which to decide.To this end, Line 32 introduces a top-level while loop to the original model iterate through multiple rounds.

The important question here is to determine which round is safe to decide.

The trivial way to do this is to always iterate FAILNUM+1 rounds before deciding, but that is a wasteful solution. FAILNUM is the maximum number of faults that can occur, and the algorithm should be able to decide in less number of rounds in the common case when faults do not occur. But how do you tell that asymmetrically, only using the node's own perception of the system, which is by definition partial and always slightly stale.

One way to do this is to look at the stability of the set of proposed votes and compare mb, the mailbox contents for this round, with pmb, the mailbox contents in the previous round. If there is a potential for fault, it follows that the algorithm should always go to round 2, to confirm with others. The delaying of the decision should continue until the proposed votes converge to a single value for two consecutive rounds and the cardinality of the mailbox is also important because it witnesses to the fact that there are no faults so the above pathological crash sequence of vote hiding is avoided.

Observations

I had written a faulty version of the multi-round algorithm in my first try. I had not taken the cardinality of the set into account and went with straightforward set union. It didn't give any violations of the invariant for N=5 and FAILNUM=3, but the progress part was taking more than an hour on my laptop and I stopped running it. Turns out that version was susceptible to the pathological crash sequence and vote hiding as above. All the nodes decide with "2" but there is this node who just received a "1" vote which was still alive but hidden. So this node goes to next round, but since others have decided, this node will await forever. This is a wacky flavor of consensus, which can still be acceptable maybe if this minority report node kills itself with up:=FALSE. This led me to improve the line 44 condition. Another bug was about a node in a higher round sending messages which gets consumed by a node in a lower round, which leads to nodes getting stuck. To solve this, I had to improve the condition at line 37.I found out these mistakes when I started writing this blog post. So writing and explaining is an indispensable part of the design process. If TLA+ model checking was more performant, I wouldn't give up prematurely, and I would still know about the solution. If model checking is taking long, it may be best to do it on the cloud, say on an AWS, Azure, or GCE. But the state space explosion is an inherent and dangerous nemesis for model-checking and it will bite. The best precaution is to keep things simple/minimal in the modeling. But that is not always easy.

Comments

Thank you so much for this wonderful article really!

If someone want to read more about that Funny Buffalo I think this is the right place for you!