Paper Summary: DeepXplore, Automated Whitebox Testing of Deep Learning Systems

This paper was posted to arXiv in May 2017, and is authored by Kexin Pei, Yinzhi Cao, Junfeng Yang, and Suman Jana of Columbia and Lehigh Universities.

The paper proposes a framework to automatically generate inputs that trigger/cover different parts of a Deep Neural Network (DNN) for inference and identify incorrect behaviors.

It is easy to see the motivation for high-coverage testing of DNNs. We use DNN inference for safety-critical tasks such as self-driving cars. A DNN gives us results, but we don't know how it works or how well it works. DNN inference is opaque, and we have no guarantee that it will not mess up spectacularly on an input that is slightly different from the ones on which it succeeded. There are too many corner cases to consider for input-based testing, and rote testing will not be able to cover all bases.

DeepXplore goes about DNN inference testing in an intelligent manner. It shows that finding inputs triggering differential behaviors while achieving high neuron coverage for DL algorithms can be represented as a joint optimization problem and solved efficiently using gradient-based optimization techniques. (Gradients of DNNs with respect to inputs can be calculated accurately and can be used to solve this joint optimization problem efficiently.)
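To make the joint optimization concrete, here is the objective as I read it from the paper; treat the notation as my paraphrase rather than an exact quotation. $F_k(x)[c]$ is the probability that the $k$-th DNN assigns to class $c$ (the class all DNNs agree on for the seed), $j$ is the DNN chosen to deviate, $f_n(x)$ is the output of a currently inactive neuron $n$, and $\lambda_1$, $\lambda_2$, and the step size $s$ are hyperparameters.

$$
\begin{aligned}
\mathrm{obj}_1(x) &= \sum_{k \neq j} F_k(x)[c] \;-\; \lambda_1 \, F_j(x)[c] \\
\mathrm{obj}_2(x) &= f_n(x) \\
\mathrm{obj}_{\mathrm{joint}}(x) &= \mathrm{obj}_1(x) + \lambda_2 \, \mathrm{obj}_2(x) \\
x &\leftarrow x + s \, \nabla_x \, \mathrm{obj}_{\mathrm{joint}}(x)
\end{aligned}
$$

The first term pushes DNN $j$ away from class $c$ while keeping the other DNNs on it, and the second term pushes an uncovered neuron above its activation threshold.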

DeepXplore also leverages multiple DL systems with similar functionality as cross-referencing oracles and thus avoids manual checking for erroneous behaviors. For example, one could feed the same driving video to the DNNs of Uber, Google, and Waymo and compare their outputs. Majority voting determines the correct behavior. DeepXplore counts on a majority of the independently trained DNNs not being susceptible to the same bug. This is similar to N-version programming for building resilience against software bugs.
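The cross-referencing idea can be sketched in a few lines of Python. This is only an illustration of majority voting over model outputs, not the paper's code; the function name `differential_oracle` and the model names are made up for the example.

```python
from collections import Counter

def differential_oracle(predictions):
    """Majority-vote oracle over several models' predictions for ONE input.

    predictions: dict mapping a (hypothetical) model name to its predicted label.
    Returns (majority_label, dissenters). If no label has a strict majority,
    returns (None, []) because the oracle cannot decide.
    """
    counts = Counter(predictions.values())
    label, votes = counts.most_common(1)[0]
    if votes <= len(predictions) / 2:
        return None, []
    # Models that disagree with the majority are the suspected erroneous ones.
    dissenters = sorted(name for name, p in predictions.items() if p != label)
    return label, dissenters

# Three illustrative models looking at the same driving frame.
preds = {"dnn_a": "left", "dnn_b": "left", "dnn_c": "right"}
label, dissenters = differential_oracle(preds)
print(label, dissenters)
```

Note that the oracle is only as good as the independence assumption: if most models share the same blind spot, the vote endorses the wrong answer.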

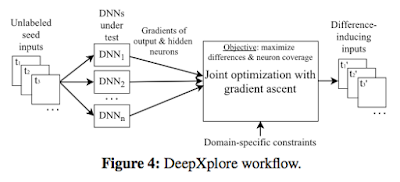

Here is the DeepXplore workflow for generating test images. DeepXplore takes unlabeled test inputs as seeds and generates new test inputs that cover a large number of different neurons (i.e., activate them to a value above a customizable threshold) while causing the tested DNNs to behave differently.
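Neuron coverage, as described above, is the fraction of neurons that exceed the threshold on at least one test input. Here is a minimal sketch, assuming the activations have already been recorded as plain lists of floats (one list per input, one entry per neuron). The function name and the sample numbers are mine, and any per-layer scaling of activations is left out.

```python
def neuron_coverage(activations_per_input, threshold=0.0):
    """Fraction of neurons activated above `threshold` by at least one input.

    activations_per_input: list of equal-length lists; entry [t][i] is the
    recorded output of neuron i on test input t.
    """
    num_neurons = len(activations_per_input[0])
    covered = set()
    for activations in activations_per_input:
        for i, value in enumerate(activations):
            if value > threshold:
                covered.add(i)
    return len(covered) / num_neurons

# Two test inputs, four neurons. Neurons 0 and 2 exceed 0.5 at least once.
acts = [
    [0.9, 0.0, 0.1, 0.0],
    [0.0, 0.0, 0.7, 0.0],
]
coverage = neuron_coverage(acts, threshold=0.5)
print(coverage)
```

Neurons 1 and 3 never fire here, so a coverage-guided generator would try to find inputs that activate them next.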

Figure 6 shows how "gradient ascent" can be employed in this joint optimization problem. The search walks uphill to maximize the objective, so it is gradient ascent rather than gradient descent. Starting from a seed input, DeepXplore follows the gradient in the input space of two similar DNNs that are supposed to handle the same task, until it uncovers test inputs that lie between the decision boundaries of the two DNNs. Such test inputs will be classified differently by the two DNNs.
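Here is a toy version of that search, to show the mechanics only. It assumes two tiny logistic "models" with hand-picked weights instead of real DNNs, uses only the differential term (no coverage term), and computes the gradient analytically. All names and numbers are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def prob(model, x):
    """Probability of class 1 under a logistic model (weights, bias)."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def label(model, x):
    return 1 if prob(model, x) > 0.5 else 0

def find_difference(seed, m1, m2, lam=1.0, step=0.5, max_iters=100):
    """Gradient ascent on obj(x) = p1(x) - lam * p2(x), starting from seed.

    Stops as soon as the two models assign different labels to x.
    Returns the difference-inducing input, or None if none was found.
    """
    x = list(seed)
    for _ in range(max_iters):
        if label(m1, x) != label(m2, x):
            return x
        p1, p2 = prob(m1, x), prob(m2, x)
        # d/dx sigmoid(w.x + b) = p * (1 - p) * w
        grad = [p1 * (1 - p1) * a - lam * p2 * (1 - p2) * c
                for a, c in zip(m1[0], m2[0])]
        x = [xi + step * g for xi, g in zip(x, grad)]
    return x if label(m1, x) != label(m2, x) else None

# Two models that agree on the seed (both predict class 1).
m1 = ([1.0, 1.0], 0.0)
m2 = ([1.0, -0.5], 0.0)
seed = [1.0, 0.5]
x_adv = find_difference(seed, m1, m2)
print(x_adv, label(m1, x_adv), label(m2, x_adv))
```

The ascent keeps the first model confident in class 1 while dragging the second model across its decision boundary, which is the two-DNN picture described above in miniature.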

The team implemented DeepXplore using the TensorFlow 1.0.1 and Keras 2.0.3 DL frameworks. They used TensorFlow's implementation of gradient computations in the joint optimization process. TensorFlow also supports creating sub-DNNs by marking any arbitrary neuron's output as the sub-DNN's output while keeping the input the same as the original DNN's input. They used this feature to intercept and record the output of neurons in the intermediate layers of a DNN and compute the corresponding gradients with respect to the DNN's input. All the experiments were run on a Linux laptop with 16GB RAM. I guess since this is inference rather than training, a laptop sufficed for the experiments.

One possible criticism of the paper is this. Yes, DeepXplore catches a bad classification on an image, and that is good and useful. But the self-driving application probably already has built-in tolerance to occasional misclassifications. For example, temporal continuity can help: if the previous and next images correctly classify the road, an interim misclassification would not be very bad. Moreover, application-specific invariants can also act as a safety net, e.g., do not steer very sharply, and use a Kalman filter. It would be interesting to also do evaluations in an end-to-end application setting.

UPDATE (6/17/2018): I have received clarification from Yinzhi Cao, one of the authors, about these points. Here are his comments:

First, our light effect (or other changes) can be added constantly over the time domain, and thus DeepXplore should be able to fool the decision engine all the time. That is, the previous images and next images will also lead to incorrect decisions.

Second, DeepXplore can ask the decision engine to gradually switch the steering so that a Kalman filter may not help. For example, the switching from left to right or vice versa is not that sudden so that a Kalman filter cannot rule out the decision.

