Modeling a Replicated Storage System in TLA+, Project 2

Two weeks ago, I had described phase 1 of the TLA+ project I assigned in my distributed systems class. I promised to give you the solution soon. I put the solution for phase1 on github.

In this post, I will describe the phase 2 of the project. In phase 2 of the project, we use Chain Replication to ensure persistence and consistency of the objects stored. Read my chain replication summary to refresh on the algorithm.

Before performing the write, the client reads from the tail of chain to learn the highest versioned write completed. The client then increments the version number, and performs the write to the head of chain with this version number. Since a node failure may lead to a request being lost (be it a read request, or update request), the client needs to repeat the request until a response is returned from the tail. The client cannot start a new request before it receives a reply to its earlier request.

A configurator process (an infallible process which would in practice be implemented as a Paxos cluster) will be used for configuring the chain. One responsibility of the configurator is to remove a down node from the chain, and the other is to add a new node to the tail of the chain if the length of the chain is less than 3. The chain is initially empty, and the configurator populates the chain using the "add a new node to the tail" action.

A storage node can crush (provided that FAILNUM is not exceeded), and recover at any time. For simplicity we assume the client writes to only one item, so we omit the key part of the key-value pair item, and model the database db at each node to consist of one item. The newer version of the item will writeover the old version.

In this phase of the project, the storage system performs server-side routing, and modeling of the system is done using message passing instead of shared memory. Once a storage node receives a new request in its message-box msg, it will consult the configurator to figure out its successor in the chain and propagate the request to its successor. If the storage node is the tail it will send a reply to the client. An update request modifies the db with the newer version of the item. A read request does not change the db.

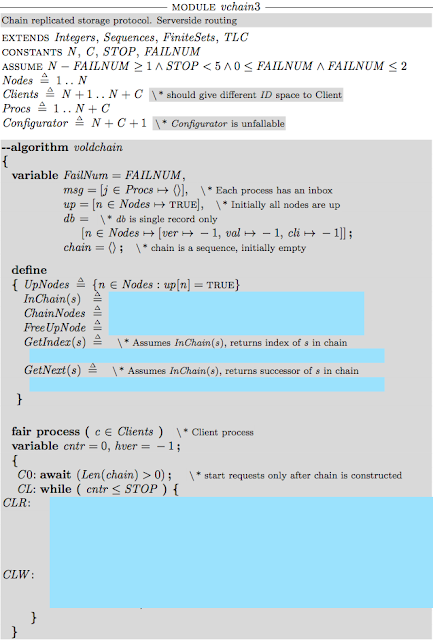

The students are asked to write a PlusCal program to model this algorithm. I provide them the below template as a starting point, and they fill in the redacted parts, use the toolkit to translate their code to TLA+, write invariant properties and model-check for correctness. I also ask them to list their observations about phase2 "Voldchain" versus phase1 "Voldemort quorums" version of the protocol. How do they compare? Is Voldchain capable of tolerating more failures with less number of nodes? How do you explain the difference? What is the analog of write quorum, and read quorum in Voldchain?

I will wait a week or so before I share my solution for the Project2.

My experience with using TLA+ in distributed systems class (Jan 2015)

Modeling the hygienic dining philosophers algorithm in TLA+

There is a vibrant Google Groups forum for TLA+ : https://groups.google.com/

Clicking on label "tla" at the end of the post you can reach all my posts about TLA+

In this post, I will describe the phase 2 of the project. In phase 2 of the project, we use Chain Replication to ensure persistence and consistency of the objects stored. Read my chain replication summary to refresh on the algorithm.

Before performing the write, the client reads from the tail of chain to learn the highest versioned write completed. The client then increments the version number, and performs the write to the head of chain with this version number. Since a node failure may lead to a request being lost (be it a read request, or update request), the client needs to repeat the request until a response is returned from the tail. The client cannot start a new request before it receives a reply to its earlier request.

A configurator process (an infallible process which would in practice be implemented as a Paxos cluster) will be used for configuring the chain. One responsibility of the configurator is to remove a down node from the chain, and the other is to add a new node to the tail of the chain if the length of the chain is less than 3. The chain is initially empty, and the configurator populates the chain using the "add a new node to the tail" action.

A storage node can crush (provided that FAILNUM is not exceeded), and recover at any time. For simplicity we assume the client writes to only one item, so we omit the key part of the key-value pair item, and model the database db at each node to consist of one item. The newer version of the item will writeover the old version.

In this phase of the project, the storage system performs server-side routing, and modeling of the system is done using message passing instead of shared memory. Once a storage node receives a new request in its message-box msg, it will consult the configurator to figure out its successor in the chain and propagate the request to its successor. If the storage node is the tail it will send a reply to the client. An update request modifies the db with the newer version of the item. A read request does not change the db.

The students are asked to write a PlusCal program to model this algorithm. I provide them the below template as a starting point, and they fill in the redacted parts, use the toolkit to translate their code to TLA+, write invariant properties and model-check for correctness. I also ask them to list their observations about phase2 "Voldchain" versus phase1 "Voldemort quorums" version of the protocol. How do they compare? Is Voldchain capable of tolerating more failures with less number of nodes? How do you explain the difference? What is the analog of write quorum, and read quorum in Voldchain?

Links

Using TLA+ for teaching distributed systems (Aug 2014)My experience with using TLA+ in distributed systems class (Jan 2015)

Modeling the hygienic dining philosophers algorithm in TLA+

There is a vibrant Google Groups forum for TLA+ : https://groups.google.com/

Clicking on label "tla" at the end of the post you can reach all my posts about TLA+

Comments