TensorFlow: A system for large-scale machine learning

This paper was uploaded to arXiv.org on May 27, 2016, so it is the most recent description of Google TensorFlow. (Five months ago, I commented on an earlier TensorFlow whitepaper, if you want to check that first.) Below I summarize the main points of the paper, using several sentences and paragraphs from it with some paraphrasing. I end the post with my wild speculations about TensorFlow. (This speculation thing is getting strangely addictive for me.)

TensorFlow is built leveraging Google's experience with their first generation distributed machine learning system, DistBelief. The core idea of this paper is that TensorFlow's dataflow representation subsumes existing work on parameter server systems (including DistBelief), and offers a uniform programming model that allows users to harness large-scale heterogeneous systems, both for production tasks and for experimenting with new approaches.

TensorFlow versus Parameter Server systems

DistBelief was based on the parameter server architecture, and satisfied most of Google's scalable machine learning requirements. However, the paper argues that this architecture lacked extensibility, because adding a new optimization algorithm, or experimenting with an unconventional model architecture, would require users to modify the parameter server implementation. Not all users are comfortable making those changes, given the complexity of the high-performance parameter server implementation. In contrast, TensorFlow provides a high-level uniform programming model that allows users to customize the code that runs in all parts of the system, and to experiment with different optimization algorithms, consistency schemes, and parallelization strategies in userspace/unprivileged code.

TensorFlow is based on the dataflow architecture. Dataflow with mutable state enables TensorFlow to mimic the functionality of a parameter server, and even provide additional flexibility. Using TensorFlow, it becomes possible to execute arbitrary dataflow subgraphs on the machines that host the shared model parameters. We say more on this when we discuss the TensorFlow model and the structure of a typical training application below.

TensorFlow versus dataflow systems

The principal limitation of batch dataflow systems (including Spark) is that they require the input data to be immutable and all of the subcomputations to be deterministic, so that the system can re-execute subcomputations when machines in the cluster fail. This unfortunately makes updating a machine learning model a heavy operation. TensorFlow improves on this by supporting expressive control-flow and stateful constructs.

Naiad is designed for computing on sparse, discrete data, and does not support GPU acceleration. TensorFlow borrows aspects of timely dataflow iteration from Naiad in achieving dynamic control flow.

TensorFlow's programming model is close to Theano's dataflow representation, but Theano is for a single node and does not support distributed execution.

TensorFlow model

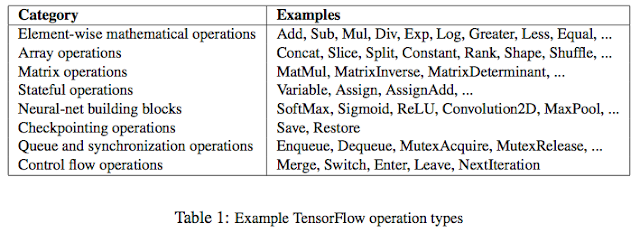

TensorFlow uses a unified dataflow graph to represent both the computation in an algorithm and the state on which the algorithm operates. Unlike traditional dataflow systems, in which graph vertices represent functional computation on immutable data, TensorFlow allows vertices to represent computations that own or update mutable state. By unifying the computation and state management in a single programming model, TensorFlow allows programmers to experiment with different parallelization schemes. For example, it is possible to offload computation onto the servers that hold the shared state to reduce the amount of network traffic.

In sum, TensorFlow innovates on these two aspects:

- Individual vertices may have mutable state that can be shared between different executions of the graph.

- The model supports multiple concurrent executions on overlapping subgraphs of the overall graph.
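To make these two aspects concrete, here is a minimal pure-Python sketch. It is not the TensorFlow API: the `Variable` class, `assign_add`, and `training_step` are hypothetical names that only mimic the idea of a stateful vertex shared by several concurrent executions of the same small subgraph.

```python
import threading


class Variable:
    """Toy stand-in for a stateful vertex that owns a mutable buffer."""

    def __init__(self, value):
        self._value = value
        self._lock = threading.Lock()

    def read(self):
        with self._lock:
            return self._value

    def assign_add(self, delta):
        # Update the buffer in place; the state outlives any single step.
        with self._lock:
            self._value += delta
            return self._value


def training_step(weight, gradient, learning_rate=0.1):
    # One execution ("step") of a tiny subgraph: compute an update, apply it.
    weight.assign_add(-learning_rate * gradient)


# Four concurrent steps run over the same graph and share one Variable.
w = Variable(1.0)
threads = [
    threading.Thread(target=training_step, args=(w, g))
    for g in (1.0, 2.0, 3.0, 4.0)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

final_weight = w.read()  # close to 0.0, whatever order the steps ran in
```

The lock here is an assumption of the sketch; it only keeps the toy update atomic and says nothing about which consistency scheme a real training job would choose.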

Figure 1 shows a typical training application, with multiple subgraphs that execute concurrently, and interact through shared variables and queues. Shared variables and queues are stateful operations that contain mutable state. (A Variable operation owns a mutable buffer that is used to store the shared parameters of a model as it is trained. A Variable has no inputs, and produces a reference handle.)

This figure provides a concrete explanation of how TensorFlow works. The core training subgraph depends on a set of model parameters, and input batches from a queue. Many concurrent steps of the training subgraph update the model based on different input batches, to implement data-parallel training. To fill the input queue, concurrent preprocessing steps transform individual input records (e.g., decoding images and applying random distortions), and a separate I/O subgraph reads records from a distributed file system. A checkpointing subgraph runs periodically for fault tolerance.
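The structure described above can be imitated with plain Python threads and queues. This is only a rough sketch under simplifying assumptions: the function names, the integer "records", the single preprocessing thread, and the one-shot checkpoint at the end are all made up for illustration and are not how TensorFlow implements these subgraphs.

```python
import queue
import threading

NUM_TRAINERS = 2
DONE = object()                      # marker that signals the end of the input

records = list(range(8))             # stand-in for records in a distributed file system
raw_q = queue.Queue()                # I/O subgraph -> preprocessing
batch_q = queue.Queue(maxsize=4)     # bounded input queue feeding the training steps

model = {"sum": 0, "steps": 0}       # stand-in for the shared model parameters
model_lock = threading.Lock()


def io_subgraph():
    for r in records:
        raw_q.put(r)
    raw_q.put(DONE)


def preprocess_subgraph():
    while True:
        r = raw_q.get()
        if r is DONE:
            for _ in range(NUM_TRAINERS):
                batch_q.put(DONE)    # one end marker per training thread
            return
        batch_q.put(r * 2)           # stand-in for decoding and distorting


def training_subgraph():
    while True:
        x = batch_q.get()
        if x is DONE:
            return
        with model_lock:             # concurrent steps update shared state
            model["sum"] += x
            model["steps"] += 1


workers = [threading.Thread(target=io_subgraph),
           threading.Thread(target=preprocess_subgraph)]
workers += [threading.Thread(target=training_subgraph) for _ in range(NUM_TRAINERS)]
for t in workers:
    t.start()
for t in workers:
    t.join()

checkpoint = dict(model)             # one snapshot, standing in for periodic checkpoints
```

The bounded `batch_q` is the interesting part of the design: it lets the input side run ahead of training by a few batches without letting it run ahead indefinitely.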

The API for executing a graph allows the client to specify the subgraph that should be executed. A subgraph is specified declaratively: the client selects zero or more edges to feed input tensors into the dataflow, and one or more edges to fetch output tensors from the dataflow; the run-time then prunes the graph to contain the necessary set of operations. Each invocation of the API is called a step, and TensorFlow supports multiple concurrent steps on the same graph, where stateful operations enable coordination between the steps. TensorFlow is optimized for executing large subgraphs repeatedly with low latency. Once the graph for a step has been pruned, placed, and partitioned, its subgraphs are cached in their respective devices.
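The feed/fetch pruning described above can be sketched as a backward traversal from the fetched edges that stops at the fed ones. This is an illustrative toy, not TensorFlow's implementation: the graph is a plain dict from operation name to its inputs, and `prune`, `pruned_subgraph`, and the operation names are hypothetical.

```python
def prune(graph, feeds, fetches):
    """Return the set of operations needed to compute `fetches` given `feeds`."""
    needed = set()
    stack = list(fetches)
    while stack:
        op = stack.pop()
        if op in needed or op in feeds:
            continue                 # a fed value replaces the op and everything upstream
        needed.add(op)
        stack.extend(graph[op])      # walk backwards along the input edges
    return needed


_cache = {}


def pruned_subgraph(graph, feeds, fetches):
    # Cache the pruned result so that repeated steps with the same
    # feeds and fetches skip the traversal.
    key = (frozenset(feeds), frozenset(fetches))
    if key not in _cache:
        _cache[key] = prune(graph, feeds, fetches)
    return _cache[key]


graph = {
    "read_file": [],
    "decode": ["read_file"],
    "weights": [],
    "matmul": ["decode", "weights"],
    "loss": ["matmul"],
    "checkpoint": ["weights"],
}

# Feeding "decode" directly means "read_file" is never executed, and
# "checkpoint" is dropped because nothing fetched depends on it.
step_ops = pruned_subgraph(graph, feeds={"decode"}, fetches=["loss"])
```

Here `step_ops` contains only `loss`, `matmul`, and `weights`; a second call with the same feeds and fetches returns the cached set.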

Distributed execution

TensorFlow's dataflow architecture simplifies distributed execution, because it makes communication between subcomputations explicit. Each operation resides on a particular device, such as a CPU or GPU in a particular task. A device is responsible for executing a kernel for each operation assigned to it. The TensorFlow runtime places operations on devices, subject to implicit or explicit device constraints in the graph. The user may specify partial device preferences such as “any device in a particular task”, or “a GPU in any Input task”, and the runtime will respect these constraints.

TensorFlow partitions the operations into per-device subgraphs. A per-device subgraph for device d contains all of the operations that were assigned to d, with additional Send and Recv operations that replace edges across device boundaries. Send transmits its single input to a specified device as soon as the tensor is available, using a rendezvous key to name the value. Recv has a single output, and blocks until the value for a specified rendezvous key is available locally, before producing that value. Send and Recv have specialized implementations for several device-type pairs. TensorFlow supports multiple protocols, including gRPC over TCP, and RDMA over Converged Ethernet.
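A small sketch may help picture the partitioning step. It assumes the placement has already been decided and that the graph dict is listed in topological order; the `partition` function, the device names, and the rendezvous key format are all invented for illustration and do not reflect TensorFlow's actual internals.

```python
def partition(graph, placement):
    """Split `graph` (op -> inputs) into per-device lists of (name, inputs),
    replacing every cross-device edge with a Send/Recv pair."""
    subgraphs = {device: [] for device in placement.values()}
    already_sent = set()
    for op, inputs in graph.items():
        dst = placement[op]
        local_inputs = []
        for src_op in inputs:
            src = placement[src_op]
            if src == dst:
                local_inputs.append(src_op)
                continue
            key = f"{src_op}@{src}->{dst}"       # hypothetical rendezvous key
            if key not in already_sent:          # send each tensor once per device pair
                already_sent.add(key)
                subgraphs[src].append((f"Send[{key}]", [src_op]))
                subgraphs[dst].append((f"Recv[{key}]", []))
            local_inputs.append(f"Recv[{key}]")
        subgraphs[dst].append((op, local_inputs))
    return subgraphs


graph = {
    "weights": [],
    "input": [],
    "matmul": ["input", "weights"],
    "loss": ["matmul"],
}
placement = {
    "weights": "ps/cpu:0",
    "input": "worker/cpu:0",
    "matmul": "worker/gpu:0",
    "loss": "worker/gpu:0",
}

parts = partition(graph, placement)
gpu_ops = [name for name, _ in parts["worker/gpu:0"]]
```

In this example the GPU subgraph ends up with two Recv operations (one for `input`, one for `weights`) ahead of `matmul` and `loss`, while the edge from `matmul` to `loss` stays local because both operations sit on the same device.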

TensorFlow is implemented as an extensible, cross-platform library. Figure 5 illustrates the system architecture: a thin C API separates user-level code in various languages from the core library written in C++.

Current development on TensorFlow

On May 18th, it was revealed that Google built the Tensor Processing Unit (TPU) specifically for machine learning. The paper mentions that TPUs achieve an order of magnitude improvement in performance-per-watt compared to alternative state-of-the-art technology.

The paper mentions ongoing work on automatic optimization to determine default policies for performance improvement that work well for most users. While power users can get their way by taking advantage of TensorFlow's flexibility, this automatic optimization feature would make TensorFlow more user-friendly, and can help TensorFlow be adopted more widely (which looks like what Google is pushing for). The paper also mentions that, on the system level, the Google Brain team is actively developing algorithms for automatic placement, kernel fusion, memory management, and scheduling.

My wild speculations about TensorFlow

Especially with the addition of mutable state and coordination via queues, TensorFlow is equipped for providing incremental, on-the-fly machine learning. Machine learning applications built with TensorFlow can be long-running applications that keep making progress as new inputs arrive, and can adapt to new conditions/trends on the fly. Instead of one-shot huge batch machine learning, such an incremental but continuous machine learning system has obvious advantages in today's fast-paced environment. This is definitely good for Google's core search and information indexing business. I also speculate this is important for Android phones and self-driving cars.

Previously I had speculated that with the ease of partitioning of the dataflow graph and its heterogeneous device support, TensorFlow can span over and bridge smartphone and cloud backend machine learning. I still stand by that prediction.

TensorFlow enables cloud backend support for the private/device-level machine learning going on in your smartphone. It doesn't make sense for an entire power-hungry TensorFlow program to run on your wimpy smartphone. Your smartphone will be running only certain TensorFlow nodes and operations; the rest of the TensorFlow graph will be running on the Google cloud backend. Such a setup is also great for preserving the privacy of your phone while still enabling machine-learned insights on your Android.

Since TensorFlow supports inference as well as training, it can use 100s of servers for fast training, and run trained models for inference in smartphones concurrently. Android voice assistant (or Google Now) is a good application for this. In any case, it is a good time to be working on smartphone machine learning.

This is a wilder speculation, but a long-running self-improving machine learning backend in the datacenter can also provide great support for self-driving cars. Every minute, new data and decisions from self-driving cars would flow from TensorFlow subgraphs running on the cars to the cloud backend TensorFlow program. Using this constant flux of data, the program can adapt to changing road conditions (snowy roads, poor visibility conditions) and new scenarios on the fly, and all self-driving cars would benefit from the new improvements to the models.

Though the paper mentions that reinforcement style learning is future work, for all we know Google might already have reinforcement learning implemented on TensorFlow. It also looks like the TensorFlow model is general enough to tackle some other distributed systems data processing applications, for example large-scale distributed monitoring at the datacenters. I wonder if there are already TensorFlow implementations for such distributed systems services.

In 2011, Steve Yegge ranted about the lack of platforms thinking in Google. It seems like Google is doing well in that department lately. TensorFlow constitutes an extensible and flexible distributed machine learning platform that can be leveraged in several directions.
