All file systems are not created equal: On the complexity of crafting crash-consistent applications

This paper appeared in OSDI'14 and is authored by Thanumalayan Sankaranarayana Pillai, Vijay Chidambaram, Ramnatthan Alagappan, Samer Al-Kiswany, Andrea C. Arpaci-Dusseau, and Remzi H. Arpaci-Dusseau at University of Wisconsin–Madison.

A previous OSDI'14 paper we discussed had said almost every failure is due to bad exception/error-handling. But this paper shows that even when you divine the correct error-handling/recovery code, it may still not work. The layering abstraction leaks, and the filesystem underneath may do funny things in a crash.

The paper considers an important and timely problem, because many important applications, including databases such as SQLite and key-value stores such as LevelDB, are currently implemented on top of file systems instead of directly on raw disks. Such data-management applications must be crash consistent, but achieving this goal atop modern file systems is challenging because the exact guarantees provided by file systems are unclear and underspecified.

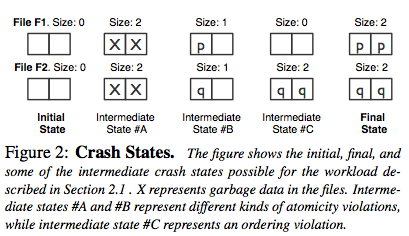

The paper defines persistence (a better term would be consistent-persistence) as a combination of two properties: atomicity and ordering (external linearizability). Figure 2 gives an example of how persistence can be violated by a crash.

From Table 1, we observe that persistence properties vary widely among file systems, and even among different configurations of the same file system. The order of persistence of system calls depends upon small details like whether the calls are to the same file or whether the file was renamed. The datajournal configuration of the filesystems are pretty solid, but they incur an overhead in terms of performance as well.

In order to analyze application-level protocols and detect crash vulnerabilities, the authors build ALICE framework. (ALICE is available as opensource here.) ALICE detects 60 vulnerabilities in total for the 11 applications analyzed, with 5 resulting in silent failures, 12 in loss of durability, 25 leading to inaccessible applications, and 17 returning errors while accessing certain data. ALICE is also able to detect previously known vulnerabilities.

The paper is easy to read and follow. And the conference presentation does a good job of explaining the paper in an accessible manner.

But if we use a journaling approach (e.g., an append-only log approach) to writing the critical recovery states, this problem can be avoided. I guess a write-once style storage for critical state can be implemented even at the application-level. But again we pay a cost for fault-tolerance. If you take this to an extreme (to be able to recover everything), you implement the datajournal configuration of the filesystem at the application level.

This paper provides some motivation for the self-stabilization approach. If it is hard to enforce consistency, then always be converging to the consistent states. That is what the stabilization approach prescribes.

A previous OSDI'14 paper we discussed had said almost every failure is due to bad exception/error-handling. But this paper shows that even when you divine the correct error-handling/recovery code, it may still not work. The layering abstraction leaks, and the filesystem underneath may do funny things in a crash.

The paper considers an important and timely problem, because many important applications, including databases such as SQLite and key-value stores such as LevelDB, are currently implemented on top of file systems instead of directly on raw disks. Such data-management applications must be crash consistent, but achieving this goal atop modern file systems is challenging because the exact guarantees provided by file systems are unclear and underspecified.

The paper defines persistence (a better term would be consistent-persistence) as a combination of two properties: atomicity and ordering (external linearizability). Figure 2 gives an example of how persistence can be violated by a crash.

From Table 1, we observe that persistence properties vary widely among file systems, and even among different configurations of the same file system. The order of persistence of system calls depends upon small details like whether the calls are to the same file or whether the file was renamed. The datajournal configuration of the filesystems are pretty solid, but they incur an overhead in terms of performance as well.

In order to analyze application-level protocols and detect crash vulnerabilities, the authors build ALICE framework. (ALICE is available as opensource here.) ALICE detects 60 vulnerabilities in total for the 11 applications analyzed, with 5 resulting in silent failures, 12 in loss of durability, 25 leading to inaccessible applications, and 17 returning errors while accessing certain data. ALICE is also able to detect previously known vulnerabilities.

The paper is easy to read and follow. And the conference presentation does a good job of explaining the paper in an accessible manner.

Discussion

Is this paper being too alarmist? If we allow our system to recover to an earlier state instead of the most recent state at crash time, would that enable us to circumvent these crash-consistency problems? (Let's say we define "earlier state" as occuring in the past enough to be successfully flashed to the filesystem state.) Even that approach may fail if the most recent state at the moment of crash overwrites it inconsistently, which would corrupt it. So there is a reason to be alarmed!But if we use a journaling approach (e.g., an append-only log approach) to writing the critical recovery states, this problem can be avoided. I guess a write-once style storage for critical state can be implemented even at the application-level. But again we pay a cost for fault-tolerance. If you take this to an extreme (to be able to recover everything), you implement the datajournal configuration of the filesystem at the application level.

This paper provides some motivation for the self-stabilization approach. If it is hard to enforce consistency, then always be converging to the consistent states. That is what the stabilization approach prescribes.

Comments