Facebook's Mystery Machine: End-to-end Performance Analysis of Large-scale Internet Services

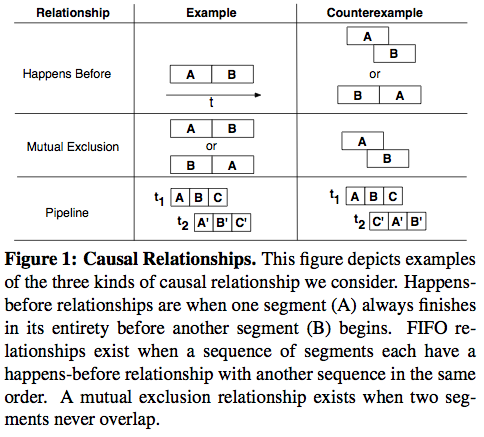

This paper appeared in OSDI'14 and is authored by Michael Chow, University of Michigan; David Meisner, Facebook, Inc.; Jason Flinn, University of Michigan; Daniel Peek, Facebook, Inc.; and Thomas F. Wenisch, University of Michigan. The goal of this paper is very similar to that of Google Dapper (you can read my summary of Google Dapper here). Both works try to identify performance bottlenecks in high-fanout, large-scale Internet services. Both use similar methods; however, this work (the mystery machine) tries to accomplish the task while relying on less instrumentation than Google Dapper. The novelty of the mystery machine is that it tries to infer the component call graph implicitly by mining the logs, whereas Google Dapper meticulously instrumented each call and explicitly obtained the entire call graph. The motivation for this approach is that comprehensive instrumentation as in Google Dapper requires standardization....and I am quoting the rest from the pa...