My experience with using TLA+ in distributed systems class

I used TLA+ in my distributed system class in Fall 2014. (To learn the backstory on this, read my pre-semester TLA+ post.)

In short, I loved the experience and I am hooked. Integrating TLA+ to the class gave students a way to get a hands-on experience in algorithms design and correctness verification. Even for a sophisticated algorithm, such as Paxos, I can refer the students to TLA+ to practice and play with the algorithm, so they can internalize what is going on. Throughout the semester as I had more chance to work with TLA+, I started growing a library of TLA+ modeling of distributed algorithms. Next time I teach the class, I hope to provide the students with a TLA+ model of each algorithm I cover in the class.

The student feedback was also positive. The students liked TLA+ since it gave them a way to experiment and supported them in reasoning about the algorithms. Several students complained of the learning curve. They wanted me to cover TLA+ in more detail in the class. (The TLA+ manual can be very dense for the students.) Next semester I plan to allocate more time getting the students acquainted with TLA+. (Next time around, I will also work on devising automated ways to grade the TLA+ projects. My TA had do manual testing of the projects, not a pleasant task for a class of 60 students.)

The aim of the first mini-project was to get the students started with TLA+. I asked the students to model a bean can problem (white and black beans with rules to remove beans form the can). This was simple and there was only one process. But the students got to learn how they can check safety and liveness conditions on their program.

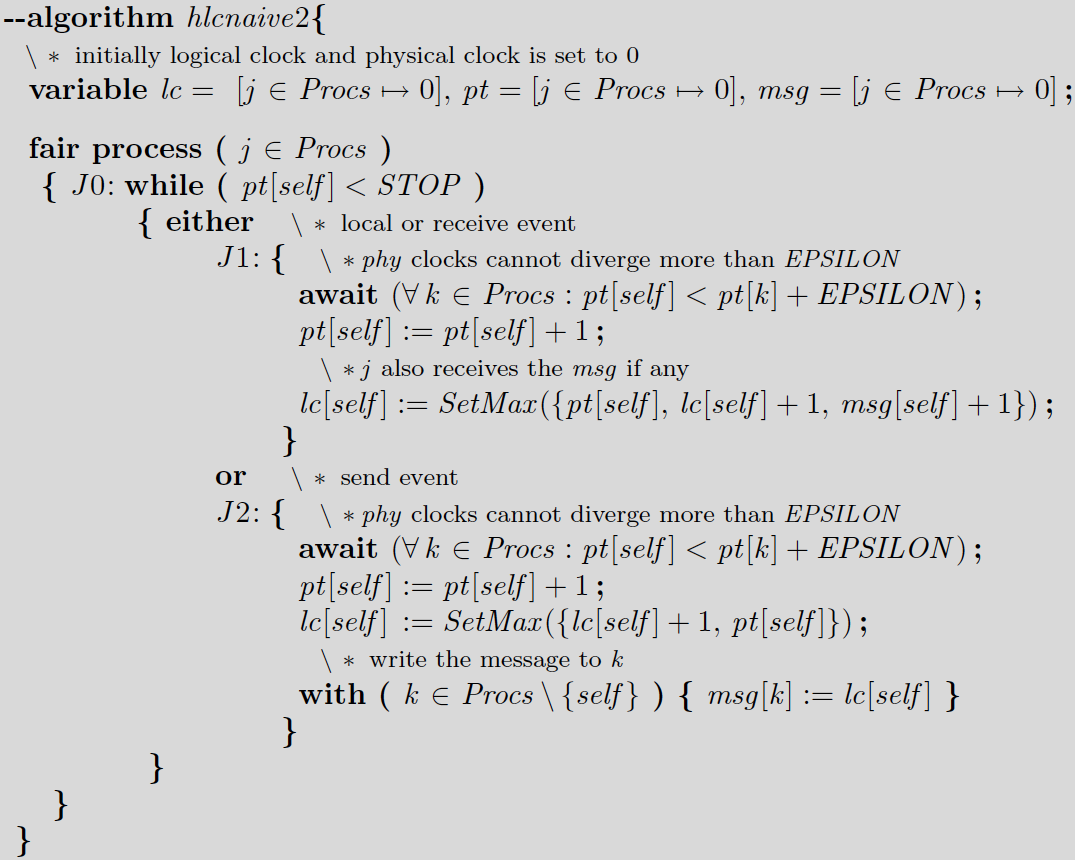

The second mini-project required them to deal with multiple processes. I asked them to model the logical physical clocks we had introduced in our research. Using TLA+ model checker the students found the counterexample in the naive algorithm, where the logical clock gradually but unboundedly gets ahead of the physical clock. This counterexample is hard to find and involves 30 steps in the algorithm. So this was an example were TLA+ proved its value. (Try to figure out this counterexample with unit tests, I dare you.)

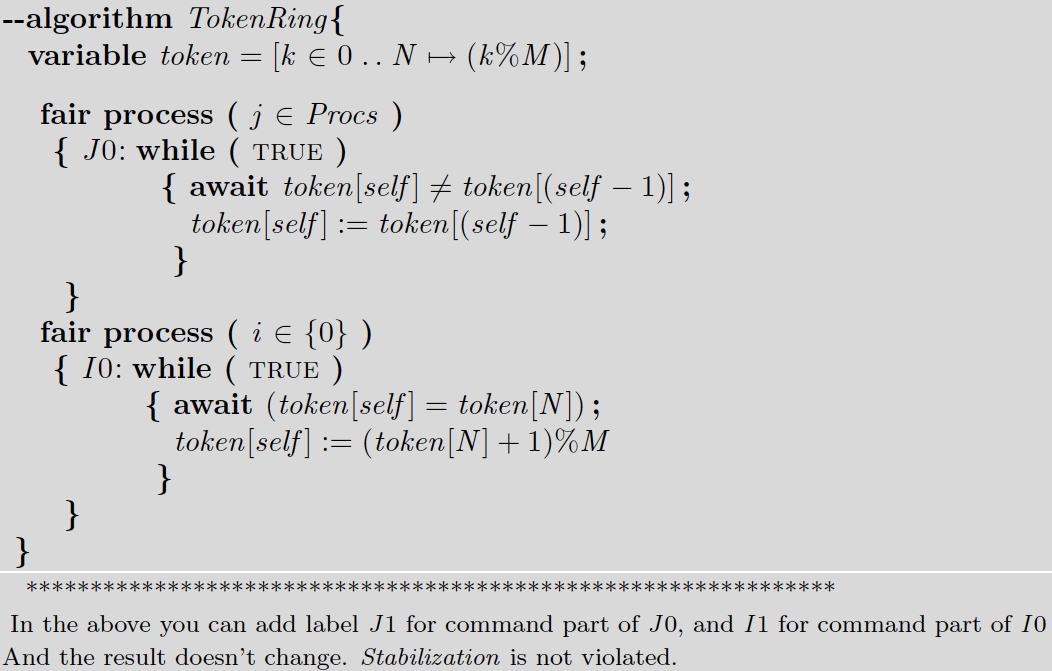

The final mini-project gave them more practice on modeling distributed algorithms. I asked them to model Dijkstra's classical stabilizing token ring algorithm as well as Dijkstra's lesser known 4-state stabilizing token ring algorithm. Students learned about self-stabilization and how it deals with arbitrary memory corruption.

Learning to model algorithms using TLA+ takes time. The language is powerful and very general; it is Math after all. You need to study many examples to learn about the RIGHT ways of doing things. I experienced this as a novice first hand. I had written a PlusCal code for Paxos within a couple days. My code worked, but it used procedures, and it was very procedural/operational rather than declarative--the way Math should be. And so my code was very slow to model check. Model checking with 2 acceptors worked, but I gave up on model checking with 3 acceptors since it took more than an hour. Then I found Lamport's PlusCal code about Byzantine agreement. I rewrote my Paxos implementation, imitating his style. I used macros, instead of procedures. My code become more declarative than operational. And, for message passing, I used Lamport's trick of using record typed messages which are written just once to a shared message board; it was the acceptors responsibility to react to them (by just reading, not consuming them). My new refactored code translated to ~150 LOC TLA+, whereas my first version had translated to ~800 LOC TLA. And the new version model checked in a minute for 3 acceptors, leading to a couple of magnitudes of order improvement in efficiency.

While PlusCal is nice, the crutch of using labels in PlusCal algorithms (for enabling TLA+ translation) is a bit ugly. The labels in PlusCal are not just aesthetic. They determine the granularity of atomic execution of blocks of code, so it is critical to place the labels right. If you fail to do this right, you will run into deadlocks due to co-dependent await conditions in different blocks of code. Inserting a label reduces the granularity of block of code from atomic to break at the label. Labels were a little unintuitive for me. But after some practice, I was able to get a hang of them.

Another ugly part is the lack of data structure support for passing messages and parsing them. Using tuples without field names is flexible, but it becomes intractable. Using records help somewhat, but manipulating field names in records also gets complicated quickly. TLA+ doesn't have static/strict typing, and I got stuck with runtime errors several times. In one case, I realized I had to find a way to flatten out a set inside of another set; after a lot of head banging, I found about the UNION operator, which solved the problem.

Good engineers use good tools. And TLA+ is a great tool for modeling distributed algorithms. Modeling an algorithm with TLA+ is really rewarding as it makes you "grok" the algorithm.

My previous post has links to useful documentation for TLA+/PlusCal

Clicking on label "tla" at the end of the post you can reach all my posts about TLA+

In short, I loved the experience and I am hooked. Integrating TLA+ to the class gave students a way to get a hands-on experience in algorithms design and correctness verification. Even for a sophisticated algorithm, such as Paxos, I can refer the students to TLA+ to practice and play with the algorithm, so they can internalize what is going on. Throughout the semester as I had more chance to work with TLA+, I started growing a library of TLA+ modeling of distributed algorithms. Next time I teach the class, I hope to provide the students with a TLA+ model of each algorithm I cover in the class.

The student feedback was also positive. The students liked TLA+ since it gave them a way to experiment and supported them in reasoning about the algorithms. Several students complained of the learning curve. They wanted me to cover TLA+ in more detail in the class. (The TLA+ manual can be very dense for the students.) Next semester I plan to allocate more time getting the students acquainted with TLA+. (Next time around, I will also work on devising automated ways to grade the TLA+ projects. My TA had do manual testing of the projects, not a pleasant task for a class of 60 students.)

The TLA+ mini-projects I used

Throughout the semester, I assigned 3 TLA+ mini-projects with increasing complexity.The aim of the first mini-project was to get the students started with TLA+. I asked the students to model a bean can problem (white and black beans with rules to remove beans form the can). This was simple and there was only one process. But the students got to learn how they can check safety and liveness conditions on their program.

The second mini-project required them to deal with multiple processes. I asked them to model the logical physical clocks we had introduced in our research. Using TLA+ model checker the students found the counterexample in the naive algorithm, where the logical clock gradually but unboundedly gets ahead of the physical clock. This counterexample is hard to find and involves 30 steps in the algorithm. So this was an example were TLA+ proved its value. (Try to figure out this counterexample with unit tests, I dare you.)

The final mini-project gave them more practice on modeling distributed algorithms. I asked them to model Dijkstra's classical stabilizing token ring algorithm as well as Dijkstra's lesser known 4-state stabilizing token ring algorithm. Students learned about self-stabilization and how it deals with arbitrary memory corruption.

Lessons learned (the good, the bad, and the ugly)

I learned that PlusCal language is the right abstraction for modeling distributed algorithms. TLA+ is too low level for writing (and reading) distributed algorithms. PlusCal allows you to include TLA+ expressions and definitions, so you benefit from all the expressive power of TLA+ without going full TLA+. PlusCal is not a separate entity, but just a more convenient way to write TLA+ code. In the TLA+ toolkit you can write the algorithm in PlusCal and hit the "translate to TLA+" to produce the corresponding TLA+ code. When I use the term "modeling an algorithm in TLA+", I really mean writing the model in PlusCal, auto-translating it to TLA+ and model checking it.Learning to model algorithms using TLA+ takes time. The language is powerful and very general; it is Math after all. You need to study many examples to learn about the RIGHT ways of doing things. I experienced this as a novice first hand. I had written a PlusCal code for Paxos within a couple days. My code worked, but it used procedures, and it was very procedural/operational rather than declarative--the way Math should be. And so my code was very slow to model check. Model checking with 2 acceptors worked, but I gave up on model checking with 3 acceptors since it took more than an hour. Then I found Lamport's PlusCal code about Byzantine agreement. I rewrote my Paxos implementation, imitating his style. I used macros, instead of procedures. My code become more declarative than operational. And, for message passing, I used Lamport's trick of using record typed messages which are written just once to a shared message board; it was the acceptors responsibility to react to them (by just reading, not consuming them). My new refactored code translated to ~150 LOC TLA+, whereas my first version had translated to ~800 LOC TLA. And the new version model checked in a minute for 3 acceptors, leading to a couple of magnitudes of order improvement in efficiency.

While PlusCal is nice, the crutch of using labels in PlusCal algorithms (for enabling TLA+ translation) is a bit ugly. The labels in PlusCal are not just aesthetic. They determine the granularity of atomic execution of blocks of code, so it is critical to place the labels right. If you fail to do this right, you will run into deadlocks due to co-dependent await conditions in different blocks of code. Inserting a label reduces the granularity of block of code from atomic to break at the label. Labels were a little unintuitive for me. But after some practice, I was able to get a hang of them.

Another ugly part is the lack of data structure support for passing messages and parsing them. Using tuples without field names is flexible, but it becomes intractable. Using records help somewhat, but manipulating field names in records also gets complicated quickly. TLA+ doesn't have static/strict typing, and I got stuck with runtime errors several times. In one case, I realized I had to find a way to flatten out a set inside of another set; after a lot of head banging, I found about the UNION operator, which solved the problem.

Good engineers use good tools. And TLA+ is a great tool for modeling distributed algorithms. Modeling an algorithm with TLA+ is really rewarding as it makes you "grok" the algorithm.

Useful Links:

There is a vibrant Google Groups forum for TLA+ : https://groups.google.com/My previous post has links to useful documentation for TLA+/PlusCal

Clicking on label "tla" at the end of the post you can reach all my posts about TLA+

Comments

In proof.pdf, page 3 (of the version I have):

"

In TLAPS, a proof manager translates the proof into a set of separate proof obligations, checks the ones that are trivially true, and sends the others to one or more backend provers. Its default behavior is to try these three backend provers: SMT, Zenon, and Isabelle—in that order.

"