The scalable commutativity rule: Designing scalable software for multicore processors

This paper was one of the best papers at SOSP '13. The paper is open access, since SOSP paid the open-access fees for every paper that appeared in the conference.

The scalable commutativity rule says that "Whenever interface operations commute, they can be implemented in a way that scales". In other words, whenever several operations commute at the interface level (i.e., there's no way to distinguish their execution order using the interface), those operations have implementations whose memory accesses are conflict-free, meaning no core writes a cache line that another core reads or writes.
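
To make "no way to distinguish their execution order using the interface" concrete, here is a minimal Python sketch. It is a simplification, not the paper's definition: the paper's SIM commutativity is stated over histories and also has to hold for any later operations, whereas this sketch only runs every order from one starting state and compares each operation's response plus a fixed set of read-only probes. The toy interface (`add_item`, `next_id`) and all function names are hypothetical.

```python
from copy import deepcopy
from itertools import permutations

def run_in_order(ops, state):
    """Apply (name, op) pairs in order to a copy of `state`.
    Returns each operation's response and the final state."""
    s = deepcopy(state)
    responses = {}
    for name, op in ops:
        responses[name] = op(s)
    return responses, s

def commute(ops, state, probes):
    """Simplified interface-level check: the operations commute in `state` if
    every execution order gives each operation the same response and leaves a
    state that every probe (a read-only interface call) observes identically."""
    outcomes = []
    for order in permutations(ops):
        responses, final = run_in_order(order, state)
        observed = [probe(final) for probe in probes]
        outcomes.append((responses, observed))
    return all(outcome == outcomes[0] for outcome in outcomes)

# Hypothetical toy interface over the state {"items": set, "count": int}.
def add_item(x):
    def op(s):
        s["items"].add(x)  # returns None: the caller learns nothing about order
    return op

def next_id(s):
    s["count"] += 1
    return s["count"]  # the response reveals how many calls ran before it

state = {"items": set(), "count": 0}
probes = [lambda s: sorted(s["items"]), lambda s: s["count"]]
print(commute([("A", add_item(1)), ("B", add_item(2))], state, probes))  # True
print(commute([("A", next_id), ("B", next_id)], state, probes))          # False
```

The two `add_item` calls commute because nothing the interface returns depends on their order; the two `next_id` calls do not, because each caller can tell from its returned value whether it ran first.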

("Scalable commutativity" sounds like a misnomer; shouldn't the theorem be called the "commutative scalability" theorem? The end goal is scalability, and commutativity is a tool for it. Thus "commutative" should qualify "scalability", not the other way around.)

I found several nice generalizable insights in the paper.

The first one is using commutativity as a detectable/measurable and controllable witness for concurrency. Concurrency is an abstract and specification-dependent concept. If you disregard safety (which is defined by the specification), you can easily boost concurrency by parallelizing aggressively, and you end up with a highly scalable system full of race conditions and incorrect/unsafe operations. It is hard to measure "safe concurrency" and boost it. In contrast, commutativity is well defined and easier to measure and control. If the outcome doesn't change when you reorder the operations, then the order is not important: you don't need to lock anything, and you can find a lock-free/wait-free/coordination-free implementation.
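
A minimal sketch of that reasoning, assuming a counter whose `inc` returns nothing (so increments commute): each thread, standing in for a core, updates only its own slot, and no lock is taken. The class and function names are hypothetical, and Python threads only illustrate the access pattern, not real multicore scalability.

```python
import threading

class PerCoreCounter:
    """Counter whose inc() calls commute (inc returns nothing), so each core
    can update a private slot: no lock and no shared writes on the inc path."""

    def __init__(self, n_cores):
        self.slots = [0] * n_cores

    def inc(self, core):
        self.slots[core] += 1  # only this core ever writes slots[core]

    def read(self):
        # read() does not commute with inc(), and it has to visit every slot.
        return sum(self.slots)

def run(n_cores=4, incs_per_core=10_000):
    counter = PerCoreCounter(n_cores)

    def worker(core):
        for _ in range(incs_per_core):
            counter.inc(core)

    threads = [threading.Thread(target=worker, args=(c,)) for c in range(n_cores)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.read()

print(run())  # 40000
```

On real hardware the per-core slots would also need to sit on separate cache lines; otherwise the writes still conflict through false sharing. The cost also moves to `read`, which must touch every core's slot.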

The second insight is to pose the rule as a "possibility result" that guides the implementation process. The paper is clearly motivated by practical problems and built from the ground up. These guys are Linux kernel hackers; they are trying to modify Linux to run more efficiently and scalably on multicore machines. As part of this work, they toil in the trenches and develop a lot of tricks and hacks. But they didn't know when to quit and when to persist: "Before the rule, we tried to determine if these operations could scale by analyzing all of the implementations we could think of. This process was difficult, unguided, and itself did not scale to complex interfaces, which motivated our goal of reasoning about scalability in terms of interfaces." When operations commute at the specification level, the rule tells them to persist, because scalable implementations exist. When operations don't commute, the rule tells them they may want to quit, because a scalable implementation may not exist under the current specification.

Finally, this rule can guide the design as well. When a scalable implementation may not be available under the current specifications of the operations (i.e., the operations do not commute at the interface level), the developers may consider changing the operations slightly to find a design where the operations commute (in which case scalable implementations are guaranteed to exist). To make the operations commute, they may be redesigned to take different parameters or to relax the guarantees on the returned result. For example, for a file operation such as open, don't return the lowest unused file descriptor (FD); return any unused FD. Changing the specification this way removes the contention on the lowest unused FD. In a sense, this moves away from queue semantics (which are order sensitive) toward set semantics (which are order insensitive, a.k.a. commutative). This trick, relaxing an operation's specification until it commutes, is the essence of how operations can be modified to achieve conflict-free implementations.
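
The file-descriptor example can be sketched as two toy allocators in Python. Both are hypothetical simplifications (no `close`, no per-process tables): `LowestFdTable` follows the POSIX rule that `open` returns the lowest unused descriptor, and `AnyFdTable` assumes the relaxed specification and gives each core its own range of descriptors. Running one `open` per core in both orders shows that only the lowest-FD version lets callers observe the order.

```python
class LowestFdTable:
    """POSIX-style open(): return the lowest unused descriptor. Every call
    must scan the same shared set, so calls from different cores conflict."""

    def __init__(self):
        self.used = set()

    def open(self, core):
        fd = 0
        while fd in self.used:
            fd += 1
        self.used.add(fd)
        return fd

class AnyFdTable:
    """Relaxed open(): return *some* unused descriptor. Hypothetical layout in
    which each core allocates from its own disjoint range of descriptors."""

    def __init__(self, n_cores, per_core=1024):
        self.per_core = per_core
        self.next_free = [core * per_core for core in range(n_cores)]

    def open(self, core):
        fd = self.next_free[core]
        if fd >= (core + 1) * self.per_core:
            raise OSError("this core's descriptor range is exhausted")
        self.next_free[core] += 1  # touches only this core's state
        return fd

def responses(make_table, order):
    """Run one open() per core in the given order; map core -> returned fd."""
    table = make_table()
    return {core: table.open(core) for core in order}

# Lowest-FD semantics: the returned values reveal the execution order.
print(responses(LowestFdTable, [0, 1]), responses(LowestFdTable, [1, 0]))
# {0: 0, 1: 1} {1: 0, 0: 1}

# Any-FD semantics: each core gets the same descriptor in either order.
print(responses(lambda: AnyFdTable(2), [0, 1]) == responses(lambda: AnyFdTable(2), [1, 0]))
# True
```

Partitioning the descriptor space per core is just one design the relaxed specification permits; it is not necessarily how the paper's sv6 kernel allocates descriptors.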

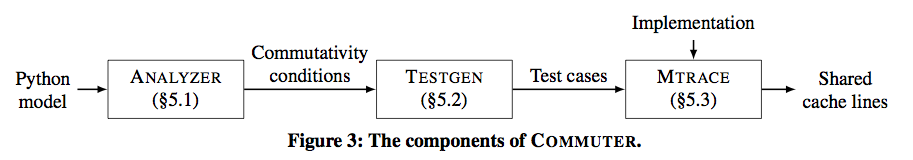

The paper is a tour de force. The authors developed a tool called COMMUTER that accepts high-level interface models and generates tests of operations that commute and hence could scale. The tool uses a combination of symbolic and concolic execution, and generates test cases for an arbitrary implementation based on a model of that implementation's interface.

"First, ANALYZER takes a symbolic model of an interface and computes precise conditions under which that interface’s operations commute. Second, TESTGEN takes these conditions and generates concrete test cases of sets of operations that commute according to the interface model, and thus should have a conflict-free implementation according to the commutativity rule. Third, MTRACE checks whether a particular implementation is conflict-free for each test case."

The paper's evaluation is also exhaustive. They modeled several POSIX file system and virtual memory calls in COMMUTER, then used the tool both to evaluate Linux's scalability and to develop a scalable file and virtual memory system for their sv6 research kernel. I loved Figure 6: it shows a lot of things without overwhelming the viewer.
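
The per-test-case check that MTRACE performs (the third stage in the quote above) can be sketched as a toy in Python, using the paper's notion of conflict-free: no core writes a location that another core reads or writes. The real MTRACE runs an actual implementation and records its memory accesses; everything here (`AccessLog`, `shared_inc`, `per_core_inc`, `check`) is hypothetical and works on named abstract locations standing in for cache lines.

```python
from collections import defaultdict

class AccessLog:
    """Toy stand-in for MTRACE's job: record which abstract memory locations
    (standing in for cache lines) each core reads and writes."""

    def __init__(self):
        self.reads = defaultdict(set)
        self.writes = defaultdict(set)

    def read(self, core, loc):
        self.reads[core].add(loc)

    def write(self, core, loc):
        self.writes[core].add(loc)

    def conflicts(self):
        """Locations written by one core and read or written by another.
        An empty result means the traced execution was conflict-free."""
        cores = set(self.reads) | set(self.writes)
        found = set()
        for core in cores:
            for other in cores - {core}:
                found |= self.writes[core] & (self.reads[other] | self.writes[other])
        return found

# Two hypothetical implementations of the same commuting operation, inc().
def shared_inc(log, core):
    log.read(core, "count")
    log.write(core, "count")

def per_core_inc(log, core):
    log.read(core, f"count[{core}]")
    log.write(core, f"count[{core}]")

def check(inc, cores=(0, 1)):
    """Trace one inc() per core, as a generated test case might, and report conflicts."""
    log = AccessLog()
    for core in cores:
        inc(log, core)
    return log.conflicts()

print(check(shared_inc))    # {'count'}
print(check(per_core_inc))  # set()
```

Both implementations satisfy the same commuting interface, but only the per-core one passes the check, which is the kind of gap between interface and implementation the tool is designed to expose.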

To show how the results in Figure 6 translate to scalability on real hardware, they ran microbenchmarks and a mail server application.

Discussion

Question: The scalable commutativity rule says: if interface operations commute, then they can be implemented in a way that scales. Is there a reason why you don't claim the inverse: if interface operations don't commute, then they cannot be implemented in a way that scales? If the inverse were true, the rule would have stronger implications for interface design.

A: The paper does not address this question. My guess is that the inverse would establish only a relative unscalability, not an absolute one, and relative unscalability is hard to quantify. The original rule, by contrast, is clean: it provides an absolute result, namely that an implementation with conflict-free memory accesses (i.e., full scalability) is possible.

Question: Is this rule applicable to general distributed systems, and not just to multicore systems?

A: The paper claims this in its related work section but doesn't elaborate. I think it should work, but under some assumptions: each operation should have distinct invocation and response parts, and the high-level specification should make clear what each operation does, so that you can perform the commutativity tests.

Question: What are the tradeoffs when coming up with variants of operations to make them commute?

A: Instead of one big operation, you may split it into two smaller operations to improve commutativity. Does this make the developers' job harder? Maybe, but it is worth it if the result is conflict-free on multicores. Also, instead of crossing the kernel boundary once, you may now cross it twice, because you have replaced one operation with two. So you pay some overhead, but that is acceptable if you have improved the commutativity and scalability of the operations.

Updates:

Martin Vechev emailed me about my first question and had this to say: "We had an earlier POPL'11 paper that shows one part of the inverse question you are asking, that if you have strong non-commutativity you need expensive instructions." http://www.srl.inf.ethz.ch/

The paper was also covered in LWN (Linux Weekly News): http://lwn.net/Articles/
