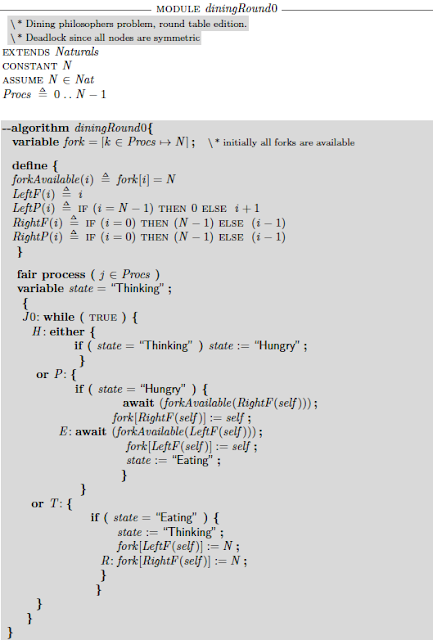

Modeling the Dining Philosophers Algorithm in TLA+

In this post I will provide a modeling of the dining philosophers algorithm in TLA+. If you have not heard about TLA+ before, take a look at my earlier posts on TLA+ to get some context. Post1 and Post2 The dining philosophers problem is an instance/refinement of the mutual exclusion problem. The specification of the mutual exclusion problem dictates that when a node is in critical section, no other node in the system can be in the critical section. The dining philosopher relaxes this by taking into account the topology: When a node is in critical section, none of the neighbors of that node can be in critical section. Note that, this does not rule out two nodes to be in critical section simultaneously provided that they are not neighbors. In the original formulation of the dining philosophers problem by Dijkstra, there are 5 philosophers sitting around a table. Between each philosopher is a fork and, in order to eat a philosopher must hold both of the forks. (The spaghetti i...