Xen and the art of virtualization

This week in the seminar class we discussed the Xen virtualization paper from SOSP 2003. Xen was the first open source virtualization solution; VMware, however, was already available in 2000 as a commercial virtualization solution.

A virtual machine (VM) is a software implementation of a machine (i.e., a computer) that executes instructions like a physical machine. The biggest benefit of virtualization is server consolidation: using server resources efficiently in order to reduce the total number of servers that an organization requires. Because virtualization separates the OS and application from the hardware, it becomes possible to run multiple applications (in complete isolation from each other) on each server instead of just one application per server. This increases server utilization and prevents "server sprawl", a situation in which multiple under-utilized servers take up more space and resources than their workload can justify. Another motivation for virtualization is flexibility. We can easily migrate virtual machines across the network, from server to server or datacenter to datacenter, to balance load and use compute capacity more efficiently. I will discuss live migration of virtual machines in the next paper review post.

It follows from this motivation that the primary goal of virtualization is to isolate the virtual machines from one another. The challenge is to achieve this isolation with as little overhead (performance penalty) as possible.

Some basic terms used in virtualization are "guestOS: the OS that Xen hosts", "domain: the virtual machine in which the guestOS runs", and "hypervisor: Xen itself, as it runs at a higher privilege level than the guestOSes it hosts".

Xen provides paravirtualization as opposed to full virtualization. VMware provided full virtualization (i.e., a complete simulation of the underlying hardware), so there was no need to modify the OS at all. To achieve this, the VMware hypervisor trapped and translated guest binary instructions to mediate access to the resources, but this approach incurs a big performance penalty. Xen avoided this penalty by using paravirtualization, at the cost of requiring modifications to the guestOS (a one-time porting effort per guestOS). Even so, Xen did not require any modifications to the applications running on the guestOS.

Before I can discuss why Xen needed to modify the guestOS and what modifications are needed, here is a brief description of the Xen architecture. There are four distinct privilege levels (described as rings) on x86: level 0 (most privileged) where the OS runs, levels 1 and 2, which are unused, and level 3 (least privileged) where the application code runs. The Xen hypervisor has to run at the most privileged level, so it runs at level 0 and bumps the OS to the previously unused level 1. The hypervisor then plays the role of mediator between the hardware and the guestOS running at the less privileged level. Running at a less privileged level prevents the guestOS from directly executing privileged instructions.

Memory management is trickier than CPU privilege management. The x86 does not have a software-managed Translation Lookaside Buffer (TLB), so TLB misses are serviced automatically by the processor, which walks the page table structure in hardware. The x86 also does not have a tagged TLB, so address space switches require a complete TLB flush. This forced Xen to take the paravirtualization route: guestOSes are made responsible for allocating and managing the hardware page tables, with minimal involvement from Xen to ensure safety and isolation. Each time a guestOS requires a new page table, it allocates and initializes a page from its own memory and registers it with Xen. Each subsequent update to the page-table memory must also be validated by Xen.

In 2008, Intel (Nehalem) and AMD (SVM) introduced tags as part of the TLB entry, along with dedicated hardware that checks the tags during lookup. A ring -1 privilege level was also added for the hypervisor to run in while the guestOS runs at ring 0. As a result, Xen no longer needs to modify the guestOS.
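To make the page-table registration and validation steps more concrete, here is a minimal toy sketch in Python. It is not Xen's real interface: the class `ToyHypervisor`, its methods, and the integer frame numbers are all hypothetical names I made up for illustration. The sketch assumes just two checks, namely that a domain may only map frames it owns and that a registered page-table frame may not be mapped writable.

```python
# Toy model of validated page-table updates.
# All names here are hypothetical; this is not Xen's actual hypercall API.

class ToyHypervisor:
    def __init__(self):
        self.frame_owner = {}   # physical frame number -> owning domain id
        self.page_tables = {}   # registered page-table frame -> {vpage: (frame, writable)}

    def allocate_frame(self, domain, frame):
        """Give a physical frame to a domain (stands in for memory reservation)."""
        if frame in self.frame_owner:
            raise ValueError("frame already allocated")
        self.frame_owner[frame] = domain

    def register_page_table(self, domain, frame):
        """The guest offers one of its own frames for use as a page table."""
        if self.frame_owner.get(frame) != domain:
            raise PermissionError("domain does not own this frame")
        self.page_tables[frame] = {}

    def update_mapping(self, domain, table_frame, vpage, target_frame, writable):
        """Validate a requested page-table update, then apply it."""
        if table_frame not in self.page_tables:
            raise PermissionError("frame is not a registered page table")
        if self.frame_owner.get(table_frame) != domain:
            raise PermissionError("page table belongs to another domain")
        if self.frame_owner.get(target_frame) != domain:
            raise PermissionError("target frame belongs to another domain")
        if writable and target_frame in self.page_tables:
            raise PermissionError("page tables may not be mapped writable")
        self.page_tables[table_frame][vpage] = (target_frame, writable)
```

In this sketch the guest still decides what its address space looks like; the hypervisor only refuses updates that would break isolation, which is the division of labor described above.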

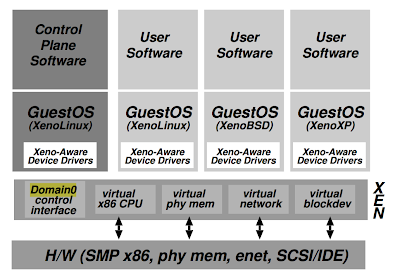

This figure shows a machine running Xen and hosting several guestOSes, including Domain0 running control software in a XenoLinux environment.

Domain0 is special: it is used for bootup. Only Domain0 has direct access to the physical disks, and the management software running in Domain0 is responsible for mediating access to the hardware devices.

There are two types of control transfer between a domain (VM) and the hypervisor. The first is a synchronous hypercall from the domain to the hypervisor to perform a privileged operation. The second is an asynchronous event from the hypervisor to the domain, which replaces the usual delivery mechanism for device interrupts (e.g., new data received over the network).

Data transfer to and from each domain is mediated by Xen. Rather than emulating existing hardware devices as in full virtualization, Xen exposes a set of clean and simple device abstractions. Data is transferred using shared-memory asynchronous buffer descriptor rings (not to be confused with privilege rings). The I/O descriptor ring implements two queues between the domain and Xen: a request queue and a response queue.
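The descriptor ring is easier to picture with a small sketch. The following Python toy model assumes a fixed-size circular buffer with four indices (request producer, request consumer, response producer, response consumer), where responses reuse the slots of requests that Xen has already consumed. The class name `IORing` and its methods are hypothetical, and a real ring lives in shared memory and carries descriptors that point to data buffers rather than Python objects.

```python
# Toy model of an I/O descriptor ring with a request and a response queue.
# Names are hypothetical; a real ring sits in memory shared with Xen.

class IORing:
    def __init__(self, size=8):
        self.size = size
        self.slots = [None] * size
        self.req_prod = 0   # advanced by the domain when it posts a request
        self.req_cons = 0   # advanced by Xen when it takes a request
        self.rsp_prod = 0   # advanced by Xen when it posts a response
        self.rsp_cons = 0   # advanced by the domain when it takes a response

    def push_request(self, request):
        """Domain side: post a request; returns False if the ring is full."""
        if self.req_prod - self.rsp_cons >= self.size:
            return False
        self.slots[self.req_prod % self.size] = request
        self.req_prod += 1
        return True

    def pop_request(self):
        """Xen side: take the next pending request, or None if there is none."""
        if self.req_cons == self.req_prod:
            return None
        request = self.slots[self.req_cons % self.size]
        self.req_cons += 1
        return request

    def push_response(self, response):
        """Xen side: post a response into the slot of a consumed request."""
        if self.rsp_prod == self.req_cons:
            raise RuntimeError("no consumed request left to answer")
        self.slots[self.rsp_prod % self.size] = response
        self.rsp_prod += 1

    def pop_response(self):
        """Domain side: take the next response, or None if there is none."""
        if self.rsp_cons == self.rsp_prod:
            return None
        response = self.slots[self.rsp_cons % self.size]
        self.rsp_cons += 1
        return response
```

A slot becomes free for a new request only after the domain has consumed the response that occupied it, so the two queues share one buffer without overwriting each other. In this sketch each side polls; in Xen the notifications would arrive through the hypercall and event mechanisms described above.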

The paper provides extensive evaluation results. The short of it is that Xen can host up to 100 virtual machines simultaneously on a server circa 2003, and Xen's performance overhead is only around 3% compared to the unvirtualized case.

One question asked in class during the discussion was: would it make sense to co-design the schedulers of the hypervisor and the guestOS? The hypervisor scheduler could provide hints to the guestOS scheduler about when a device or resource will be available, and the guestOS could use this information to schedule things more cleverly. Would this pay off? How can this be done cleanly?

The virtualization approach treats the guestOS/application as a black box. By treating the guestOS/application as a gray box instead (using some contracts, mutual rely-guarantees, or access to a specification of the application), there may be ways to improve performance. But this is a very fuzzy seed of a thought for now.

